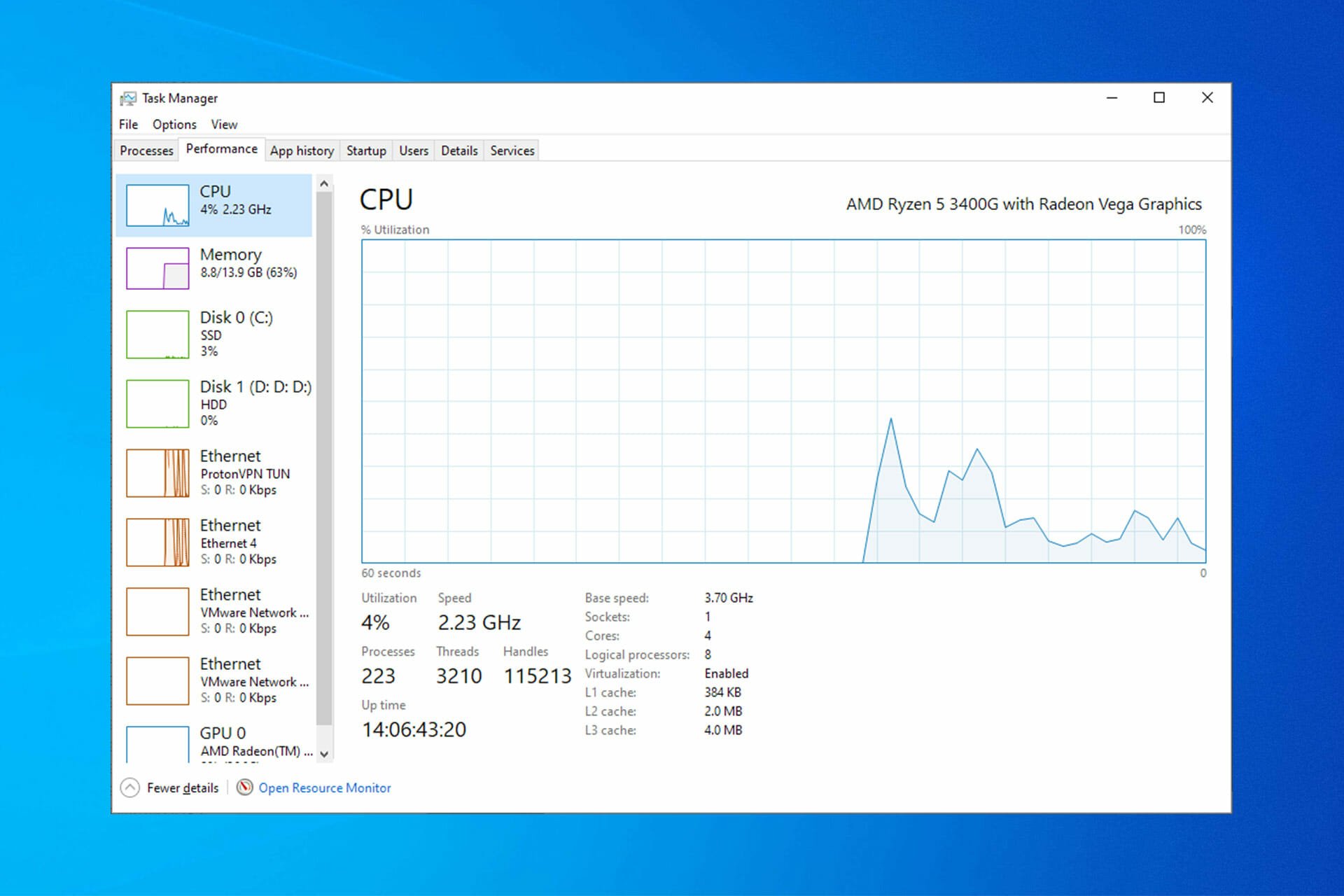

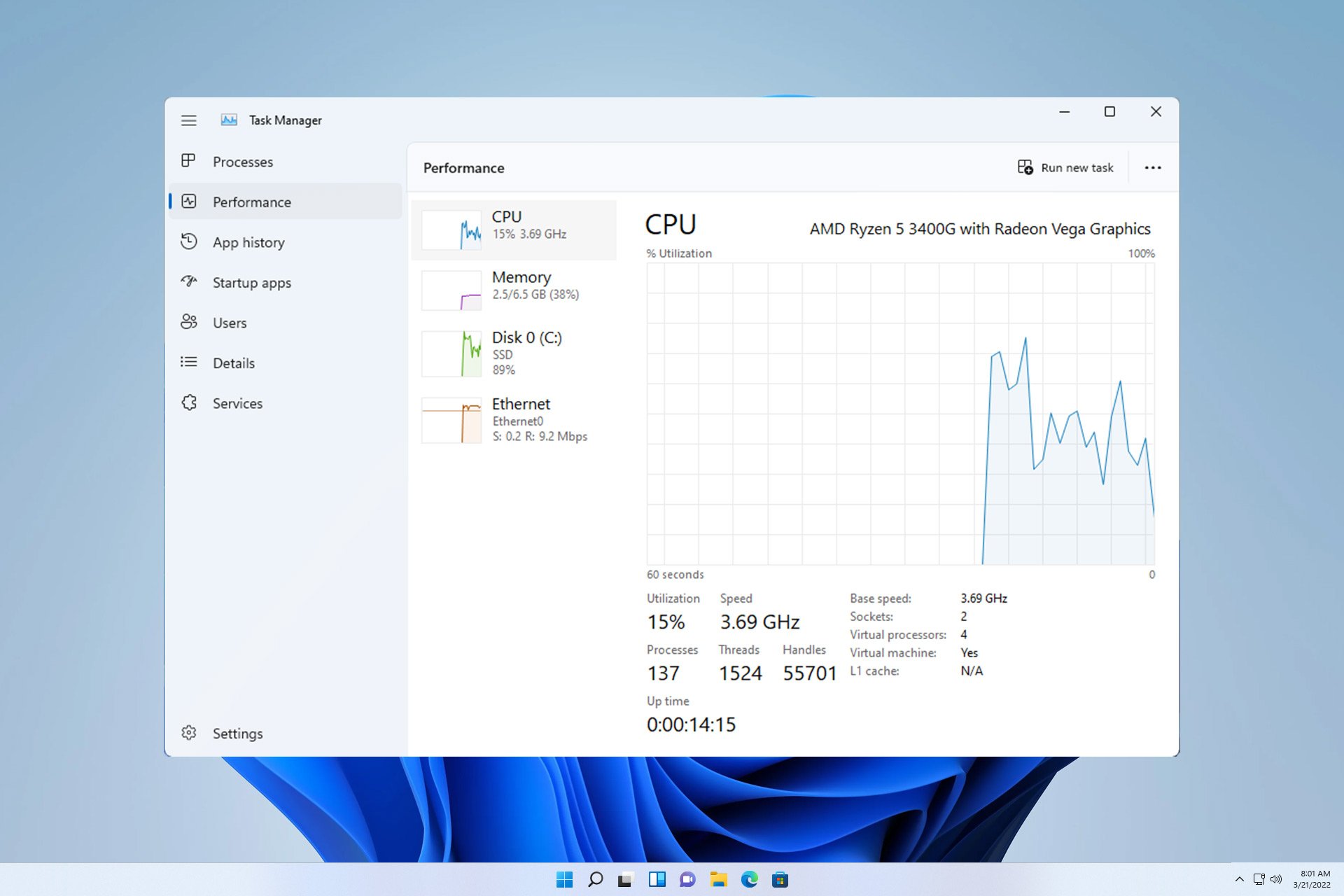

Answer: Why is Cyberpunk using the CPU instead of the GPU? Further answers: Is Cyberpunk CPU-heavy or GPU-heavy?

Please note that the game is both graphics- and processor-intensive, so make sure these components meet or exceed the minimum requirements.

The new CPU optimization feature overrides Windows' core scheduler, forcing Cyberpunk 2077 to run on the CPU's performance cores no matter what. We took the new feature for a spin, but we ran into some performance problems.

If you have an RTX 3060 and want to play Cyberpunk at 60 frames per second, you definitely can. It looks pretty okay, and it definitely keeps up better than it does at 1440p with ray tracing.

Can the RTX 4060 run Cyberpunk 2077? Again, Cyberpunk 2077 is a bit of a demanding bugger, and Frame Generation enables the RTX 4060 to pull off big 1080p moves.

Is Cyberpunk more CPU- or GPU-intensive?

Version 2.1 seems to be a bit more CPU-intensive (even though it's still mostly GPU-intensive), but I'm not sure by how much. I'm trying to keep the frame rate above 75 fps because I've locked V-Sync to that frequency.
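Holding a frame-rate floor is easier to reason about as a per-frame time budget: at a target of F frames per second, each frame has to be finished in 1000 / F milliseconds. A minimal Python sketch of that arithmetic (the function name is illustrative, not from any tool):

```python
def frame_budget_ms(target_fps: float) -> float:
    """Return the time available to produce one frame, in milliseconds."""
    if target_fps <= 0:
        raise ValueError("target_fps must be positive")
    return 1000.0 / target_fps


for fps in (60, 75):
    # A 75 fps floor leaves roughly 13.3 ms per frame; 60 fps leaves about 16.7 ms.
    print(f"{fps} fps -> {frame_budget_ms(fps):.2f} ms per frame")
```

So moving the target from 60 to 75 fps shrinks the time available for each frame by about 3.3 ms.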

Is Cyberpunk CPU- or GPU-dependent? Cyberpunk 2077 is both CPU- and GPU-intensive. The game's richly detailed world requires a powerful GPU to render high-resolution graphics, while its complex AI and physics simulations rely heavily on the CPU's processing power.

If you just want to play the game, perhaps without ray tracing, older 4-core and 6-core CPUs should suffice. Your choice of GPU also plays a role: mainstream parts like an RTX 3060 or RX 6700 XT aren't likely to need as potent a CPU to keep them busy.
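One common way to picture this is a simplified bottleneck model: the frame rate you actually see is roughly capped by whichever component is slower, so delivered fps is about min(CPU-limited fps, GPU-limited fps). The Python sketch below assumes that simplified model (it ignores frame pacing, V-Sync and frame generation), and the input numbers are hypothetical placeholders, not benchmark results.

```python
from typing import Tuple


def estimate_fps(cpu_limited_fps: float, gpu_limited_fps: float) -> Tuple[float, str]:
    """Simplified bottleneck model: the slower component caps the frame rate.

    cpu_limited_fps: frame rate the CPU could sustain if the GPU were never the limit.
    gpu_limited_fps: frame rate the GPU could sustain if the CPU were never the limit.
    Returns the estimated frame rate and which component is the bottleneck.
    """
    if cpu_limited_fps < gpu_limited_fps:
        return cpu_limited_fps, "CPU"
    return gpu_limited_fps, "GPU"


# Hypothetical numbers for illustration only.
# A mainstream GPU limited to 70 fps never pushes a CPU that could manage 90 fps:
print(estimate_fps(cpu_limited_fps=90, gpu_limited_fps=70))   # (70, 'GPU')
# Pair the same CPU with a much faster GPU and the CPU becomes the limit:
print(estimate_fps(cpu_limited_fps=90, gpu_limited_fps=140))  # (90, 'CPU')
```

Under this model, once the GPU is the slower side, a faster CPU does not raise the estimate, which is why mainstream GPUs rarely need a top-tier CPU.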

As my testing shows, the RTX 3060 can handle the demands of modern games with a few compromises, and hopefully that will hold true for a few more years. Even a game like Alan Wake 2 is playable if you turn down enough settings. Still, an RTX 3060 isn't a great investment if you're buying a GPU in 2024.

Can the RTX 4070 run Cyberpunk 2077?

This is probably the way to go. Last but not least, 4K resolution shouldn't be a problem for the 4070. It's a more than capable graphics card, but it's Cyberpunk.

Bottom line: Nvidia's GeForce RTX 4060 Ti is exceptional for gaming at 1080p, but its cut-back memory bandwidth limits it at higher resolutions, making this graphics card a tougher sell for anything more demanding.

Finally, if you're playing at 4K or beyond, the GeForce RTX 3080 and GeForce RTX 3090 deliver the performance to play Cyberpunk 2077 with ray tracing at Ultra settings at this incredibly demanding resolution.

Phantom Liberty is expected to hit 90% CPU usage on 8-core chips. Cyberpunk 2077 developer Filip Pierściński has revealed on X (Twitter) that the game's upcoming Phantom Liberty DLC will be extremely demanding on system hardware, particularly CPUs, with native support for 8-core processors.

Does Cyberpunk use a lot of CPU? With Phantom Liberty, 8-core CPUs may see utilization of around 90%. This is expected, so gamers should not be surprised by how much of the CPU's power the game uses.
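A utilization percentage is easier to interpret as cores' worth of work: on an N-core CPU at u% overall utilization, roughly u/100 × N cores are busy on average. A minimal Python sketch of that arithmetic, assuming the percentage is an average across physical cores (on CPUs with SMT, monitoring tools may average over logical threads instead, so treat the result as a rough figure); the helper name is illustrative:

```python
def busy_cores(utilization_pct: float, core_count: int) -> float:
    """Average number of cores' worth of work implied by an overall utilization figure."""
    if not 0 <= utilization_pct <= 100:
        raise ValueError("utilization_pct must be between 0 and 100")
    return utilization_pct / 100 * core_count


# 90% on an 8-core chip is about 7.2 cores busy, leaving under one core of headroom.
print(f"{busy_cores(90, 8):.1f} cores busy")
```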

Can my GPU run Cyberpunk?

The minimum GPU for ray tracing at 1920×1080 is the GeForce RTX 2060, which lets gamers explore Night City with the Medium ray tracing preset, powered by DLSS. At 2560×1440, we recommend a GeForce RTX 3070 or GeForce RTX 2080 Ti.
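Part of why each step up in resolution calls for a stronger GPU is simple pixel arithmetic: every frame has to shade width × height pixels. A short Python sketch comparing the resolutions mentioned here, assuming "4K" means the common 3840×2160 UHD format:

```python
RESOLUTIONS = {
    "1080p": (1920, 1080),
    "1440p": (2560, 1440),
    "4K UHD": (3840, 2160),  # assumed consumer 4K format
}


def pixel_count(width: int, height: int) -> int:
    """Number of pixels rendered per frame at the given resolution."""
    return width * height


base = pixel_count(*RESOLUTIONS["1080p"])
for name, (w, h) in RESOLUTIONS.items():
    pixels = pixel_count(w, h)
    # Prints each resolution's pixel count and its ratio to 1080p.
    print(f"{name}: {pixels:,} pixels ({pixels / base:.2f}x 1080p)")
```

Rendering cost does not scale perfectly with pixel count, and upscalers such as DLSS render internally at a lower resolution, but the roughly 1.78× jump to 1440p and 4× jump to 4K help explain why higher resolutions are so much more demanding.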

Released four years ago, the NVIDIA RTX 3060 is still a high-quality GPU for mid-tier gaming and budding content creators.

Is the RTX 3060 obsolete? In conclusion, the Nvidia GeForce RTX 3060 is still a solid choice in 2024 if you can get hold of a good custom design for under $300, especially if you prioritize Nvidia features and a large memory capacity.