CUDA is designed to work with programming languages such as C, C++, Fortran and Python. This accessibility makes it easier for specialists in parallel programming to use GPU resources, in contrast to prior APIs like Direct3D and OpenGL, which required advanced skills in graphics programming.CUDA's kernel execution was also consistently faster than OpenCL's, despite the two implementations running nearly identical code. CUDA seems to be a better choice for applications where achieving as high a performance as possible is important.While CUDA cores are more specialized than other types of cores, they offer a significant performance boost for certain types of applications such as time-intensive workloads, gaming, and deep learning. If your application can benefit from parallel computing, then CUDA cores can offer a major performance advantage.

What is the benefit of CUDA : Benefits and Applications of CUDA

Performance Boost: CUDA leverages parallelism for significant speedup. Scientific Computing: Used in simulations, fluid dynamics, quantum chemistry, and more. Deep Learning: Accelerates training and inference in neural networks. Data Analytics: Speeds up data processing and analysis.

Is CUDA faster than CPU

The CUDA (Compute Unified Device Architecture) platform is a software framework developed by NVIDIA to expand the capabilities of GPU acceleration. It allows developers to access the raw computing power of CUDA GPUs to process data faster than with traditional CPUs.

Is CUDA a monopoly : NVIDIA Corporation : Silicon Valley wants to break Nvidia's CUDA software monopoly. Technology giant Nvidia, known for its cutting-edge artificial intelligence (AI) chips, finds itself at a crossroads.

Cuda offers faster rendering times by utilizing the GPU's parallel processing capabilities. RTX technology provides real-time ray tracing and AI-enhanced rendering for more realistic and immersive results. This means that complex machine learning models can be trained and executed much faster, reducing time and costs. Furthermore, CUDA enables efficient memory management and data movement between the CPU and GPU, optimizing the utilization of available resources.

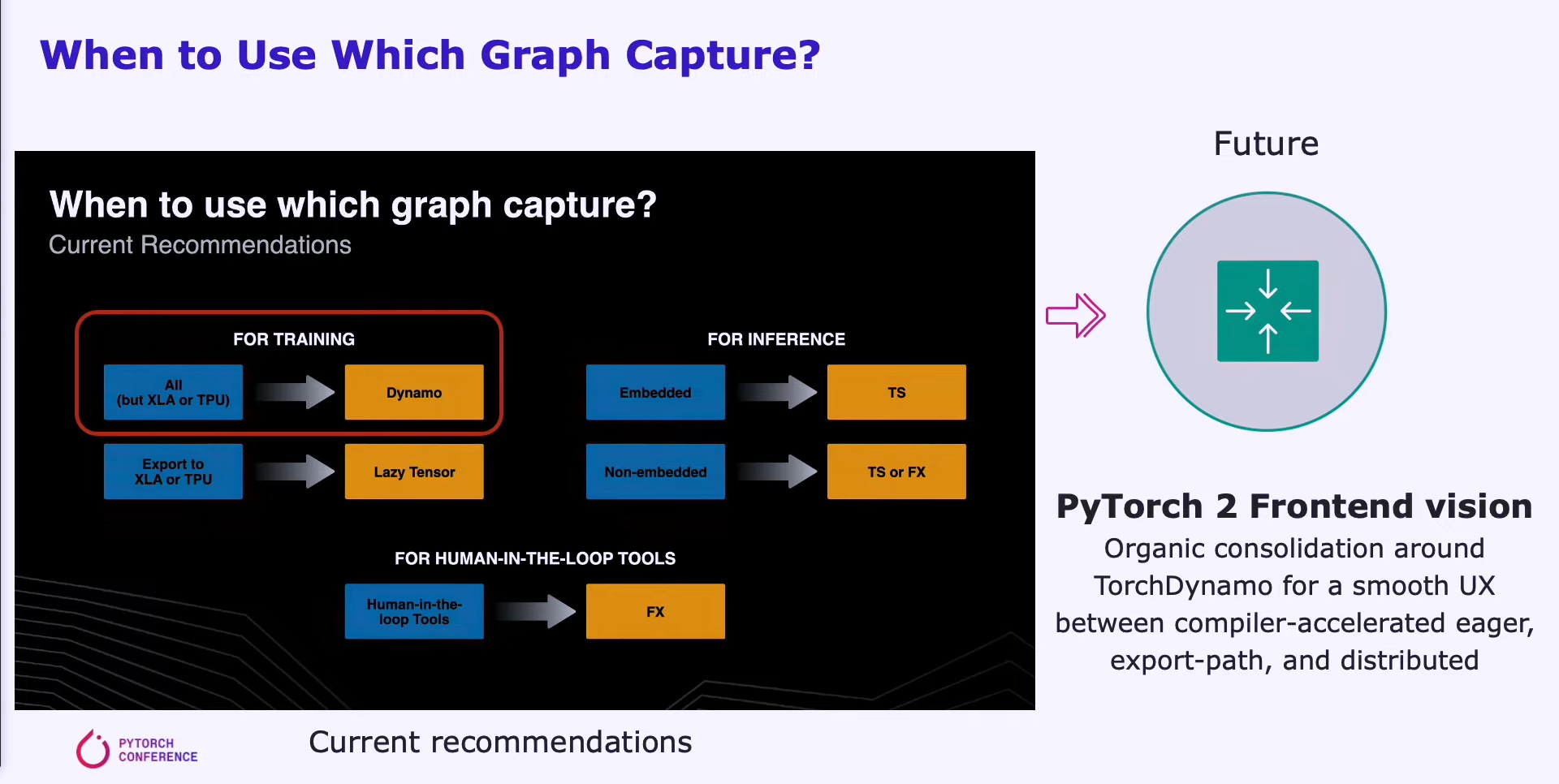

Is PyTorch faster on CUDA

The fastest PyTorch on the GPU is 1.8s (both torch. compile and jit. script ), compared to 0.3s for CUDA.Nvidia's moat is dependent on its CUDA software stack. Now behind in AI chips, Intel and a group of tech heavyweights are teaming up to disrupt CUDA's hold on the industry. Will the new open source software effort work We should find out by the end of the year.The CUDA programming language and the cuDNN-X library for deep learning provide a base on top of which developers have created software like NVIDIA NeMo, a framework to let users build, customize and run inference on their own generative AI models. For instance, in gaming, CUDA cores can render graphics more quickly and efficiently, leading to smoother gameplay and more realistic visuals.

Who is Nvidia’s biggest rival : Nvidia has identified Chinese tech company Huawei as one of its top competitors in various categories such as chip production, AI and cloud services.

Can AMD run CUDA : Unmodified NVIDIA CUDA apps can now run on AMD GPUs thanks to ZLUDA.

Are CUDA cores like CPU cores

While both CUDA cores and CPU cores are responsible for executing computational tasks, they differ significantly in their design, architecture, and intended use cases. Understanding these differences is crucial for determining the most suitable processing unit for a specific task. So, even if other big tech players continue their chip development efforts, Nvidia is likely to remain the top AI chip player for quite some time. Japanese investment bank Mizuho estimates that Nvidia could sell $280 billion worth of AI chips in 2027 as it projects the overall market hitting $400 billion.Of course, while Tesla does have a custom FSD chip on board its vehicles, it also uses Nvidia chips to for training those AI autonomy models. Tesla CEO Elon Musk has pointed to Tesla's full self-driving mode as a key determinant of Tesla's future value.

Is CUDA programming hard : CUDA has a complex memory hierarchy, and it's up to the coder to manage it manually; the compiler isn't much help (yet), and leaves it to the programmer to handle most of the low-level aspects of moving data around the machine.

Why is CUDA so popular?

What makes CUDA so special?

CUDA is designed to work with programming languages such as C, C++, Fortran and Python. This accessibility makes it easier for specialists in parallel programming to use GPU resources, in contrast to prior APIs like Direct3D and OpenGL, which required advanced skills in graphics programming.

In a comparison of two implementations running nearly identical code, CUDA's kernel execution was consistently faster than OpenCL's. CUDA therefore appears to be the better choice for applications where achieving the highest possible performance is important.

While CUDA cores are more specialized than other types of cores, they offer a significant performance boost for certain types of applications, such as time-intensive workloads, gaming, and deep learning. If your application can benefit from parallel computing, CUDA cores can offer a major performance advantage.
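Whether an application "can benefit from parallel computing" is often estimated with Amdahl's law, a standard rule of thumb that is not part of the original text: if a fraction `p` of the runtime parallelizes perfectly across `N` workers, the overall speedup is at most `1 / ((1 - p) + p / N)`. The minimal sketch below assumes perfect scaling of the parallel part and ignores CPU-GPU transfer costs; the function name `amdahl_speedup` is hypothetical.

```python
def amdahl_speedup(parallel_fraction: float, workers: int) -> float:
    """Upper bound on overall speedup predicted by Amdahl's law.

    parallel_fraction: share of the original runtime that can run in parallel (0 to 1).
    workers: number of parallel workers, e.g. GPU threads doing useful work at once.
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if workers < 1:
        raise ValueError("workers must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / workers)


if __name__ == "__main__":
    # With 10,000 workers, a 5% serial portion still caps the speedup just under 20x.
    for p in (0.5, 0.9, 0.95, 0.99):
        print(f"parallel fraction {p:.2f}: {amdahl_speedup(p, 10_000):.1f}x")
```

The takeaway is that the serial part, not the number of CUDA cores, usually limits the achievable gain.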

What is the benefit of CUDA?

Benefits and applications of CUDA:

- Performance boost: CUDA leverages parallelism for significant speedups.
- Scientific computing: used in simulations, fluid dynamics, quantum chemistry, and more.
- Deep learning: accelerates training and inference in neural networks.
- Data analytics: speeds up data processing and analysis.

Is CUDA faster than a CPU?

CUDA (Compute Unified Device Architecture) is a parallel computing platform and programming model developed by NVIDIA for general-purpose computing on GPUs. It gives developers access to the raw computing power of CUDA-capable GPUs, so workloads that can be split into many independent operations can often be processed faster than on a traditional CPU.

Is CUDA a monopoly?

Silicon Valley wants to break Nvidia's CUDA software monopoly. Technology giant Nvidia, known for its cutting-edge artificial intelligence (AI) chips, finds itself at a crossroads.

CUDA offers faster rendering times by using the GPU's parallel processing capabilities. RTX technology provides real-time ray tracing and AI-enhanced rendering for more realistic and immersive results.

The same parallelism means that complex machine learning models can be trained and run much faster, reducing time and cost. CUDA also gives programmers control over memory allocation and data movement between the CPU and the GPU, which helps make efficient use of the available resources.
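Data movement between CPU and GPU is not free: in CUDA C/C++ the copies are typically issued with `cudaMemcpy` (or handled implicitly by unified memory allocated with `cudaMallocManaged`), and their cost can cancel out the compute speedup. The back-of-the-envelope model below is a sketch under stated assumptions: bandwidth and compute times are caller-supplied guesses, latency and copy/compute overlap are ignored, and the names `estimate_offload` and `OffloadEstimate` are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class OffloadEstimate:
    transfer_s: float    # time to copy inputs to the GPU and results back
    gpu_total_s: float   # transfer time plus GPU compute time
    cpu_total_s: float   # time to do the same work on the CPU alone
    worth_offloading: bool


def estimate_offload(bytes_moved: int, link_gb_per_s: float,
                     cpu_compute_s: float, gpu_compute_s: float) -> OffloadEstimate:
    """Back-of-the-envelope check: does GPU compute plus copying beat the CPU?

    Every input is an assumption supplied by the caller (measured or datasheet values).
    The model ignores per-transfer latency and any overlap of copies with computation.
    """
    if link_gb_per_s <= 0:
        raise ValueError("link_gb_per_s must be positive")
    transfer_s = bytes_moved / (link_gb_per_s * 1e9)
    gpu_total_s = transfer_s + gpu_compute_s
    return OffloadEstimate(transfer_s, gpu_total_s, cpu_compute_s,
                           gpu_total_s < cpu_compute_s)


if __name__ == "__main__":
    # Hypothetical numbers, not measurements: 400 MB moved over a 10 GB/s link.
    print(estimate_offload(400_000_000, 10.0, cpu_compute_s=0.5, gpu_compute_s=0.02))
```

When the transfer term dominates, keeping data resident on the GPU across several kernels is usually the lever to pull.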

Is PyTorch faster on CUDA?

In the benchmark quoted here, the fastest PyTorch implementation on the GPU took 1.8 s (with both `torch.compile` and `torch.jit.script`), compared with 0.3 s for the CUDA implementation.

Nvidia's moat depends on its CUDA software stack. Now behind in AI chips, Intel and a group of tech heavyweights are teaming up to disrupt CUDA's hold on the industry. Will the new open-source software effort work? We should find out by the end of the year.

The CUDA programming language and the cuDNN-X library for deep learning provide a base on top of which developers have created software like NVIDIA NeMo, a framework that lets users build, customize and run inference on their own generative AI models.
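Comparisons like the 1.8 s versus 0.3 s figures (a 6x gap) depend heavily on how the timing is done. Two common pitfalls: CUDA kernel launches return before the GPU has finished, so the clock must be stopped only after synchronizing, and `torch.compile` compiles on the first call, so warm-up runs should be excluded. The helper below is a generic sketch of those two precautions, not the benchmark behind the quoted numbers; `benchmark` is a hypothetical name, and for PyTorch one would pass `torch.cuda.synchronize` as `sync`.

```python
import statistics
import time
from typing import Callable, Optional


def benchmark(fn: Callable[[], object], *, warmup: int = 3, repeats: int = 10,
              sync: Optional[Callable[[], None]] = None) -> float:
    """Return the median wall-clock time of one fn() call, in seconds.

    warmup: untimed calls made first, so one-time costs such as JIT compilation
            (for example the first call of a torch.compile'd model) are excluded.
    sync:   called right before starting and right before stopping the clock.
            GPU kernel launches return before the work finishes, so for PyTorch
            on CUDA one would pass torch.cuda.synchronize here.
    """
    if repeats < 1:
        raise ValueError("repeats must be at least 1")
    for _ in range(warmup):
        fn()
    samples = []
    for _ in range(repeats):
        if sync is not None:
            sync()
        start = time.perf_counter()
        fn()
        if sync is not None:
            sync()
        samples.append(time.perf_counter() - start)
    return statistics.median(samples)


if __name__ == "__main__":
    # CPU-only demonstration; no GPU or PyTorch is required to run this file.
    elapsed = benchmark(lambda: sum(range(1_000_000)))
    print(f"median: {elapsed * 1e3:.2f} ms")
```

Reporting the median rather than the mean keeps occasional outliers (other processes, clock boosts) from skewing the comparison.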

In gaming, for instance, CUDA cores can render graphics more quickly and efficiently, leading to smoother gameplay and more realistic visuals.

Who is Nvidia's biggest rival?

Nvidia has identified Chinese tech company Huawei as one of its top competitors in several categories, including chip production, AI and cloud services.

Can AMD GPUs run CUDA?

Unmodified NVIDIA CUDA applications can now run on AMD GPUs thanks to ZLUDA, a drop-in replacement for CUDA.

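Are CUDA cores like CPU cores?

While both CUDA cores and CPU cores execute computational tasks, they differ significantly in design, architecture, and intended use. A CPU has a relatively small number of complex cores optimized for running individual threads quickly, whereas a GPU packs hundreds to thousands of simpler CUDA cores that execute many threads in parallel. Understanding these differences is crucial for choosing the most suitable processing unit for a specific task.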
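One way to see how work is spread over many CUDA cores is the thread-indexing pattern used by a typical 1-D kernel: each thread computes its global index as `blockIdx.x * blockDim.x + threadIdx.x` and skips indices past the end of the data. The pure-Python sketch below only emulates that mapping sequentially on the CPU so it runs without a GPU; the helper names `launch_1d` and `saxpy` are illustrative, not a real library API.

```python
from typing import Callable, List


def launch_1d(n: int, block_dim: int, kernel: Callable[[int, int, int], None]) -> None:
    """Emulate a 1-D CUDA launch, kernel<<<grid_dim, block_dim>>>(...), on the CPU.

    On a GPU the (block, thread) pairs run concurrently across many CUDA cores;
    here they simply run one after another.
    """
    grid_dim = (n + block_dim - 1) // block_dim  # ceil(n / block_dim) blocks
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            kernel(block_idx, block_dim, thread_idx)


def saxpy(a: float, x: List[float], y: List[float],
          threads_per_block: int = 4) -> List[float]:
    """out[i] = a * x[i] + y[i], computed by one emulated thread per element."""
    n = len(x)
    if len(y) != n:
        raise ValueError("x and y must have the same length")
    out = [0.0] * n

    def kernel(block_idx: int, block_dim: int, thread_idx: int) -> None:
        i = block_idx * block_dim + thread_idx  # blockIdx.x * blockDim.x + threadIdx.x
        if i < n:  # the last block may have more threads than remaining elements
            out[i] = a * x[i] + y[i]

    launch_1d(n, threads_per_block, kernel)
    return out


if __name__ == "__main__":
    print(saxpy(2.0, [1.0, 2.0, 3.0, 4.0, 5.0], [10.0] * 5))  # [12.0, 14.0, 16.0, 18.0, 20.0]
```

With 5 elements and 4 threads per block, two blocks (8 threads) are launched and the bounds check discards the 3 surplus threads, which is exactly why real kernels carry the `if (i < n)` guard.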

Even if other big tech players continue their chip development efforts, Nvidia is likely to remain the top AI chip player for quite some time. Japanese investment bank Mizuho estimates that Nvidia could sell $280 billion worth of AI chips in 2027, as it projects the overall market to reach $400 billion.

Of course, while Tesla has a custom FSD chip on board its vehicles, it also uses Nvidia chips for training its AI autonomy models. Tesla CEO Elon Musk has pointed to Tesla's full self-driving mode as a key determinant of the company's future value.

Is CUDA programming hard?

CUDA has a complex memory hierarchy, and it is up to the programmer to manage it manually. The compiler is not much help (yet) and leaves most of the low-level work of moving data around the machine to the programmer.
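A classic example of managing that hierarchy by hand is a per-block sum reduction: each block copies its slice of global memory into fast `__shared__` memory, then threads repeatedly add pairs with a halving stride, separated by `__syncthreads()` barriers. The sketch below emulates that pattern sequentially in plain Python; it assumes a power-of-two block size as in the textbook version, and `block_reduce_sum` is a hypothetical name.

```python
from typing import List


def block_reduce_sum(values: List[float], block_dim: int = 8) -> float:
    """Emulate a per-block shared-memory tree reduction, then sum the block results."""
    if block_dim < 1 or block_dim & (block_dim - 1):
        raise ValueError("block_dim must be a power of two")
    partials = []
    for start in range(0, len(values), block_dim):
        # Each thread copies one element from "global" into "shared" memory,
        # padding with 0.0 when the last block runs past the end of the data.
        shared = [values[start + t] if start + t < len(values) else 0.0
                  for t in range(block_dim)]
        stride = block_dim // 2
        while stride > 0:
            # Threads with tid < stride add their partner's value. On a GPU a
            # __syncthreads() barrier separates these steps; here each pass of
            # the loop simply finishes before the next one starts.
            for tid in range(stride):
                shared[tid] += shared[tid + stride]
            stride //= 2
        partials.append(shared[0])  # thread 0 holds the block's total
    # A real kernel would combine the partials in a second launch or with atomics.
    return sum(partials)


if __name__ == "__main__":
    print(block_reduce_sum([float(i) for i in range(1, 11)]))  # 55.0
```

In each step, threads write only to indices below `stride` and read only from indices at or above it, so the sequential emulation gives the same result as the parallel version; forgetting the barrier on a real GPU is one of the typical bugs that makes this hierarchy hard to manage.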