Why is CUDA fast

CUDA is based on C and C++, and it lets us accelerate computing tasks by parallelizing them on the GPU. This means we can divide a program into many smaller tasks that the GPU executes independently and at the same time, which can significantly improve the program's performance. CUDA (Compute Unified Device Architecture) is a parallel computing platform and programming model developed by NVIDIA for general-purpose GPU acceleration. It gives developers access to the raw computing power of CUDA-capable NVIDIA GPUs, which can process highly parallel workloads much faster than traditional CPUs.

If the software you use supports both CUDA and OpenCL and you have an Nvidia card, use CUDA: it is considered faster than OpenCL much of the time. Note too that Nvidia cards do support OpenCL.
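To make "dividing a program into smaller tasks" concrete, here is a minimal sketch in plain C++ (not CUDA, so it runs on any CPU) of how a CUDA launch typically splits an array into work items. The launch forms a grid of blocks, each block has a fixed number of threads, and each thread handles one element. The function names are hypothetical. Only the index formula mirrors CUDA's built-in `blockIdx`, `blockDim` and `threadIdx` variables.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Number of blocks needed so that grid_dim * block_dim >= n (ceiling division).
std::size_t blocks_needed(std::size_t n, std::size_t block_dim) {
    assert(block_dim > 0);
    return (n + block_dim - 1) / block_dim;
}

// Global index of one thread, mirroring the usual CUDA expression
// blockIdx.x * blockDim.x + threadIdx.x.
std::size_t global_index(std::size_t block, std::size_t block_dim, std::size_t thread) {
    return block * block_dim + thread;
}

// Counts how many "threads" are assigned to each of the n elements.
// Threads whose index is >= n do no work, like the bounds check in a kernel.
std::vector<int> coverage(std::size_t n, std::size_t block_dim) {
    std::vector<int> hits(n, 0);
    const std::size_t grid_dim = blocks_needed(n, block_dim);
    for (std::size_t b = 0; b < grid_dim; ++b) {
        for (std::size_t t = 0; t < block_dim; ++t) {
            const std::size_t i = global_index(b, block_dim, t);
            if (i < n) {
                ++hits[i];
            }
        }
    }
    return hits;
}
```

For 1,000 elements and 256 threads per block, `blocks_needed` returns 4. The launch therefore starts 1,024 threads, and the last 24 skip their work. Every element is covered exactly once, which is why the tasks can run independently.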

What makes CUDA so special : CUDA is designed to work with programming languages such as C, C++, Fortran and Python. This accessibility makes it easier for specialists in parallel programming to use GPU resources, in contrast to prior APIs like Direct3D and OpenGL, which required advanced skills in graphics programming.

Is RTX faster than CUDA

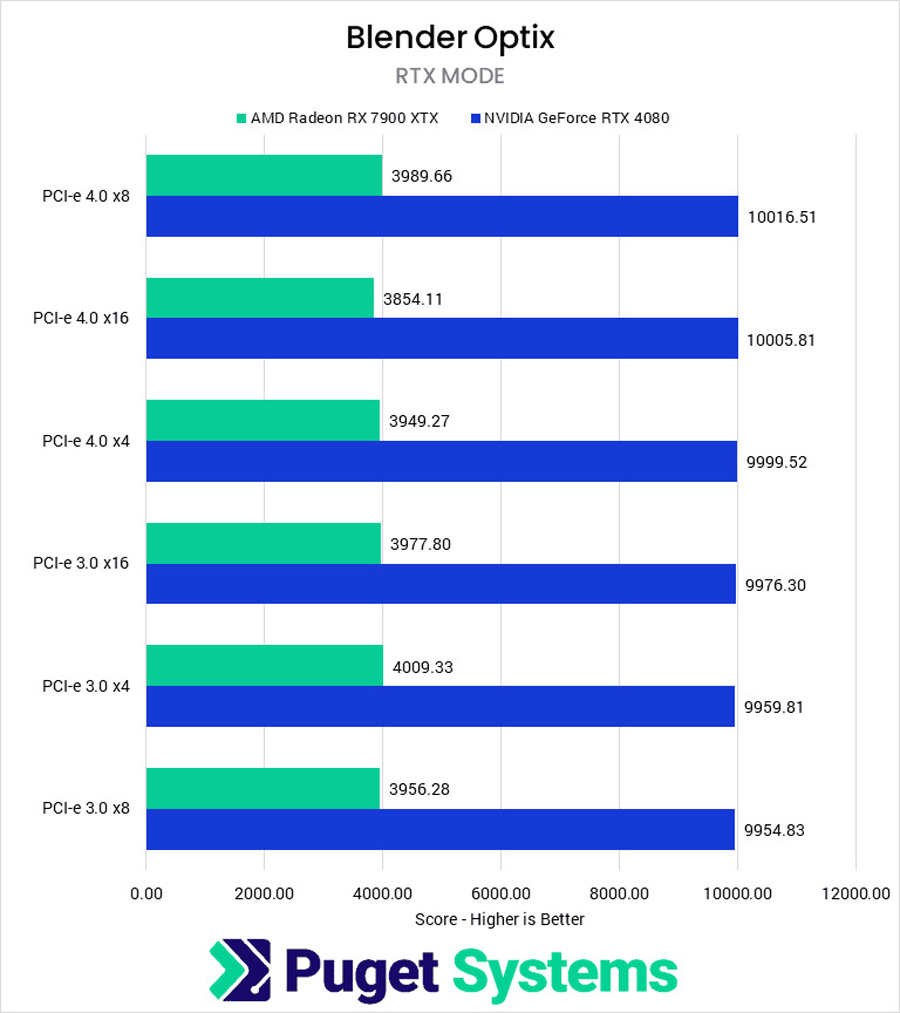

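CUDA and RTX are not direct alternatives: RTX GPUs contain CUDA cores alongside dedicated RT cores and Tensor cores. CUDA offers faster rendering times by utilizing the GPU's parallel processing capabilities, while RTX technology adds real-time ray tracing and AI-enhanced rendering for more realistic and immersive results.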

Can CUDA run on CPU : CUDA code cannot run directly on a CPU, but it can be emulated. In that case, the GPU threads are computed in parallel as iterations of a vectorized loop.
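The sketch below shows one way such emulation can work. It is plain C++ with a hypothetical `emulate_launch` helper, and it is not how any particular emulator is implemented. The kernel body is a function of the block and thread indices, and the emulator calls it for every index pair. The threads of one block form the inner loop, whose iterations are independent.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical emulator: runs `kernel` once for every (block, thread) pair.
// The inner loop over threads stands in for one block's threads; because its
// iterations are independent, an optimizing compiler may vectorize it once the
// kernel is inlined.
template <typename Kernel>
void emulate_launch(std::size_t grid_dim, std::size_t block_dim, Kernel kernel) {
    for (std::size_t block = 0; block < grid_dim; ++block) {
        for (std::size_t thread = 0; thread < block_dim; ++thread) {
            kernel(block, block_dim, thread);
        }
    }
}

// SAXPY (y[i] = a * x[i] + y[i]) written the way a CUDA kernel body would be:
// compute a global index, bounds-check it, then do one element of work.
std::vector<float> saxpy_emulated(float a, const std::vector<float>& x,
                                  std::vector<float> y, std::size_t block_dim) {
    assert(x.size() == y.size());
    assert(block_dim > 0);
    const std::size_t n = x.size();
    const std::size_t grid_dim = (n + block_dim - 1) / block_dim;
    emulate_launch(grid_dim, block_dim,
                   [&](std::size_t block, std::size_t bdim, std::size_t thread) {
                       const std::size_t i = block * bdim + thread;
                       if (i < n) {
                           y[i] = a * x[i] + y[i];
                       }
                   });
    return y;
}
```

Calling `saxpy_emulated(2.0f, {1, 2, 3}, {10, 20, 30}, 2)` launches two "blocks" of two "threads". The fourth thread fails the bounds check, and the result is `{12, 24, 36}`.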

CUDA cores contribute to gaming performance by rendering graphics and processing game physics. Their parallel processing capabilities enable them to perform a large number of calculations simultaneously, leading to smoother and more realistic graphics and more immersive gaming experiences.

Unmodified NVIDIA CUDA apps can now run on AMD GPUs thanks to ZLUDA.

Is CUDA a monopoly

Silicon Valley wants to break Nvidia's CUDA software monopoly. Technology giant Nvidia, known for its cutting-edge artificial intelligence (AI) chips, finds itself at a crossroads. The rise of AI products has sent demand for AI chips soaring, and Nvidia holds 80% of that market. Its competitors AMD, Intel and Qualcomm lag far behind. As AI continues its upward trajectory, Nvidia remains the name everyone is talking about.

The NVIDIA GeForce RTX 3050 6GB features 2,304 CUDA cores and a 70 W TDP.

CUDA C is essentially C/C++ with a few extensions that allow functions to be executed on the GPU by many threads in parallel.

How powerful is 1 CUDA core : CUDA Cores and High-Performance Computing

Each CUDA core is capable of executing a single instruction at a time, but when combined in the thousands, as they are in modern GPUs, they can process large data sets in parallel, significantly reducing computation time.
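A rough way to quantify "how powerful is one core" is theoretical peak throughput. The sketch below is a back-of-the-envelope estimate, not a benchmark. It assumes that each CUDA core completes one single-precision fused multiply-add (2 floating-point operations) per clock cycle. The clock value used below is an input you should check against the card's spec sheet.

```cpp
#include <cassert>

// Theoretical peak FP32 throughput in TFLOPS, assuming every CUDA core completes
// one fused multiply-add (counted as 2 floating-point operations) per clock cycle.
double peak_fp32_tflops(long cuda_cores, double clock_ghz) {
    assert(cuda_cores > 0 && clock_ghz > 0.0);
    const double flops_per_core_per_cycle = 2.0;  // one FMA = multiply + add
    // cores * FLOP/cycle * (clock_ghz * 1e9 cycles/s) = FLOP/s; / 1e12 -> TFLOPS.
    return static_cast<double>(cuda_cores) * flops_per_core_per_cycle * clock_ghz / 1000.0;
}
```

With the RTX 4090's 16,384 cores and its published boost clock of about 2.52 GHz, this gives roughly 82.6 TFLOPS. A single core at the same clock manages only about 5 GFLOPS, so the card's power comes from the core count rather than from any individual core. Real workloads reach less than this peak.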

How many CUDA cores does the RTX 4090 have : 16,384 CUDA cores

The GeForce RTX 4090, built on NVIDIA's Ada Lovelace architecture, has 16,384 CUDA cores, compared with 10,496 on the previous-generation GeForce RTX 3090.
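Both counts follow from the number of streaming multiprocessors (SMs) enabled on each card. The consumer Ada Lovelace and Ampere GPUs both have 128 CUDA cores per SM, with 128 SMs enabled on the RTX 4090 and 82 on the RTX 3090:

```latex
\begin{aligned}
\text{RTX 4090:}\quad & 128\ \text{SMs} \times 128\ \tfrac{\text{cores}}{\text{SM}} = 16{,}384\ \text{cores} \\
\text{RTX 3090:}\quad & 82\ \text{SMs} \times 128\ \tfrac{\text{cores}}{\text{SM}} = 10{,}496\ \text{cores} \\
\text{ratio:}\quad & \frac{16{,}384}{10{,}496} \approx 1.56
\end{aligned}
```

That is about 56% more CUDA cores. Clock speeds and other parts of the architecture also changed between generations, so this ratio is not a performance ratio.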

Is CUDA programming hard

CUDA has a complex memory hierarchy, and it's up to the coder to manage it manually; the compiler isn't much help (yet), and leaves it to the programmer to handle most of the low-level aspects of moving data around the machine.

Nvidia has been investing in this software since the mid-2000s, and has long encouraged developers to use it to build and test AI applications. This has made CUDA the de facto industry standard.

Nvidia's moat depends on its CUDA software stack. Now behind in AI chips, Intel and a group of tech heavyweights are teaming up to disrupt CUDA's hold on the industry. Will the new open-source software effort work? We should find out by the end of the year.
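The manual memory management mentioned at the start of this answer can be illustrated with a common CUDA pattern, emulated here on the CPU in plain C++. Each block copies its slice of the input into a small fast buffer and reduces it there. In this sketch, `tile` stands in for on-chip `__shared__` memory, and the function name is hypothetical. On a real GPU, each halving step would be separated by a `__syncthreads()` barrier.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// CPU emulation of a per-block tree reduction. Returns one partial sum per block.
// `tile` stands in for a block's __shared__ memory: each block first copies
// ("stages") its slice of the input into it, then reduces it in place.
std::vector<float> block_partial_sums(const std::vector<float>& input, std::size_t block_dim) {
    // The halving loop below assumes a power-of-two block size.
    assert(block_dim > 0 && (block_dim & (block_dim - 1)) == 0);
    const std::size_t grid_dim = (input.size() + block_dim - 1) / block_dim;
    std::vector<float> partial(grid_dim, 0.0f);
    std::vector<float> tile(block_dim);
    for (std::size_t block = 0; block < grid_dim; ++block) {
        // Stage: each "thread" t loads one element, padding with 0 past the end.
        for (std::size_t t = 0; t < block_dim; ++t) {
            const std::size_t i = block * block_dim + t;
            tile[t] = i < input.size() ? input[i] : 0.0f;
        }
        // Reduce: halve the active range each step. On a GPU, a __syncthreads()
        // barrier would separate consecutive steps.
        for (std::size_t stride = block_dim / 2; stride > 0; stride /= 2) {
            for (std::size_t t = 0; t < stride; ++t) {
                tile[t] += tile[t + stride];
            }
        }
        partial[block] = tile[0];  // "thread 0" writes the block's result
    }
    return partial;
}
```

For the values 1 to 10 with 4 threads per block, the partial sums are `{10, 26, 19}`. In a real program, those partial sums would still have to be moved back to the host or reduced by another launch. That is exactly the kind of data movement the programmer has to arrange by hand.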

What company has 95% of the market for graphics processors : Nvidia

Nvidia is the engine of the AI revolution

The company holds 98% market share in data center GPUs, over 95% market share in workstation graphics processors, and over 80% market share in AI chips, according to analysts.
