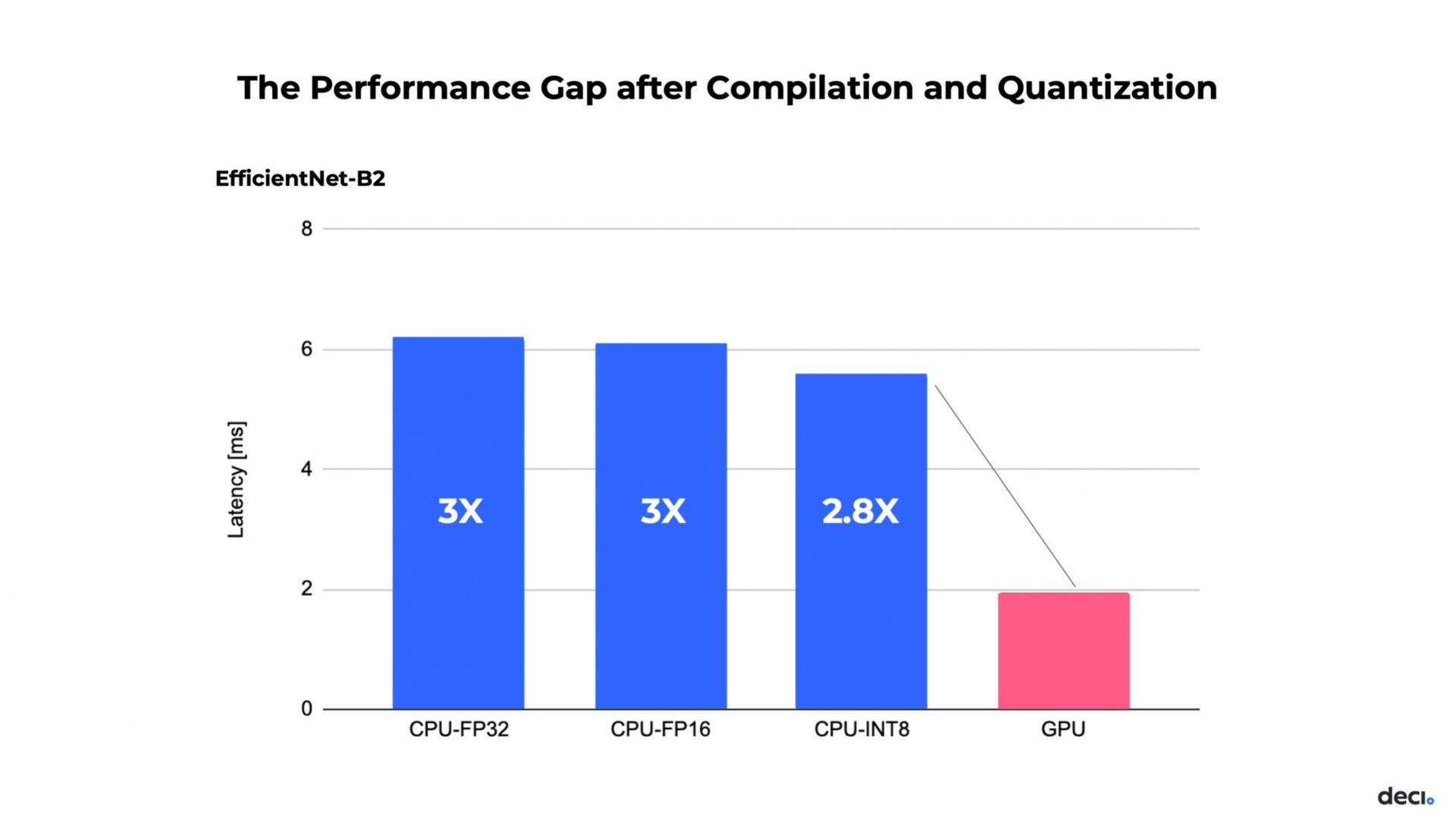

The net result is that GPUs perform technical calculations faster and with greater energy efficiency than CPUs. That means they deliver leading performance for AI training and inference, as well as gains across a wide array of applications that use accelerated computing.

While AI relies primarily on programming algorithms that emulate human thinking, hardware is an equally important part of the equation. The three main hardware solutions for AI operations are field programmable gate arrays (FPGAs), graphics processing units (GPUs) and central processing units (CPUs).

Choosing between a CPU and a GPU for deep learning therefore depends on your specific needs and budget. If you're working with smaller models or have limited resources, a CPU might be sufficient. For large-scale projects requiring fast training and inference, a GPU is likely the better choice.

Why can’t a GPU replace a CPU : On highly parallel workloads, GPUs can process data far faster than a CPU thanks to massive parallelism, but they are not as versatile. CPUs have large and broad instruction sets and manage every input and output of a computer, which a GPU cannot do.

Can AI run without a GPU

AI generally requires a lot of matrix calculations, and GPUs are hardware specialized in doing exactly those. A CPU can do them too, but it is usually much slower.
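To give a rough sense of what "a lot of matrix calculations" means, the sketch below counts the multiply-add operations in a single matrix product. The layer dimensions are hypothetical and chosen only for illustration; they do not come from any particular model.

```python
def matmul_multiply_adds(m: int, k: int, n: int) -> int:
    """Multiply-add count for an (m x k) by (k x n) matrix product."""
    # Each of the m * n output entries is a dot product of length k.
    return m * n * k


# Hypothetical dense layer: a batch of 32 inputs, 4096 features in, 4096 out.
ops = matmul_multiply_adds(32, 4096, 4096)
print(f"{ops:,} multiply-adds for one layer, one forward pass")
```

A CPU can work through this count, but it has to do so with far fewer arithmetic units operating at once, which is where the speed gap comes from.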

Why is a GPU preferred over a CPU : A graphics processing unit (GPU) is a hardware component similar to a CPU but more specialized. It handles complex mathematical operations that run in parallel more efficiently than a general-purpose CPU can.

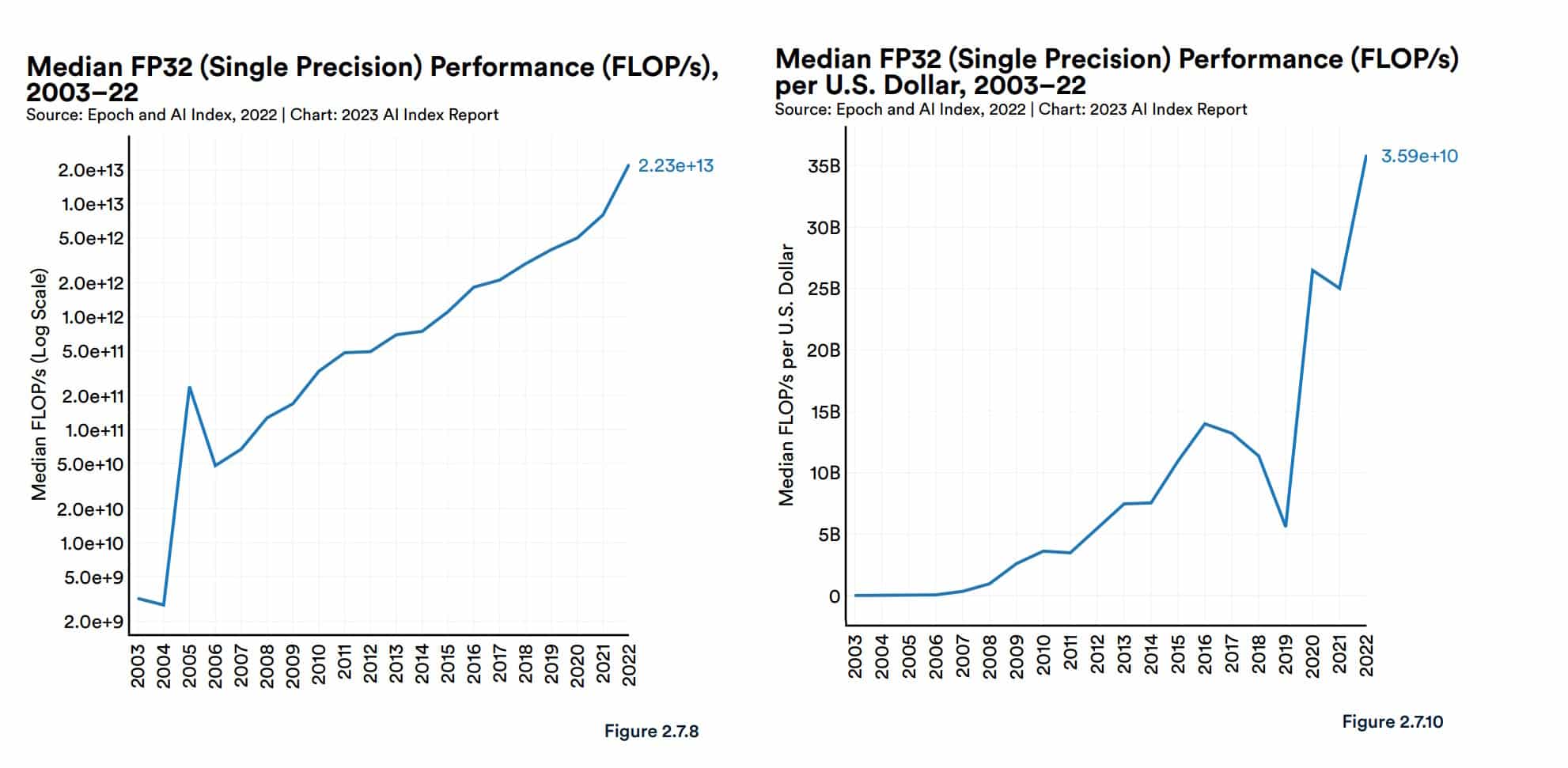

AI Growth Powered By GPU Advances

The ideal hardware for the heavy work of AI systems is the graphics processing unit, or GPU. These specialized processors are built to run many operations in parallel. As a general rule, GPUs are a safer bet for fast machine learning because, at its heart, model training consists of simple matrix math calculations, which can be sped up greatly when the computations are carried out in parallel.
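The reason matrix math parallelizes so well is that each entry of the output depends only on one row and one column of the inputs, never on another output entry. The following is a minimal pure-Python sketch of that property, not a GPU implementation: it fills in the output cells in a shuffled order and still gets the same product. The function names are illustrative.

```python
import random


def output_cell(a, b, i, j):
    """Dot product of row i of a with column j of b."""
    return sum(a[i][k] * b[k][j] for k in range(len(b)))


def matmul_in_any_order(a, b, seed=0):
    """Compute a @ b, visiting the output cells in a shuffled order."""
    rows, cols = len(a), len(b[0])
    cells = [(i, j) for i in range(rows) for j in range(cols)]
    random.Random(seed).shuffle(cells)  # the order does not matter
    result = [[0] * cols for _ in range(rows)]
    for i, j in cells:
        # No cell reads another cell's result, so all of them could run at once.
        result[i][j] = output_cell(a, b, i, j)
    return result


a = [[1, 2], [3, 4]]
b = [[5, 6], [7, 8]]
print(matmul_in_any_order(a, b))  # [[19, 22], [43, 50]]
```

Because the order is irrelevant, hardware with thousands of arithmetic units can assign each cell (or block of cells) to a different unit and compute them simultaneously.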

Does AI inference need a GPU

In practice, the choice between CPU-based and GPU-based AI inference depends on the specific requirements of the application. Some AI workloads benefit more from the parallel processing capabilities of GPUs, while others may prioritize low latency and versatile processing, which CPUs can provide.

The CPU handles all the tasks required for all software on the server to run correctly. A GPU, on the other hand, supports the CPU by performing concurrent calculations. A GPU can complete simple and repetitive tasks much faster because it can break the task down into smaller components and finish them in parallel.

In gaming, CPUs are still important but focus more on behind-the-scenes sequential logic and physics; their impact on performance is less pronounced than that of GPUs, which take on the crucial role of handling visual quality and resolution. That makes powerful GPUs more essential for demanding modern games.

Nvidia's other strength is CUDA, a software platform that allows customers to fine-tune the performance of its processors. Nvidia has been investing in this software since the mid-2000s and has long encouraged developers to use it to build and test AI applications. This has made CUDA the de facto industry standard.

Why does AI use Nvidia : The parallel computing that GPUs handle is more efficient for AI workloads than the sequential processing CPUs are built for, and therefore more valuable. Programmers have also found that Nvidia's GPUs are much better suited to building AI software.

Why Nvidia chips for AI : Once Nvidia realised that its accelerators were highly efficient at training AI models, it focused on optimising them for that market. Its chips have kept pace with ever more complex AI models: in the decade to 2023 Nvidia increased the speed of its computations 1,000-fold.

Why does AI training use a GPU

The GPU handles the more demanding mathematical and geometric computations. This means GPUs can provide superior performance for AI training and inference while also benefiting a wide range of accelerated computing workloads.

The efficiency of GPUs in performing parallel computations drastically reduces the time required for training and inference in AI models. This speed is crucial for applications requiring real-time processing and decision-making, such as autonomous vehicles and real-time language translation.

Memory-bound problems: GPUs generally have less memory available than CPUs, and their memory bandwidth can be a limiting factor. If a problem requires a large amount of memory or involves memory-intensive operations, it may not be well suited to a GPU.
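The memory limit can be checked with back-of-the-envelope arithmetic before picking hardware. This is a minimal sketch that assumes memory use is dominated by the model weights. It ignores activations, optimizer state and framework overhead, so real requirements will be higher. The parameter count and the 24 GB device size are hypothetical.

```python
def weights_size_gb(num_parameters: int, bytes_per_parameter: int) -> float:
    """Approximate size of the model weights in gigabytes (1 GB = 1e9 bytes)."""
    return num_parameters * bytes_per_parameter / 1e9


def fits_on_device(num_parameters: int, bytes_per_parameter: int,
                   device_memory_gb: float) -> bool:
    """True if the weights alone fit in the given amount of device memory."""
    return weights_size_gb(num_parameters, bytes_per_parameter) <= device_memory_gb


# Hypothetical model: 7 billion parameters.
print(weights_size_gb(7_000_000_000, 2))                       # 14.0 (16-bit floats)
print(fits_on_device(7_000_000_000, 2, device_memory_gb=24))   # True
print(fits_on_device(7_000_000_000, 4, device_memory_gb=24))   # False (32-bit: 28 GB)
```

When the weights do not fit, the usual options are a lower-precision format, splitting the model across several devices, or falling back to the larger but slower system memory attached to the CPU.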

Why is a GPU more efficient than a CPU : The CPU handles all the tasks required for all software on the server to run correctly. A GPU, on the other hand, supports the CPU by performing concurrent calculations. A GPU can complete simple and repetitive tasks much faster because it can break the task down into smaller components and finish them in parallel.
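The "break the task down and finish the pieces in parallel" pattern can be sketched on a CPU with Python's standard library. This only illustrates the decomposition: in standard CPython, threads do not run this kind of arithmetic truly simultaneously, whereas a GPU runs thousands of such pieces at once in hardware. The helper names are illustrative.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_chunks(values, num_chunks):
    """Split a list into at most num_chunks contiguous pieces."""
    size = max(1, -(-len(values) // num_chunks))  # ceiling division, at least 1
    return [values[i:i + size] for i in range(0, len(values), size)]


def sum_of_squares(chunk):
    """The simple, repetitive task applied to one piece of the data."""
    return sum(x * x for x in chunk)


def parallel_sum_of_squares(values, num_workers=4):
    chunks = split_into_chunks(values, num_workers)
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        # Each chunk is independent, so the workers never wait on each other.
        partial_sums = list(pool.map(sum_of_squares, chunks))
    # Combine the partial results into the final answer.
    return sum(partial_sums)


data = list(range(1, 101))
print(parallel_sum_of_squares(data))  # 338350
```

The same three steps (split, process independently, combine) underlie GPU kernels, with the difference that the hardware provides the parallel workers.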

Answer: Why does AI use GPU instead of CPU? More answers – Why are GPUs better for AI than CPUs
