What is the use of XAI? Explainable artificial intelligence (XAI) is a set of processes and methods that allows human users to comprehend and trust the results and output created by machine learning algorithms. Explainable AI is used to describe an AI model, its expected impact, and its potential biases. For example, hospitals can use explainable AI for cancer detection and treatment, where algorithms show the reasoning behind a given model's decision-making. This makes it easier for doctors not only to make treatment decisions but also to provide data-backed explanations to their patients.

XAI should not be confused with X.AI Corp., doing business as xAI, an American startup company working in the area of artificial intelligence (AI). Founded by Elon Musk in March 2023, xAI's stated goal is "to understand the true nature of the universe".

Why do we need explainable AI? The ability to explain how a model reaches its results is critical: being able to interpret a machine learning model increases trust in it, which is essential in scenarios involving financial, health care, and life-and-death decisions.

Is XAI deep learning?

No. XAI (explainable artificial intelligence) is not a type of model but a goal: making machine learning models easier to explain and interpret. It applies to deep learning models as well as to simpler ones.
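Because XAI is about explaining models rather than being a kind of model, many of its techniques treat the model as a black box. The sketch below illustrates one such post-hoc technique, occlusion (also called feature ablation): each input feature is replaced in turn with a baseline value, and the resulting drop in the model's output is reported as that feature's attribution. The function names, the toy `black_box` model, and the all-zeros baseline are hypothetical choices for illustration; the same loop would work unchanged if `black_box` were a deep neural network.

```python
from collections.abc import Callable, Sequence


def occlusion_attributions(
    model: Callable[[Sequence[float]], float],
    x: Sequence[float],
    baseline: Sequence[float],
) -> list[float]:
    """Post-hoc, model-agnostic attribution by occlusion.

    For each feature i, replace x[i] with baseline[i], re-run the model,
    and report how much the output dropped. The model is only called,
    never inspected, so it can be any black box.
    """
    if len(x) != len(baseline):
        raise ValueError("x and baseline must have the same length")
    original = model(x)
    attributions = []
    for i in range(len(x)):
        occluded = list(x)
        occluded[i] = baseline[i]  # "switch off" feature i only
        attributions.append(original - model(occluded))
    return attributions


def black_box(features: Sequence[float]) -> float:
    # Hypothetical opaque model: uses the first two features
    # (including an a * b interaction) and ignores the third.
    a, b, _ = features
    return 2.0 * a + a * b


if __name__ == "__main__":
    print(occlusion_attributions(black_box, [1.0, 3.0, 5.0], [0.0, 0.0, 0.0]))
```

For the input `[1.0, 3.0, 5.0]` the attributions come out as `[5.0, 3.0, 0.0]`: the ignored third feature correctly gets zero, but the two used features add up to more than the model's output of 5.0 because of the `a * b` interaction. Methods based on Shapley values, such as SHAP, are designed to split such interactions so that attributions add up to the difference between the model's output and a reference output.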

Is ChatGPT explainable AI? No. ChatGPT is the antithesis of XAI (explainable AI): it is not a tool that should be used in situations where trust and explainability are critical requirements.

The overall goal of XAI is to advance human understanding of artificial systems in order to satisfy a given desideratum (a desired property such as trust or fairness).

Musk incorporated his company xAI in Nevada in March 2023 and reportedly purchased “roughly 10,000 graphics processing units”, hardware that is required to develop and run state-of-the-art AI systems.

What is an example of XAI?

Explainable AI (“XAI” for short) is AI that humans can understand. To illustrate why explainability matters, take as a simple example an AI system that a bank uses to vet mortgage applications: the model decides which mortgages to approve. If it rejects an application, the bank needs to be able to tell the applicant which factors drove that decision.

When Elon Musk created his artificial-intelligence startup xAI in 2023, he said its researchers would work on existential problems like understanding the nature of the universe. Musk is also using xAI to pursue a more worldly goal: joining forces with his social-media company X.

Roko's basilisk is a thought experiment which states that an otherwise benevolent artificial superintelligence (AI) in the future would be incentivized to create a virtual reality simulation to torture anyone who knew of its potential existence but did not directly contribute to its advancement or development, in order …
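Returning to the mortgage example above, one inherently interpretable approach is a linear scoring model: each feature's contribution is simply its weight times its value, so the factors behind an approval or rejection can be read off directly. The sketch below assumes hypothetical feature names, weights, bias, and threshold, and assumes the inputs have already been scaled to comparable ranges; a real lender's model and data would differ.

```python
from dataclasses import dataclass

# Hypothetical weights for a transparent, linear mortgage-scoring model.
# Inputs are assumed to be pre-scaled so the weights are comparable.
WEIGHTS: dict[str, float] = {
    "income": 0.5,
    "credit_score": 0.25,
    "debt_to_income": -1.0,
    "missed_payments": -0.75,
}
BIAS = 0.0
THRESHOLD = 0.0  # approve when the score is at or above this value


@dataclass
class Decision:
    approved: bool
    score: float
    contributions: dict[str, float]  # per-feature weight * value


def decide(applicant: dict[str, float]) -> Decision:
    """Score an applicant and keep each feature's additive contribution."""
    contributions = {name: w * applicant[name] for name, w in WEIGHTS.items()}
    score = BIAS + sum(contributions.values())
    return Decision(score >= THRESHOLD, score, contributions)


def explain(decision: Decision, top_k: int = 2) -> list[str]:
    """List the top_k features that pushed the score toward the outcome."""
    # For approvals, rank by largest positive contribution;
    # for rejections, rank by largest negative contribution.
    direction = 1.0 if decision.approved else -1.0
    ranked = sorted(
        decision.contributions.items(),
        key=lambda item: direction * item[1],
        reverse=True,
    )
    return [f"{name} ({value:+.2f})" for name, value in ranked[:top_k]]


if __name__ == "__main__":
    applicant = {"income": 1.0, "credit_score": 2.0,
                 "debt_to_income": 1.5, "missed_payments": 1.0}
    decision = decide(applicant)
    print("approved" if decision.approved else "rejected", decision.score)
    print(explain(decision))
```

For the sample applicant the score is -1.25, so the application is rejected, and `explain` names `debt_to_income (-1.50)` and `missed_payments (-0.75)` as the two factors that pushed it below the threshold. The trade-off is that a linear model may be less accurate than a more complex one, which is why post-hoc explanation methods exist for models that are not interpretable by design.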

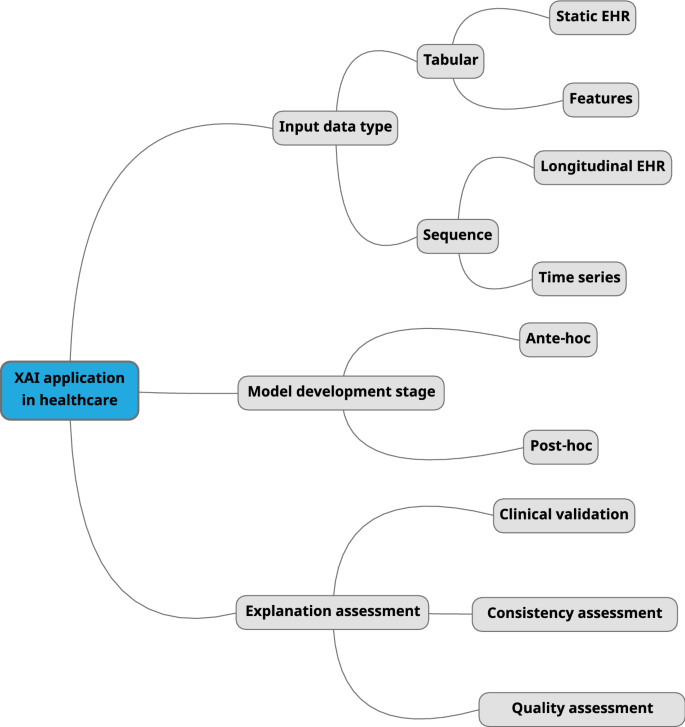

What is the ultimate goal of explainable AI? XAI refers to a system that attempts to make its inner workings transparent to the end user by providing sufficient detail. XAI pursues several goals, including transparency, causality, privacy, fairness, trust, usability, and reliability (Figure 1).

Who owns ChatGPT?

OpenAI LP

ChatGPT is owned by OpenAI, an artificial intelligence research lab consisting of the for-profit OpenAI LP and its parent company, the non-profit OpenAI Inc.

Elon Musk, CEO of Tesla and SpaceX and owner of Twitter (now X), announced the debut of a new AI company, xAI, in July 2023, with the goal to "understand the true nature of the universe." xAI has been organized in Nevada as a for-profit benefit corporation, a structure that allows the company to prioritize having a positive impact on society over its obligations to shareholders, according to a late November filing with the state of Nevada.

Is Elon Musk scared of AI? Elon Musk cares about AI because he believes it is likely to move beyond human control: “A.I. isn't necessarily bad it's just definitely going to be outside of human control.” Since he believes we can't stop it, he concludes we should merge with it…
