The main difference between a CPU and a CUDA GPU is that a CPU is designed to run a small number of tasks at a time, each as quickly as possible, whereas a CUDA GPU is designed to run a very large number of tasks simultaneously. CUDA GPUs use a parallel computing model, meaning many calculations happen at once instead of executing in sequence.

Compute Unified Device Architecture (CUDA) is a proprietary parallel computing platform and application programming interface (API) that allows software to use certain types of graphics processing units (GPUs) for accelerated general-purpose processing, an approach called general-purpose computing on GPUs (GPGPU).

CUDA cores, with their parallel processing capabilities, play a significant role in machine learning. Machine learning algorithms, particularly deep learning algorithms, involve a large number of matrix multiplications. These operations can be parallelized and executed efficiently on CUDA cores.
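To see why matrix multiplication parallelizes so well, here is a minimal Python sketch (plain Python, not CUDA code). Each output cell depends only on one row of the first matrix and one column of the second, so every call to the hypothetical helper `cell` could in principle run on its own GPU thread. Here the calls simply run one after another on the CPU.

```python
# Minimal sketch: every output cell of C = A @ B is an independent dot product.
# `cell` and `matmul` are illustrative names, not part of any CUDA API.

def cell(a, b, i, j):
    """Compute C[i][j] from row i of a and column j of b."""
    return sum(a[i][k] * b[k][j] for k in range(len(b)))

def matmul(a, b):
    """Build C cell by cell; no cell reads another cell's result."""
    rows, cols = len(a), len(b[0])
    return [[cell(a, b, i, j) for j in range(cols)] for i in range(rows)]

a = [[1, 2], [3, 4]]
b = [[5, 6], [7, 8]]
c = matmul(a, b)
print(c)  # [[19, 22], [43, 50]]
```

Because no cell needs another cell's result, the order in which the cells are computed does not matter, which is the property a GPU exploits.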

What is the difference between a CPU and a GPU : The CPU handles all the tasks required for all software on the server to run correctly. A GPU, on the other hand, supports the CPU by performing calculations concurrently. A GPU can complete simple and repetitive tasks much faster because it can break the task down into smaller components and finish them in parallel.

Is CUDA faster than CPU

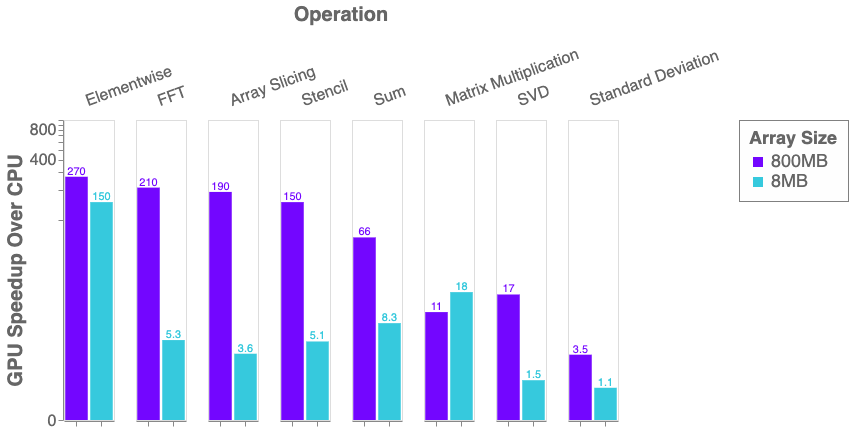

It is interesting to note that for small matrices the task runs faster on the CPU, whereas for larger arrays CUDA outperforms the CPU by a large margin. On a large scale, the CUDA times do not appear to increase, but plotting the CUDA times on their own shows that they also increase linearly.
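One way to reason about this crossover is a toy cost model in which the GPU pays a fixed overhead per call (launch and data transfer) but then processes work much faster. The sketch below is only an illustration: every constant is a made-up assumption, not a measurement from the benchmark above, and the function names are hypothetical.

```python
# Toy cost model with made-up numbers; none of these constants are measurements.
GPU_OVERHEAD_S = 1e-4    # assumed fixed cost per GPU call (launch + transfer)
CPU_ELEMS_PER_S = 1e8    # assumed CPU throughput in work items per second
GPU_ELEMS_PER_S = 1e10   # assumed GPU throughput in work items per second

def cpu_time(n):
    """Modelled CPU time: purely proportional to the amount of work."""
    return n / CPU_ELEMS_PER_S

def gpu_time(n):
    """Modelled GPU time: fixed overhead plus a (smaller) linear term."""
    return GPU_OVERHEAD_S + n / GPU_ELEMS_PER_S

def crossover():
    """Problem size at which both models predict the same time."""
    return GPU_OVERHEAD_S / (1 / CPU_ELEMS_PER_S - 1 / GPU_ELEMS_PER_S)

for n in (1_000, 100_000, 10_000_000):
    faster = "CPU" if cpu_time(n) < gpu_time(n) else "GPU"
    print(f"n={n:>10,}  cpu={cpu_time(n):.6f}s  gpu={gpu_time(n):.6f}s  -> {faster}")
```

Under these assumptions the CPU wins at the smallest size and the GPU wins at the two larger ones. The GPU time still grows linearly with `n`, but its slope is so small next to the CPU's that it looks flat when both are plotted on the same axis.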

How fast is CUDA compared to CPU : Because operations in CUDA are asynchronous, I also need to add a synchronization statement so that the elapsed time is printed only after all CUDA tasks are done. The result is almost 42 times faster than running on the CPU. A model that needs several days of training on a CPU may now take only a few hours on a GPU.

Without that synchronization, you are not measuring the actual time the kernel or the data transfer takes but “something else”, because of the asynchronous execution mentioned above. If you don't synchronize the code, the CPU just runs ahead and can start and stop the timer while the data is still being transferred.

GPU cores are slower than CPU cores for serial computing but much faster for parallel computing, because a GPU has thousands of weaker cores that are best suited to parallel workloads. GPU cores are specialized processors for handling graphics manipulations.
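The timing pitfall described above can be reproduced without a GPU. The following sketch is an analogy only: it uses a Python thread pool as a stand-in for the GPU's work queue, and `slow_task` and `timed_launch` are hypothetical names. On a real GPU the waiting step would be an explicit synchronize call, for example `cudaDeviceSynchronize()` in CUDA C++ or `torch.cuda.synchronize()` in PyTorch.

```python
import time
from concurrent.futures import ThreadPoolExecutor

def slow_task(seconds):
    """Stand-in for GPU work; it just sleeps."""
    time.sleep(seconds)
    return seconds

def timed_launch(wait):
    """Time an asynchronous launch, optionally waiting for it to finish."""
    with ThreadPoolExecutor(max_workers=1) as pool:
        start = time.perf_counter()
        future = pool.submit(slow_task, 0.2)  # returns immediately, like a kernel launch
        if wait:
            future.result()                   # analogous to a synchronize call
        elapsed = time.perf_counter() - start # timer stops before the pool shuts down
    return elapsed

unsynced = timed_launch(wait=False)
synced = timed_launch(wait=True)
print(f"without wait: {unsynced:.4f}s, with wait: {synced:.4f}s")
```

Without the wait, the timer only captures how long it took to hand the work over, so the reported time is far shorter than the 0.2 seconds the task actually needs.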

Is it better to run on CPU or GPU

While still important, CPUs focus more on behind-the-scenes sequential logic and physics; their impact on performance is less pronounced than that of GPUs, which take on the crucial role of handling visual quality and resolution, making powerful GPUs more essential for demanding modern games.

CUDA is based on C and C++, and it allows us to accelerate computing tasks by parallelizing them on the GPU. This means we can divide a program into smaller tasks that can be executed independently on the GPU, which can significantly improve the performance of the program.

The Edge of GPUs in Deep Learning Inference

Here's why GPUs often outperform CPUs in this arena. Parallel processing capabilities: GPUs are equipped with thousands of cores, enabling them to excel at parallel processing.

GPUs mainly enhance images and render graphics significantly faster than CPUs. Combined with high-end computer components, GPUs can render graphics up to 100 times faster than CPUs. Despite their high speeds, GPUs are usually designed to perform simple tasks.
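The idea of splitting a program into many small, simple, independent tasks can be sketched as follows. This is a sequential Python emulation, not real CUDA code: `vector_add_kernel` and `launch` are hypothetical names, and the nested loops stand in for work a GPU would spread across its cores. The index calculation mirrors the `blockIdx.x * blockDim.x + threadIdx.x` expression commonly used in CUDA kernels.

```python
# Sequential emulation of a CUDA-style launch; `vector_add_kernel` and `launch`
# are hypothetical names for illustration only.

def vector_add_kernel(block_idx, block_dim, thread_idx, a, b, out):
    """Work done by one 'thread': add a single pair of elements."""
    i = block_idx * block_dim + thread_idx  # global index of this thread
    if i < len(out):                        # guard threads past the end of the data
        out[i] = a[i] + b[i]

def launch(kernel, n, block_dim, *args):
    """Run the kernel once per (block, thread) pair; a GPU would run these in parallel."""
    grid_dim = (n + block_dim - 1) // block_dim  # enough blocks to cover n elements
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            kernel(block_idx, block_dim, thread_idx, *args)

a = [1, 2, 3, 4, 5]
b = [10, 20, 30, 40, 50]
out = [0] * len(a)
launch(vector_add_kernel, len(a), 2, a, b, out)
print(out)  # [11, 22, 33, 44, 55]
```

With five elements and a block size of two, three blocks are launched and the sixth "thread" is skipped by the bounds check, which is why that guard is a common pattern in kernels.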

Is RTX faster than CUDA : CUDA offers faster rendering times by utilizing the GPU's parallel processing capabilities. RTX technology provides real-time ray tracing and AI-enhanced rendering for more realistic and immersive results.

Why does AI use a GPU instead of a CPU : A GPU contains hundreds or thousands of cores, allowing for parallel computing and lightning-fast graphics output. GPUs also include more transistors than CPUs. With its faster clock speed and fewer cores, the CPU is better suited to everyday single-threaded tasks than to AI workloads.

Why use CPU if GPU is faster

Memory-Intensive Tasks

There are tasks where memory access time, not computation itself, is the bottleneck. CPUs usually have larger caches (fast on-chip memory) than GPUs and memory subsystems with lower access latency, which allow them to excel at manipulating frequently accessed data.

The net result is that GPUs perform technical calculations faster and with greater energy efficiency than CPUs. That means they deliver leading performance for AI training and inference as well as gains across a wide array of applications that use accelerated computing.

As resolution increases, the number of pixels on your screen goes up, and so does the strain on your GPU. Lowering your game's resolution can improve FPS by making your GPU's job easier, since it won't have to render as many pixels with each frame.
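A quick calculation shows how fast the pixel count grows with resolution. The two resolutions below are common examples chosen for illustration, not figures from the text above, and actual frame times do not necessarily scale in exact proportion to the pixel count.

```python
def pixels(width, height):
    """Number of pixels the GPU has to fill for one frame."""
    return width * height

full_hd = pixels(1920, 1080)  # 2,073,600 pixels
uhd_4k = pixels(3840, 2160)   # 8,294,400 pixels

print(full_hd, uhd_4k, uhd_4k / full_hd)  # 2073600 8294400 4.0
```

Doubling both the width and the height quadruples the number of pixels per frame, which is why dropping the resolution by one step can noticeably reduce the load on the GPU.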


Answer: Is the CUDA code slower than the CPU? More answers: What is the difference between CPU and CUDA
