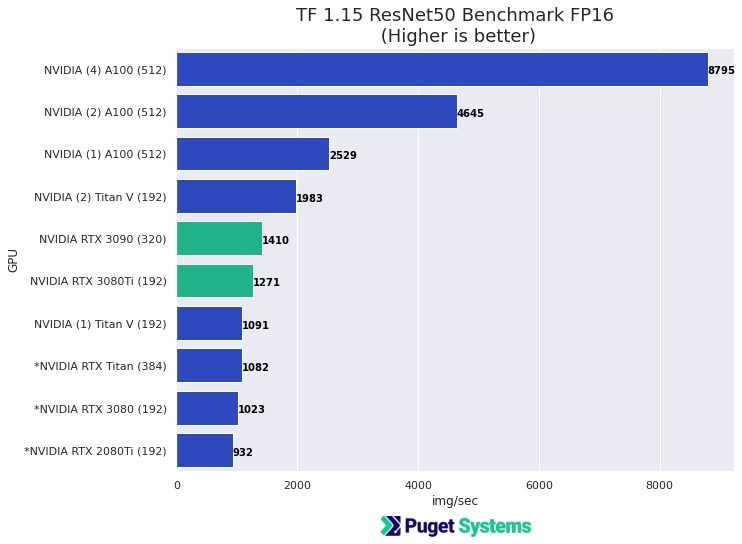

Which GPU is best for AI : 5 Best GPUs for AI and Deep Learning in 2024

Top 1. NVIDIA A100. The NVIDIA A100 is an excellent GPU for deep learning.

Top 2. NVIDIA RTX A6000. The NVIDIA RTX A6000 is a powerful GPU that is well-suited for deep learning applications.

Is GPU faster than CPU for machine learning?

The Edge of GPUs in Deep Learning Inference

Here's why GPUs often outperform CPUs in this arena. Parallel processing capabilities: a GPU contains hundreds or thousands of cores, which lets it run many computations at once; the same design is what makes fast graphics output possible. High-end GPUs also typically contain more transistors than CPUs. A CPU has fewer cores but a higher clock speed, so it is better suited to everyday single-threaded tasks than to AI workloads. On a server, the CPU handles everything the software needs to run correctly, while the GPU supports it by performing many calculations concurrently. A GPU completes simple, repetitive work much faster because it can break a task into smaller pieces and process them in parallel.
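The decomposition described above can be sketched in Python. This is a minimal, hypothetical illustration (the function names are invented for this example and do not come from any GPU library): the input is split into independent chunks, each worker does the same simple per-element work on its own chunk, and the partial results are combined at the end. CPython threads do not actually speed up pure-Python arithmetic because of the global interpreter lock, so treat this as a picture of the pattern a GPU applies across thousands of cores, not as a benchmark.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_chunks(data, num_chunks):
    """Split `data` into `num_chunks` contiguous slices of near-equal length."""
    size, remainder = divmod(len(data), num_chunks)
    chunks, start = [], 0
    for i in range(num_chunks):
        # The first `remainder` chunks each take one extra element.
        end = start + size + (1 if i < remainder else 0)
        chunks.append(data[start:end])
        start = end
    return chunks


def square_and_sum(chunk):
    """The simple, repetitive work every worker performs on its own chunk."""
    return sum(x * x for x in chunk)


def parallel_sum_of_squares(data, num_workers=4):
    """Split the task into independent pieces, run them concurrently, combine results."""
    chunks = split_into_chunks(data, num_workers)
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        partial_sums = list(pool.map(square_and_sum, chunks))
    return sum(partial_sums)  # combine step: add up the per-chunk results


if __name__ == "__main__":
    print(parallel_sum_of_squares(list(range(1_000))))  # 332833500
```

Because no chunk depends on another, the chunks could run in any order or all at once; only the final combine step has to wait for every worker.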

How much faster is a GPU for deep learning : 3-10 times faster

Overall speedup: Typically, GPUs can be 3-10 times faster than CPUs for deep learning tasks. Some sources mention even larger speedups, like 200-250 times, but these often refer to older CPUs or highly optimized GPU workloads.
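One common way to see why end-to-end speedups stay far below the largest headline figures is Amdahl's law, a general model of parallel speedup that the sources above do not themselves cite: if only a fraction p of the total runtime is accelerated by a factor s, the overall speedup is 1 / ((1 - p) + p / s). The sketch below applies it with hypothetical numbers; the function name and the inputs are illustrative assumptions, not measurements.

```python
def amdahl_speedup(parallel_fraction, acceleration):
    """Overall speedup when only `parallel_fraction` of the runtime
    is accelerated by a factor of `acceleration` (Amdahl's law)."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if acceleration <= 0:
        raise ValueError("acceleration must be positive")
    serial_fraction = 1.0 - parallel_fraction  # work the GPU does not speed up
    return 1.0 / (serial_fraction + parallel_fraction / acceleration)


if __name__ == "__main__":
    # Hypothetical inputs: 90% of runtime is GPU-friendly math that runs 200x faster.
    print(f"{amdahl_speedup(0.90, 200):.1f}x")  # 9.6x
```

With these assumed inputs, a math kernel that is 200 times faster yields only about a 9.6x overall gain, because the remaining 10% of the work that is not accelerated comes to dominate the runtime.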

Should I use a GPU for machine learning

Do I need a GPU for machine learning : Machine learning, a subset of AI, is the ability of computer systems to learn to make decisions and predictions from observations and data. A GPU is a specialized processing unit with enhanced mathematical computation capability, making it ideal for machine learning.

Why is a GPU better for AI : The efficiency of GPUs in performing parallel computations drastically reduces the time required for training and inference in AI models. This speed is crucial for applications requiring real-time processing and decision-making, such as autonomous vehicles and real-time language translation.

Because they have thousands of cores, GPUs are optimized for training deep learning models and, by some estimates, can process parallel tasks up to three times faster than a CPU.

In conclusion, different steps of the machine learning process call for CPUs and GPUs. GPUs are used to train large deep learning models, while CPUs are well suited to data preparation, feature extraction, and small-scale models. For inference and hyperparameter tuning, either CPUs or GPUs may be used.
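The division of labor described above is often arranged as a pipeline in which CPU code prepares the next batches while the accelerator works on the current one. Below is a minimal stdlib sketch under stated assumptions: `prepare_batches` is a hypothetical stand-in for CPU-side feature extraction (here it only scales 8-bit values to [0, 1]), and `train_step` is a hypothetical placeholder for the GPU training step that merely sums each batch. Deep learning frameworks ship their own data loaders for this job; the code does not reproduce any of them.

```python
import queue
import threading

_END = object()  # sentinel marking the end of the batch stream


def prepare_batches(raw_samples, batch_size, out_queue):
    """CPU-side stage (hypothetical): toy feature extraction plus batching."""
    batch = []
    for sample in raw_samples:
        batch.append(sample / 255.0)  # toy feature: scale 8-bit values to [0, 1]
        if len(batch) == batch_size:
            out_queue.put(batch)
            batch = []
    if batch:
        out_queue.put(batch)  # last, possibly smaller, batch
    out_queue.put(_END)


def train_step(batch):
    """Hypothetical stand-in for the GPU training step; it only sums the batch."""
    return sum(batch)


def run_pipeline(raw_samples, batch_size=4, prefetch=2):
    """Overlap CPU preprocessing with training by prefetching a few batches."""
    batches = queue.Queue(maxsize=prefetch)  # bounded, so the CPU stays only a little ahead
    producer = threading.Thread(
        target=prepare_batches, args=(raw_samples, batch_size, batches)
    )
    producer.start()
    results = []
    while True:
        batch = batches.get()
        if batch is _END:
            break
        results.append(train_step(batch))
    producer.join()
    return results


if __name__ == "__main__":
    print(run_pipeline([0, 255, 255, 0, 51], batch_size=2))  # [1.0, 1.0, 0.2]
```

The bounded queue keeps memory use predictable: the CPU stage can run at most `prefetch` batches ahead of training. The sketch omits error handling; if the producer thread raised an exception, the consumer loop would wait forever.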

Why are GPUs better for AI

GPU architecture offers unmatched computational speed and efficiency, making it the backbone of many AI advancements. This foundation allows AI to tackle complex algorithms and vast datasets, accelerating the pace of innovation and enabling more sophisticated, real-time applications.

As a general rule, GPUs are a safer bet for fast machine learning because, at its heart, data science model training consists of simple matrix math calculations, which can be sped up greatly when they are carried out in parallel. GPUs also include dedicated video RAM (VRAM), which leaves CPU memory free for other tasks. As for dataset size, multiple GPUs working in parallel scale more easily than CPUs, enabling you to process massive datasets faster.
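To make the matrix-math point concrete: in a product C = A x B, each row of C depends only on one row of A and on all of B, so every row can be computed independently. The hypothetical Python sketch below exploits that independence with a thread pool; a GPU applies the same idea at much finer granularity, for example by assigning output elements to separate threads. As with any pure-Python example, it shows the structure of the computation rather than real performance, and the function names are illustrative only.

```python
from concurrent.futures import ThreadPoolExecutor


def matmul_row(a_row, b):
    """Compute one row of A @ B from one row of A and the whole of B."""
    inner = len(b)
    n_cols = len(b[0])
    return [sum(a_row[k] * b[k][j] for k in range(inner)) for j in range(n_cols)]


def parallel_matmul(a, b, num_workers=4):
    """Multiply matrices stored as lists of rows, computing output rows concurrently."""
    if not a or not b or len(a[0]) != len(b):
        raise ValueError("matrices must be non-empty with matching inner dimensions")
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        # Each output row is an independent task: no row needs another row's result.
        return list(pool.map(lambda row: matmul_row(row, b), a))


if __name__ == "__main__":
    print(parallel_matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]]))  # [[19, 22], [43, 50]]
```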

While GTX GPUs can provide decent performance for certain data science tasks, RTX GPUs are better equipped to handle the demands of modern AI and deep learning workloads. Their enhanced compute capabilities (including Tensor Cores, which accelerate the matrix math at the heart of deep learning), larger VRAM options, and improved compatibility make them the preferred choice for many data scientists.

Why are Nvidia GPUs so good for AI : A high-performance GPU can have more than a thousand cores, so it can handle thousands of calculations at the same time. Once Nvidia realised that its accelerators were highly efficient at training AI models, it focused on optimising them for that market.

Will Nvidia dominate AI : Even if other big tech players continue their chip development efforts, Nvidia is likely to remain the top AI chip player for quite some time. Japanese investment bank Mizuho estimates that Nvidia could sell $280 billion worth of AI chips in 2027, projecting the overall market to reach $400 billion.

Does ML need a GPU

While CPUs can process many general tasks in a fast, sequential manner, GPUs use parallel computing to break down massively complex problems into multiple smaller simultaneous calculations. This makes them ideal for handling the massively distributed computational processes required for machine learning.

We recommend using an NVIDIA GPU, since NVIDIA GPUs are currently the best option available for a few reasons: they are currently the fastest, and PyTorch supports CUDA natively. Nvidia's chips have also kept pace with ever more complex AI models: in the decade to 2023, Nvidia increased the speed of its computations 1,000-fold.
