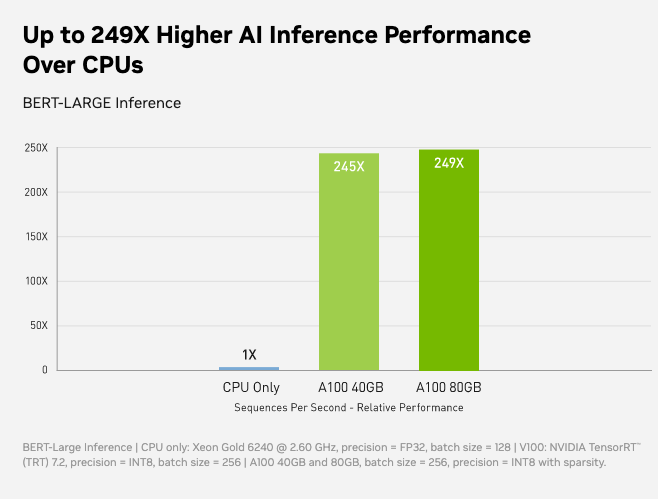

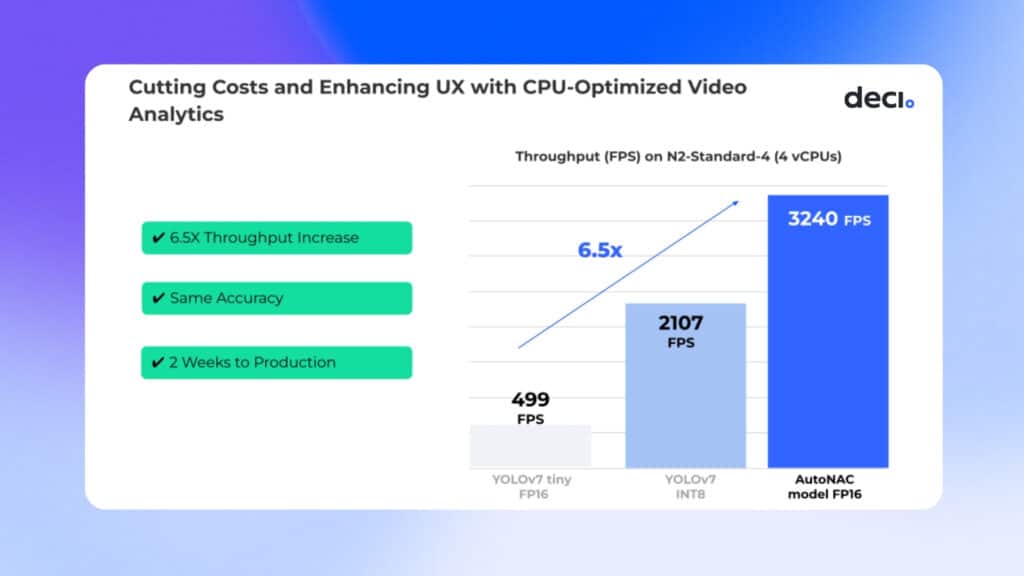

AI inference is the process of using a trained AI model to make predictions or decisions based on new data. While AI training is a compute-intensive task that benefits significantly from the parallel processing power of GPUs, inference tasks can often be run efficiently on CPUs, especially when optimised properly.

AI chips are more suitable for tasks such as large-scale matrix calculations and neural network calculations, while GPU chips are more suitable for tasks such as general numerical calculations and graphics rendering.

What CPU is best for machine learning & AI : The two recommended CPU platforms are Intel Xeon W and AMD Threadripper Pro. This is because both of these offer excellent reliability, can supply the needed PCI-Express lanes for multiple video cards (GPUs), and offer excellent memory performance in CPU space.

Do you need both CPU and GPU : Today, it is no longer a question of CPU vs. GPU. More than ever, you need both to meet your varied computing demands. The best results are achieved when the right tool is used for the job.

Is AI powered by GPU

AI Growth Powered By GPU Advances

The ideal hardware for the heavy work of AI systems is the graphics processing unit, or GPU. These specialized processors are built for parallel processing, which makes them very fast on workloads that can be split into many independent pieces.

Can AI run without GPU : Yes. AI generally requires a lot of matrix calculations, and GPUs are hardware specialized for them. A CPU can do these calculations too, but much more slowly.

Why are GPUs so useful for AI : It turns out GPUs can be repurposed to do more than generate graphical scenes. Many of the machine learning techniques behind artificial intelligence (AI), such as deep neural networks, rely heavily on various forms of “matrix multiplication”.

By batching instructions and moving large amounts of data at high throughput, GPUs can speed up workloads beyond the capabilities of a CPU. In this way, GPUs provide massive acceleration for specialized tasks such as machine learning, data analytics, and other artificial intelligence (AI) applications.
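To make the matrix multiplication point concrete, here is a minimal sketch in plain Python of one neural-network layer applied to a small batch. The function name `dense_layer` and all the numbers are made up for illustration; real frameworks run the same arithmetic through optimized CPU or GPU kernels rather than Python loops.

```python
def dense_layer(inputs, weights, bias):
    """Compute inputs x weights + bias for a batch of input rows."""
    n_out = len(bias)
    outputs = []
    for row in inputs:
        out_row = []
        for j in range(n_out):
            # Each output value is an independent dot product, so all of
            # them could be computed at the same time on parallel hardware.
            total = bias[j]
            for k, x in enumerate(row):
                total += x * weights[k][j]
            out_row.append(total)
        outputs.append(out_row)
    return outputs


# Illustrative values only: 2 samples with 2 features, mapped to 3 outputs.
batch = [[1.0, 2.0], [3.0, 4.0]]
weights = [[1.0, 0.0, -1.0], [0.5, 1.0, 2.0]]
bias = [0.0, 1.0, 0.0]

result = dense_layer(batch, weights, bias)
print(result)  # [[2.0, 3.0, 3.0], [5.0, 5.0, 5.0]]
```

None of the six output values depends on any other, which is why this kind of work spreads well across thousands of GPU cores.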

Which GPU is best for AI

5 Best GPUs for AI and Deep Learning in 2024

Top 1. NVIDIA A100. The NVIDIA A100 is an excellent GPU for deep learning.

Top 2. NVIDIA RTX A6000. The NVIDIA RTX A6000 is a powerful GPU that is well-suited for deep learning applications.

Top 3. NVIDIA RTX 4090.

Top 4. NVIDIA A40.

Top 5. NVIDIA V100.

Both the GPU and CPU are important, but the GPU has a more significant impact in most cases. Modern games are graphically demanding, and the GPU handles all the graphics rendering and processing needed to display them. In contrast, the CPU handles core processing tasks.

Graphics processing units (GPUs) are specialized processing cores that you can use to speed up computational processes. These cores were initially designed to process images and visual data. However, GPUs are now being adopted to accelerate other computational processes, such as deep learning.

So, even if other big tech players continue their chip development efforts, Nvidia is likely to remain the top AI chip player for quite some time. Japanese investment bank Mizuho estimates that Nvidia could sell $280 billion worth of AI chips in 2027 as it projects the overall market hitting $400 billion.

Do I need a GPU for AI : GPUs play an important role in AI today, providing top performance for AI training and inference. They also offer significant benefits across a diverse array of applications that demand accelerated computing. There are three key functions of GPUs to achieve these outcomes.

Does OpenAI use GPUs : Many prominent AI companies, including OpenAI, have relied on Nvidia's GPUs to provide the immense computational power that's required to train large language models (LLMs). (OpenAI CEO Sam Altman has teased the idea of starting his own GPU manufacturing operation, citing scarcity concerns.)

Why does AI run on GPU

AI and ML models often require processing and analyzing large datasets. With their high-bandwidth memory and parallel architecture, GPUs are adept at managing these data-intensive tasks, leading to quicker insights and model training.

Medium Datasets and Moderate Models: When dealing with medium-sized datasets and more complex models like random forests or shallow neural networks, it is recommended to have 16GB to 32GB of RAM. This additional memory allows for efficient data processing and model training.
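As a rough way to relate the RAM guidance for medium-sized datasets to a concrete case, the sketch below estimates the in-memory size of a purely numeric table. The helper name `dataset_size_gb`, the table dimensions, and the assumption of 8 bytes per value (64-bit floats) are illustrative, not taken from any specific tool.

```python
def dataset_size_gb(rows, columns, bytes_per_value=8):
    """Approximate raw size of a numeric table in gigabytes (2**30 bytes)."""
    return rows * columns * bytes_per_value / 1024**3


# Hypothetical table: 50 million rows, 20 numeric columns, 64-bit floats.
size = dataset_size_gb(rows=50_000_000, columns=20)
print(f"{size:.2f} GB")  # about 7.45 GB for the raw values alone
```

Training usually needs working memory on top of the raw data, so a table of this size plausibly fits the 16GB to 32GB range rather than a smaller machine.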

Is CPU faster than GPU : It depends on the workload. A single GPU core is slower than a CPU core for serial computing, but a GPU is much faster for parallel computing because it has thousands of these weaker cores working at once. GPU cores were originally specialized for graphics manipulation.
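The serial-versus-parallel trade-off can be illustrated with a deliberately simplified model in which run time is the amount of work divided by the combined speed of the cores in use. Every number below is a hypothetical placeholder, and the model ignores memory bandwidth, data transfer, and scheduling overhead.

```python
def run_time(total_ops, ops_per_second_per_core, cores_used):
    """Idealized run time in seconds, assuming perfect scaling across cores."""
    return total_ops / (ops_per_second_per_core * cores_used)


# Hypothetical hardware figures, chosen only to show the trend.
CPU_CORE_SPEED, CPU_CORES = 4e9, 16      # few fast cores
GPU_CORE_SPEED, GPU_CORES = 1e9, 4096    # many slower cores
WORK = 1e12                              # total operations to perform

# A serial task can only use one core at a time.
serial_cpu = run_time(WORK, CPU_CORE_SPEED, 1)
serial_gpu = run_time(WORK, GPU_CORE_SPEED, 1)

# A fully parallel task can use every core.
parallel_cpu = run_time(WORK, CPU_CORE_SPEED, CPU_CORES)
parallel_gpu = run_time(WORK, GPU_CORE_SPEED, GPU_CORES)

print(f"serial:   CPU {serial_cpu:.1f}s, GPU {serial_gpu:.1f}s")
print(f"parallel: CPU {parallel_cpu:.2f}s, GPU {parallel_gpu:.2f}s")
```

Under these assumptions the CPU wins the serial case and the GPU wins the parallel case, which matches the pattern described above.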
