How many CUDA cores does the RTX 4090 have : 16,384. NVIDIA's Ada Lovelace architecture gives the GeForce RTX 4090 16,384 CUDA cores, compared with 10,496 CUDA cores on the previous-generation GeForce RTX 3090.

Though a single CUDA core is less powerful than a CPU core, the strength of CUDA cores lies in their numbers. Modern GPUs have hundreds or even thousands of CUDA cores, which can carry out calculations on different pieces of data in parallel.

The CUDA (Compute Unified Device Architecture) platform is a software framework developed by NVIDIA to expand the capabilities of GPU acceleration. It gives developers access to the raw computing power of CUDA GPUs, so that suitable workloads can be processed faster than on traditional CPUs.
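To make the "strength in numbers" idea concrete, here is a minimal sketch in plain Python that imitates how a CUDA-style kernel hands one output element to each thread. No GPU is involved: the `launch` helper and the `vector_add_kernel` name are hypothetical, and the loops only stand in for threads that real hardware would run at the same time.

```python
# Pure-Python emulation of a CUDA-style launch. Nothing here runs on a GPU;
# the nested loops stand in for threads that would execute in parallel.

def vector_add_kernel(block_idx, thread_idx, block_dim, a, b, out):
    """Work done by ONE thread: compute a single output element."""
    i = block_idx * block_dim + thread_idx  # global index of this thread
    if i < len(out):  # guard: the last block may have spare threads
        out[i] = a[i] + b[i]

def launch(kernel, grid_dim, block_dim, *args):
    """Hypothetical launcher: visits every (block, thread) pair in turn."""
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            kernel(block_idx, thread_idx, block_dim, *args)

n = 10
a = list(range(n))
b = [10 * x for x in a]
out = [0] * n

block_dim = 4                                # threads per block
grid_dim = (n + block_dim - 1) // block_dim  # enough blocks to cover n elements

launch(vector_add_kernel, grid_dim, block_dim, a, b, out)
print(out)
```

Each call to the kernel touches only its own index, so no call depends on another. That independence is what lets thousands of simple cores work on the same problem at once.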

What is the highest CUDA core count : While even a large server CPU has a few hundred cores at most, a high-end GPU can have thousands of CUDA cores. Each new generation of NVIDIA GPUs comes with more powerful cores. The GeForce RTX 4090, the flagship of the Ada Lovelace generation, has 16,384 CUDA cores. That's an increase of about 56% over the 10,496 CUDA cores of the previous-generation RTX 3090.
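The generational increase can be checked directly from the two core counts quoted in this article. A small sketch (the dictionary and variable names are only illustrative):

```python
# CUDA core counts as quoted in this article.
cores = {"RTX 3090": 10_496, "RTX 4090": 16_384}

extra = cores["RTX 4090"] - cores["RTX 3090"]
increase = extra / cores["RTX 3090"]

print(f"Extra cores: {extra}")
print(f"Relative increase: {increase:.1%}")
```

Core count alone does not translate one-to-one into frame rates, since clock speed, memory bandwidth, and architecture changes also matter.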

Is the RTX 4090 overkill

The RTX 4090 is impressive, but most people will be better off getting an RTX 4080 Super or a high-end, 30-series graphics card if they can find one. The $999 RTX 4080 Super and $800 RTX 4070 Ti Super will both comfortably outperform a last-gen RTX 3090 Ti.

Is 32GB RAM enough for 4090 : You want at least 32GB of DDR5 for your RTX 4090. If you're running an AMD processor that supports EXPO profiles, make sure you pick up a kit with the correct profile timings.

Cuda offers faster rendering times by utilizing the GPU's parallel processing capabilities. RTX technology provides real-time ray tracing and AI-enhanced rendering for more realistic and immersive results. 10496 GEFORCE RTX 3090

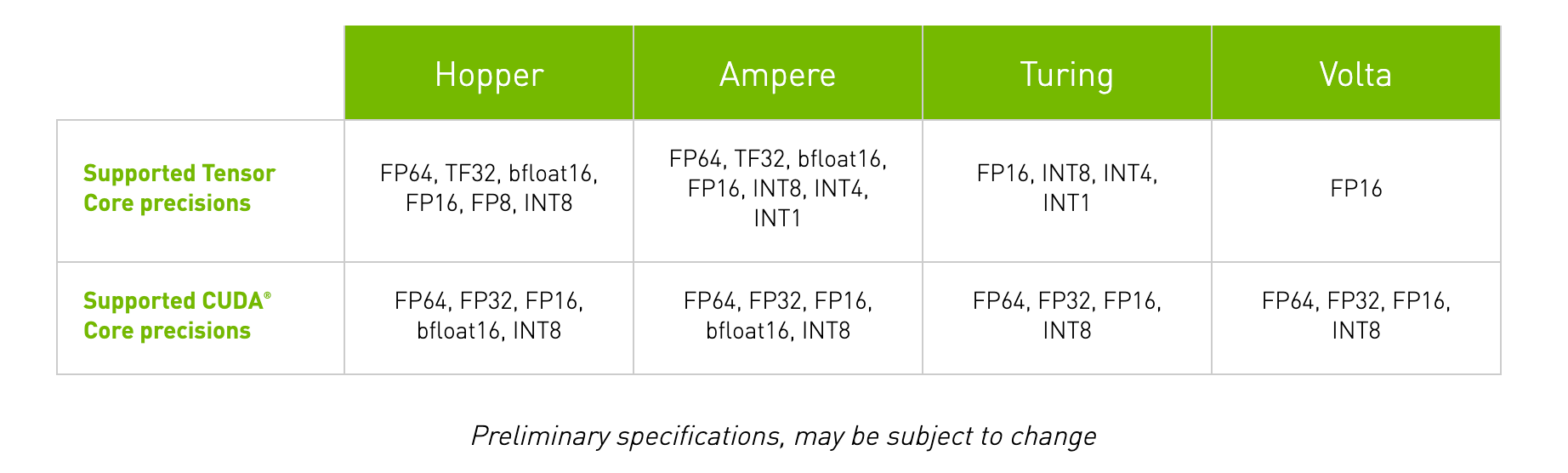

NVIDIA CUDA® Cores

10496

Width

5.4" (138 mm)

Slot

3-Slot

Maximum GPU Temperature (in C)

93

Graphics Card Power (W)

350

Do CUDA cores increase FPS

CUDA cores contribute to gaming performance by rendering graphics and processing game physics. Their parallel processing capabilities enable them to perform a large number of calculations simultaneously, leading to smoother and more realistic graphics and more immersive gaming experiences.

Whether for the host computer or the GPU device, all CUDA source code is now processed according to C++ syntax rules. This was not always the case. Earlier versions of CUDA were based on C syntax rules.

At 1080p (lower resolution) the CPU is the likely bottleneck. At 4K (higher resolution) the bottleneck shifts to the GPU. This largely depends on the game and its settings too: lighter games and demanding AAA titles will behave very differently.

Think about your future needs. If you plan to keep your PC relevant for several years without worrying about upgrading, investing in a high-end GPU like the RTX 4090 can future-proof your system (to an extent), helping it stay competitive with upcoming software and games.

Is 1000W enough for 4090 and 13900K : The 1000W unit will work great for your setup. If you think you'll be doing any overclocking, go ahead and grab the 1200W one. Honestly, you'll rarely even be drawing close to peak wattage (unless you're under heavy load 24/7).

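Is 450W enough for RTX 4090 : The 450W figure in the RTX 4090 system requirements describes the power connection to the card, not the power supply for the whole system.

RTX 4090 SYSTEM REQUIREMENTS: minimum 3 x 8-pin PCIe connectors (with the provided adapter), or a PCIe Gen 5 power cable rated for 450W or greater.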
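A rough power budget shows why a 450W supply would not cover a whole system while a 1000W unit leaves headroom. The sketch below is only an estimate under stated assumptions: 450W for the GPU comes from the requirements above, 253W is an assumed peak package power for a Core i9-13900K, and the 100W allowance for the rest of the system is a hypothetical round number.

```python
# Rough PSU headroom estimate. All wattages are assumptions for illustration.
GPU_W = 450    # RTX 4090 figure quoted in the requirements above
CPU_W = 253    # assumed peak package power for a Core i9-13900K
REST_W = 100   # hypothetical allowance for motherboard, RAM, drives, fans

def headroom(psu_w, loads):
    """Return (total load, spare watts, fraction of PSU capacity used)."""
    total = sum(loads)
    return total, psu_w - total, total / psu_w

for psu in (450, 1000, 1200):
    total, spare, used = headroom(psu, (GPU_W, CPU_W, REST_W))
    print(f"{psu}W PSU: load {total}W, spare {spare}W, {used:.0%} of capacity")
```

A negative spare value means the estimated peak load exceeds what the supply can deliver. Real draw varies with workload and transient spikes, so treat the output as a sanity check rather than a sizing rule.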

Why is CUDA so fast

CUDA is based on C and C++, and it lets us accelerate computing tasks by parallelizing them on the GPU. This means we can divide a program into smaller tasks that can be executed independently on the GPU. This can significantly improve the performance of the program.

The 4060 might not outperform the RTX 3060 Ti in all benchmarks [5]. Ultimately, the best choice depends on your budget, gaming needs, and whether you prioritize the latest features or finding a good deal. If you can wait and the rumors hold true, the RTX 4060 seems like a promising upgrade over the 3060.

24 GB

The RTX 4090 is powered by the NVIDIA Ada Lovelace architecture and comes with 24 GB of GDDR6X memory to deliver the ultimate experience for gamers and creators.

Can Python run CUDA : To run CUDA Python, you'll need the CUDA Toolkit installed on a system with CUDA-capable GPUs. Use this guide to install CUDA. If you don't have a CUDA-capable GPU, you can access one of the thousands of GPUs available from cloud service providers, including Amazon AWS, Microsoft Azure, and IBM SoftLayer.
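Before installing anything, it can help to see which pieces are already present. The following sketch uses only the Python standard library; the function name `cuda_python_status` is hypothetical, and the checks are heuristics: `nvidia-smi` on the PATH suggests an NVIDIA driver is installed, `nvcc` suggests the CUDA Toolkit is installed, and Numba is used here only as one example of a Python package with CUDA support.

```python
import importlib.util
import shutil

def cuda_python_status():
    """Heuristic check of the local setup; does not touch the GPU itself."""
    return {
        # Driver utility that ships with NVIDIA drivers.
        "nvidia_smi_on_path": shutil.which("nvidia-smi") is not None,
        # CUDA compiler that ships with the CUDA Toolkit.
        "nvcc_on_path": shutil.which("nvcc") is not None,
        # One example of a Python package that can target CUDA GPUs.
        "numba_installed": importlib.util.find_spec("numba") is not None,
    }

status = cuda_python_status()
for name, found in status.items():
    print(f"{name}: {'yes' if found else 'no'}")
```

If every entry reports "no", a cloud GPU instance from one of the providers mentioned above is the quicker way to experiment.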
