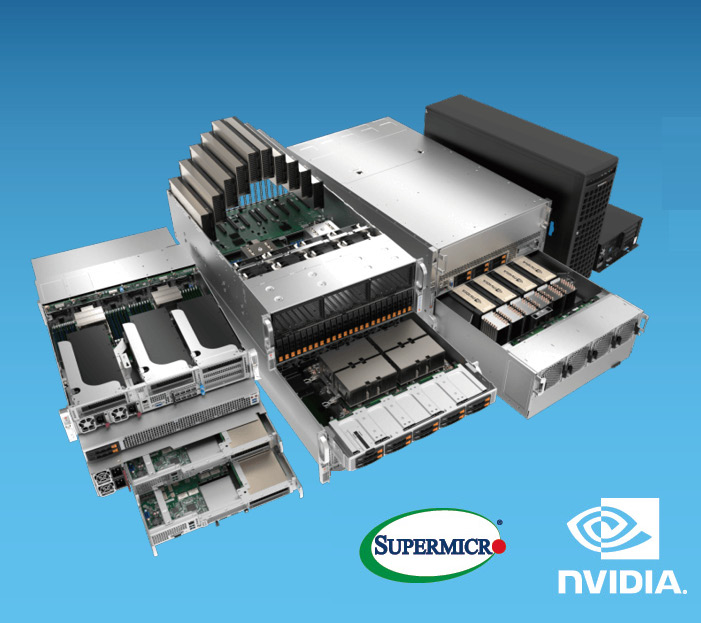

With the right CPU, graphics card, and memory, gaming is possible on a home server. Servers are most often used for business purposes, but they can also be put to personal use. With suitable hardware, a single server can hold multiple graphics processing units (GPUs), up to eight in some configurations.

A dedicated GPU server is a server with one or more GPUs that provides additional power and speed for computationally intensive tasks such as video rendering, data analytics, and machine learning.

NVIDIA is said to have already supplied OpenAI with 20,000 of its newest GPUs. The semiconductor company is believed to be capable of producing a million AI GPUs per year. At that rate, supplying OpenAI with 10 million GPUs would take at least a decade.
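The "at least a decade" estimate is plain division, and a minimal sketch makes the assumption behind it visible. The function name `years_to_supply` is made up for illustration. It assumes a constant annual output and that the entire output goes to one customer, which is why the result is a lower bound.

```python
def years_to_supply(gpus_needed: int, gpus_per_year: int) -> int:
    """Whole years needed to produce gpus_needed at a constant annual output.

    Assumption: every GPU produced goes to a single customer, so the
    result is a lower bound rather than a forecast.
    """
    if gpus_per_year <= 0:
        raise ValueError("gpus_per_year must be positive")
    # Integer ceiling division: a partial year still counts as a year.
    return -(-gpus_needed // gpus_per_year)


# Figures quoted in the text: 10 million GPUs at 1 million per year.
print(years_to_supply(10_000_000, 1_000_000))
```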

How many GPUs can a computer have : Most motherboards will allow up to four GPUs. However, most GPUs have a width of two PCIe slots, so if you plan to use multiple GPUs, you will need a motherboard with enough space between PCIe slots to accommodate these GPUs.

Can a PC have 3 GPUs?

The sweet spot for most gaming PCs is 1-3 high-end GPUs, paired with a suitable CPU, motherboard, PSU, and cooling.

How many GPUs can Windows handle : 8 GPUs

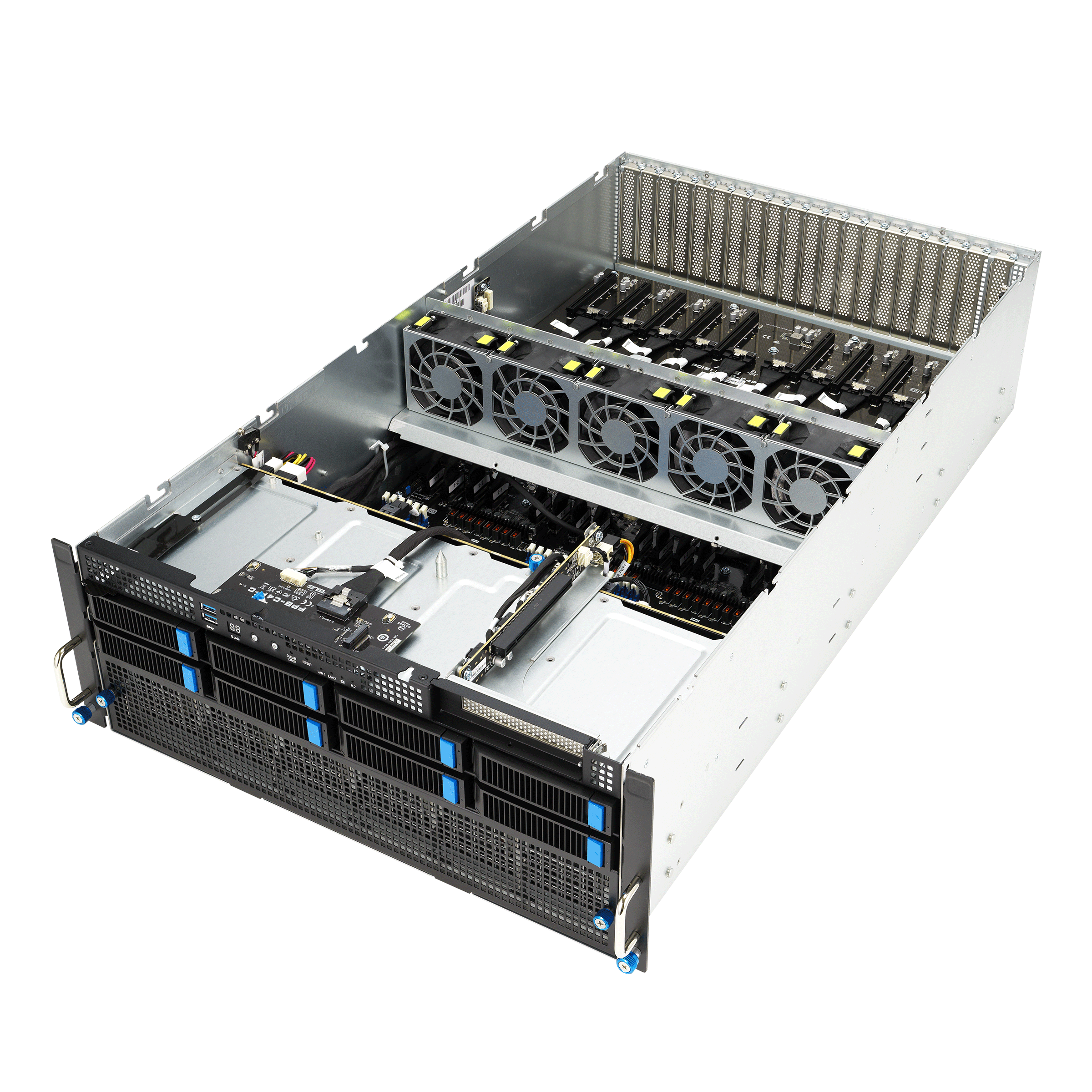

The limit comes from the PCI address space allocation of 2 GB. Each card is allocated 256 MB of PCI address space, so a PC can currently support at most 8 GPUs, assuming it has sufficient slots, power, and driver support.
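The 8-GPU ceiling follows from dividing the address window by the per-card allocation. The sketch below reproduces that arithmetic with the two figures quoted above. The function name is illustrative, and the figures are treated as binary units (GiB and MiB), which is an assumption about what the text means.

```python
MIB = 1024 ** 2
GIB = 1024 ** 3


def max_gpus_by_address_space(window_bytes: int, per_card_bytes: int) -> int:
    """Number of cards whose allocations fit entirely inside the window."""
    if per_card_bytes <= 0:
        raise ValueError("per_card_bytes must be positive")
    return window_bytes // per_card_bytes


# 2 GB window, 256 MB per card, as stated in the text.
print(max_gpus_by_address_space(2 * GIB, 256 * MIB))
```

This counts address space only. Slot count, power delivery, and driver support can each impose a lower limit.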

For server models with one processor, you can install one GPU in PCIe slot 1. For server models with two processors, you can install up to two GPUs in PCIe slot 1 and PCIe slot 5, or up to five GPUs in PCIe slots 1, 5, 6, 2, and 3. For more information, see GPU specifications.
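The slot rules above can be captured as a small lookup table. This is a sketch only: the table transcribes the slot numbers from the text, while the helper names and the idea that GPUs are populated in the listed order are assumptions, not something the vendor documentation quoted here confirms.

```python
# Slot options transcribed from the text, keyed by processor count.
GPU_SLOT_OPTIONS = {
    1: [(1,)],
    2: [(1, 5), (1, 5, 6, 2, 3)],
}


def max_gpus(processors: int) -> int:
    """Largest GPU count any listed slot option allows."""
    options = GPU_SLOT_OPTIONS.get(processors)
    if options is None:
        raise ValueError(f"no slot rule for {processors} processor(s)")
    return max(len(slots) for slots in options)


def slots_for(processors: int, gpu_count: int) -> tuple[int, ...]:
    """Slots to populate, ASSUMING cards are installed in the listed order."""
    if gpu_count < 1 or gpu_count > max_gpus(processors):
        raise ValueError(
            f"{gpu_count} GPU(s) not supported with {processors} processor(s)"
        )
    for slots in GPU_SLOT_OPTIONS[processors]:
        if gpu_count <= len(slots):
            return slots[:gpu_count]
    raise AssertionError("unreachable: the count was validated above")


print(max_gpus(2))       # largest supported count with two processors
print(slots_for(2, 3))   # slots used for three GPUs under the assumed order
```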

Every server or server instance in the cloud requires a CPU to run. However, some servers also include GPUs as additional coprocessors.

How many GPUs does GPT-4 use?

For inference, GPT-4 reportedly runs on clusters of 128 A100 GPUs and uses multiple forms of parallelism to distribute processing.

30,000 NVIDIA GPUs

ChatGPT will require as many as 30,000 NVIDIA GPUs to operate, according to a report by research firm TrendForce. Those calculations are based on the processing power of NVIDIA's A100, which costs between $10,000 and $15,000.

AMD's CrossFire technology, originally developed by ATI Technologies, allows up to 4 GPUs to be connected in a single computer to deliver improved performance.
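Taken together, the TrendForce GPU count and the A100 price range quoted above imply a rough hardware bill. The sketch below multiplies them out. The function name is illustrative, and the result covers GPU purchase price only (no servers, networking, power, or volume discounts), which is an assumption of this example rather than a figure from the report.

```python
def gpu_cost_range(gpu_count: int, unit_low: int, unit_high: int) -> tuple[int, int]:
    """Lower and upper bound on total GPU purchase cost, in dollars."""
    if unit_low > unit_high:
        raise ValueError("unit_low must not exceed unit_high")
    return gpu_count * unit_low, gpu_count * unit_high


# 30,000 A100s at $10,000 to $15,000 each, as quoted in the text.
low, high = gpu_cost_range(30_000, 10_000, 15_000)
print(f"${low:,} to ${high:,}")
```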

In games that support multi-GPU rendering, FPS typically sees significant gains going from 1 to 2 GPUs, with diminishing returns beyond 3 cards. In AAA games at 1440p, FPS can improve by 50-80% with a second GPU over a single RTX 3080. At 4K resolution, dual RTX 3090s can provide nearly 2x the FPS of a single RTX 3090.

Can you use 3 GPUs : The sweet spot for most gaming PCs is 1-3 high-end GPUs, paired with a suitable CPU, motherboard, PSU, and cooling. Carefully configure components, software, and settings to maximize performance gains and prevent bottlenecks.

Do you need a good GPU for servers : To keep up with the coolest computer tricks and get the best results, a server needs more than just a fancy brain (CPU), good memory, the newest super-fast storage (SSD/NVMe drives), and top-notch network cards. It also needs a special kind of power: a GPU.

How much GPU do I need?

How to buy a GPU: which specs matter and which don't. Graphics card memory amount: critical. For 1080p gaming, an 8 GB card may still suffice, but at least 12 GB is preferable. 4K gaming cards should generally have 16 GB to be safe.

1,024 Nvidia V100

Since GPT-3 is a very large model with 175 billion parameters, training it requires very large resources. According to this article, training GPT-3 takes around 1,024 Nvidia V100 GPUs, about 34 days, and around $4.6M.
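The three GPT-3 figures above can be combined into total GPU-hours and an implied cost per GPU-hour. This is a back-of-the-envelope sketch: it assumes all 1,024 GPUs run continuously for the full 34 days, and the per-hour rate is derived here, not quoted from the article.

```python
def training_gpu_hours(gpu_count: int, days: int) -> int:
    """Total GPU-hours, assuming every GPU runs 24 hours a day."""
    return gpu_count * days * 24


def implied_cost_per_gpu_hour(total_cost: float, gpu_hours: int) -> float:
    """Dollars per GPU-hour implied by a total cost and a GPU-hour count."""
    if gpu_hours <= 0:
        raise ValueError("gpu_hours must be positive")
    return total_cost / gpu_hours


# Figures quoted in the text: 1,024 V100s, 34 days, about $4.6M.
hours = training_gpu_hours(1_024, 34)
rate = implied_cost_per_gpu_hour(4_600_000, hours)
print(f"{hours:,} GPU-hours, about ${rate:.2f} per GPU-hour")
```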

How many GPUs do I need for AI : Also keep in mind that a single GPU like the NVIDIA RTX 3090 or A5000 can provide significant performance and may be enough for your application. Having 2, 3, or even 4 GPUs in a workstation can provide a surprising amount of compute capability and may be sufficient for even many large problems.
