The RTX 4090 is usually faster, as long as your projects don't need more VRAM than the card has. In that case, the RTX 6000 will be a better choice.

Even though the NVIDIA RTX A6000 has a marginally superior GPU configuration to the GeForce RTX 3090, it will not outperform the 3090 in gaming. The 3090 uses GDDR6X memory, while the RTX A6000 uses slower memory with a bandwidth of 768 GB/s, which is 18% lower than the consumer …
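
The 18% figure can be checked with a short calculation. The sketch below assumes the truncated comparison refers to the GeForce RTX 3090. The bus widths and per-pin data rates are assumptions taken from public spec sheets, not from the text above, and the helper name `bandwidth_gb_s` is purely illustrative.

```python
def bandwidth_gb_s(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s: bus width (bits) x per-pin rate (Gbit/s) / 8."""
    return bus_width_bits * data_rate_gbps / 8


# Assumed specs: both cards use a 384-bit bus; GDDR6 at 16 Gbit/s per pin
# on the RTX A6000, GDDR6X at 19.5 Gbit/s per pin on the RTX 3090.
a6000 = bandwidth_gb_s(384, 16.0)
rtx3090 = bandwidth_gb_s(384, 19.5)

# Relative shortfall of the A6000 versus the 3090, in percent.
deficit_pct = (1 - a6000 / rtx3090) * 100

print(f"RTX A6000: {a6000:.0f} GB/s")
print(f"RTX 3090:  {rtx3090:.0f} GB/s")
print(f"A6000 bandwidth is about {deficit_pct:.0f}% lower")
```

Under these assumptions the A6000 lands at 768 GB/s, matching the number quoted above.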

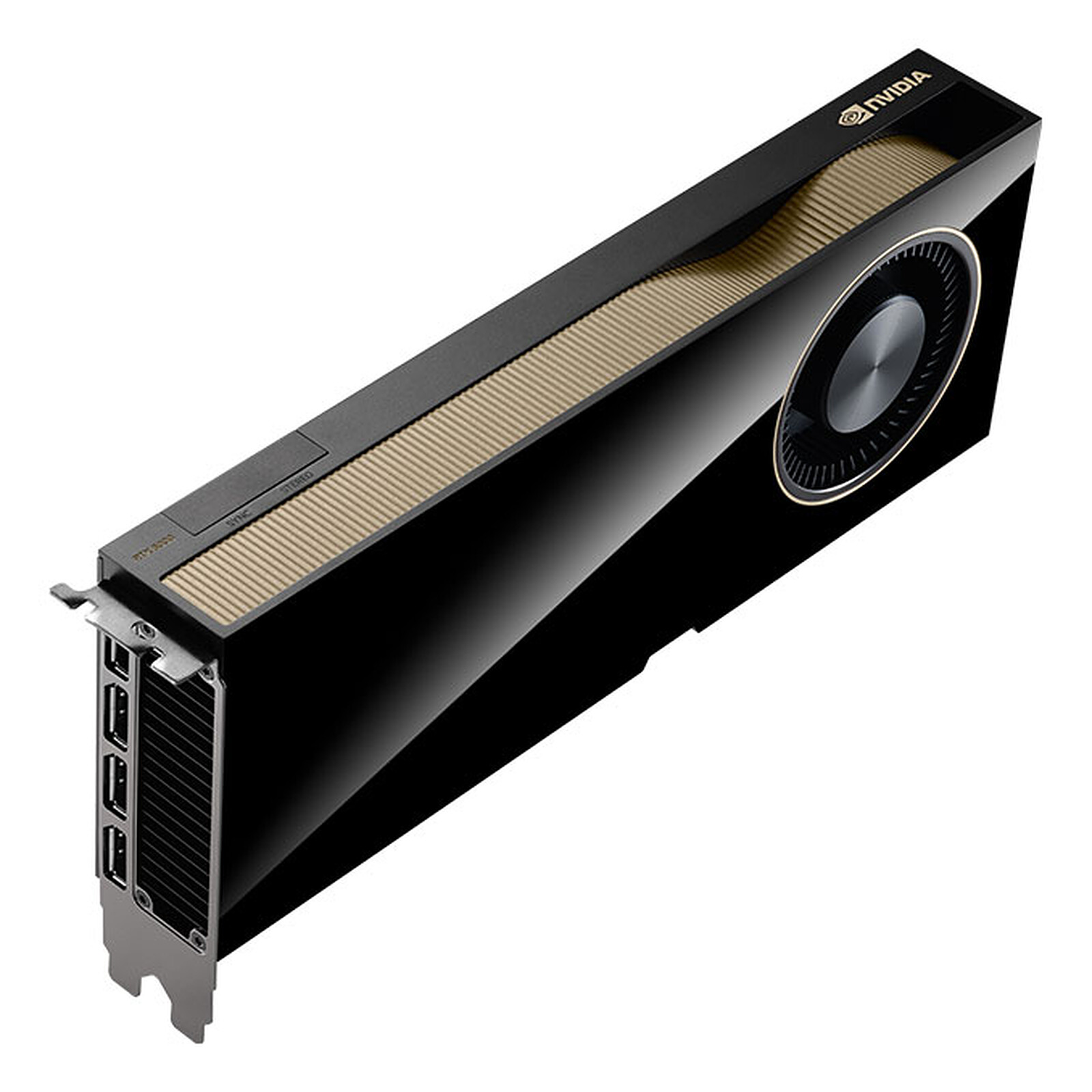

How many CUDA cores does A6000 have : NVIDIA RTX A6000

| Specification | NVIDIA RTX A6000 |
| --- | --- |
| GPU Architecture | Ampere |
| CUDA Parallel Processing cores | 10,752 |
| NVIDIA Tensor Cores | 336 |
| NVIDIA RT Cores | 84 |
| GPU Memory | 48 GB GDDR6 with ECC |

How powerful is 1 CUDA core

CUDA Cores and High-Performance Computing

Each CUDA core is capable of executing a single instruction at a time, but when combined in the thousands, as they are in modern GPUs, they can process large data sets in parallel, significantly reducing computation time.
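
One rough way to picture this is a deliberately idealized model, not a description of real GPU scheduling: if every core handles one element per pass, the number of passes is the element count divided by the core count, rounded up. The function name `ideal_passes` and the one-element-per-core assumption are illustrative only. Real GPUs schedule threads in groups and are often limited by memory bandwidth rather than core count.

```python
import math


def ideal_passes(num_elements: int, num_cores: int) -> int:
    """Passes needed if each core processes exactly one element per pass (idealized)."""
    return math.ceil(num_elements / num_cores)


n = 1_000_000  # hypothetical data set size

serial = ideal_passes(n, 1)         # a single core working alone
parallel = ideal_passes(n, 10_752)  # the RTX A6000's CUDA core count

print(f"1 core:       {serial} passes")
print(f"10,752 cores: {parallel} passes")
```

In this toy model a million elements shrink from 1,000,000 sequential passes to 94.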

Is the RTX A6000 good for AI : The A6000 delivers decent performance for AI workloads like smaller model training and inference tasks. While not as fast as the A100, it can handle basic deep-learning workflows efficiently.

14,592 CUDA cores

The flagship H100 GPU (14,592 CUDA cores, 80GB of HBM3 capacity, 5,120-bit memory bus), which NVIDIA CEO Jensen Huang calls the first chip designed for generative AI, is priced at a massive $30,000 on average.

The RTX 4090 is undoubtedly the king of NVIDIA's modern-day graphics cards. Packed with over 16,000 CUDA cores and 24GB of GDDR6X RAM, this beast of a GPU is truly monstrous in any game you throw at it.

Is RTX 5000 a thing

RTX 5000 accelerates compute-intensive AI workloads, delivering up to 2X higher inference performance over the previous generation, for rapid generation of high-quality images, videos, and 3D assets.

The Ultimate Performance for Professionals

Built on the NVIDIA Ada Lovelace GPU architecture, the RTX 6000 combines third-generation RT Cores, fourth-generation Tensor Cores, and next-gen CUDA® cores with 48GB of graphics memory for unprecedented rendering, AI, graphics, and compute performance.

16,384 CUDA cores

NVIDIA's Ada Lovelace architecture gives the RTX 4090 16,384 CUDA cores, compared to 10,496 CUDA cores on the previous-generation RTX 3090.

CUDA (Compute Unified Device Architecture) is a software platform developed by NVIDIA to expand the capabilities of GPU acceleration. It allows developers to access the raw computing power of CUDA GPUs to process data faster than with traditional CPUs.
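
To put the core counts quoted in this article side by side, a small sketch can express each card relative to the RTX 3090. The dictionary and variable names are illustrative. Core count alone does not determine performance, since architectures, clocks, and memory differ between these cards.

```python
# CUDA core counts as quoted in this article.
cuda_cores = {
    "RTX 3090": 10_496,
    "RTX A6000": 10_752,
    "H100": 14_592,
    "RTX 4090": 16_384,
    "RTX 6000 Ada": 18_176,
}

baseline = cuda_cores["RTX 3090"]

# Ratio of each card's core count to the RTX 3090's.
ratio = {name: cores / baseline for name, cores in cuda_cores.items()}

# Print from the largest core count to the smallest.
for name, cores in sorted(cuda_cores.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name:<13} {cores:>6,} cores  {ratio[name]:.2f}x the RTX 3090")
```

By this measure the RTX 4090 has roughly 1.56 times the CUDA cores of the RTX 3090.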

Is the 3090 better than A6000 : The RTX A6000 has twice as much VRAM: 48 GB of ECC GDDR6 versus 24 GB of GDDR6X on the 3090. The VRAM inside the 3090 is faster, but the A6000 has more of it. A possible reason why the A6000 does not incorporate GDDR6X is its implementation of ECC.
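
Whether the extra VRAM matters depends on the workload. As a minimal sketch, the check below estimates the memory needed for model weights alone and compares it to each card's capacity. The model sizes (7 and 20 billion parameters), the 2-bytes-per-parameter FP16 assumption, and the function names are hypothetical and chosen for illustration. Activations, optimizer state, and framework overhead are ignored, so real requirements are higher.

```python
GIB = 2**30  # bytes per GiB


def weights_gib(num_params: float, bytes_per_param: int) -> float:
    """Memory taken by the model weights alone, in GiB."""
    return num_params * bytes_per_param / GIB


def fits_in_vram(num_params: float, bytes_per_param: int, vram_gib: float) -> bool:
    """True if the weights alone fit in the given VRAM (ignores all other memory use)."""
    return weights_gib(num_params, bytes_per_param) <= vram_gib


cards = {"RTX 3090 (24 GB)": 24, "RTX A6000 (48 GB)": 48}

# Hypothetical model sizes, stored in FP16 (2 bytes per parameter).
for params in (7e9, 20e9):
    need = weights_gib(params, 2)
    for card, vram in cards.items():
        verdict = "fits" if fits_in_vram(params, 2, vram) else "does not fit"
        print(f"{params / 1e9:.0f}B params need {need:.1f} GiB: {verdict} on {card}")
```

Under these assumptions the smaller model fits on both cards, while the larger one only fits on the A6000.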

Which GPU is best for AI : 5 Best GPUs for AI and Deep Learning in 2024

Top 1. NVIDIA A100. The NVIDIA A100 is an excellent GPU for deep learning.

Top 2. NVIDIA RTX A6000. The NVIDIA RTX A6000 is a powerful GPU that is well-suited for deep learning applications.

Top 3. NVIDIA RTX 4090.

Top 4. NVIDIA A40.

Top 5. NVIDIA V100.

Is H100 faster than A100

What are the Performance Differences Between A100 and H100

According to benchmarks by NVIDIA and independent parties, the H100 offers double the computation speed of the A100. Among the major implications of this performance boost: engineering teams can iterate faster if workloads take half the time to complete.

The RTX 4090 is impressive, but most people will be better off getting an RTX 4080 Super or a high-end 30-series graphics card if they can find one. The $999 RTX 4080 Super and $800 RTX 4070 Ti Super will both comfortably outperform a last-gen RTX 3090 Ti.

The GeForce RTX 2050 Mobile is a mobile graphics chip by NVIDIA, launched on December 17th, 2021. Built on the 8 nm process and based on the GA107 graphics processor, the chip supports DirectX 12 Ultimate.

Is RTX 4000 Real : The NVIDIA Quadro RTX 4000 delivers GPU accelerated ray tracing, deep learning, and advanced shading in an accessible single slot form factor. It gives designers the power to accelerate their creative efforts with faster time to insight and faster time to solution.

Answer: How many CUDA cores does RTX 6000 have? More answers: How many CUDA cores are in RTX 6000

18,176

NVIDIA RTX 6000 Ada Generation
