CUDA® is a parallel computing platform and programming model developed by NVIDIA for general computing on graphics processing units (GPUs). With CUDA, developers can dramatically speed up computing applications by harnessing the power of GPUs.

To use CUDA on your system, you need the following installed: a CUDA-capable GPU, and a supported version of Linux with a gcc compiler and toolchain.

Memory allocated on the host is pageable by default, and the host can use the data there directly. To transfer this data to the device, the CUDA runtime first copies it into a temporary page-locked (pinned) buffer and then transfers it from that buffer to device memory. A transfer from pageable memory therefore involves two copies.

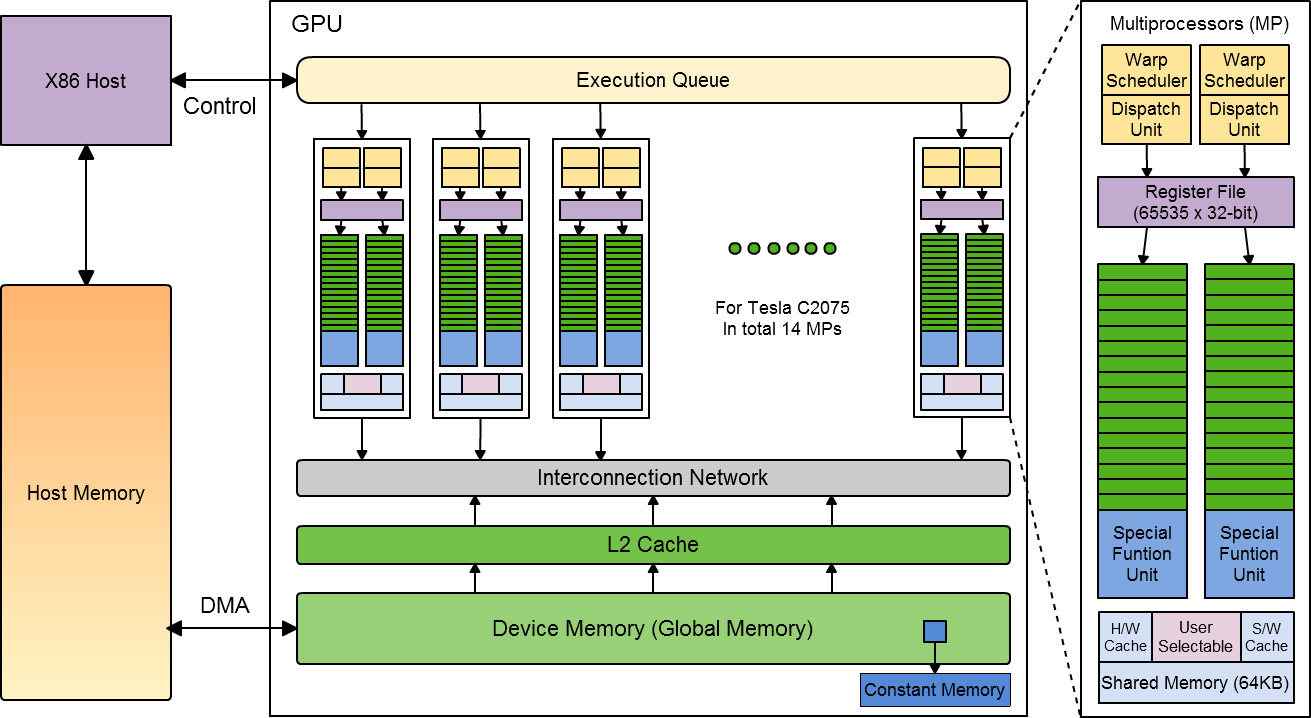
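You can observe the extra staging copy directly. The sketch below is a minimal example. It assumes a single CUDA device and an arbitrary 64 MiB buffer. It times one host-to-device `cudaMemcpy` from ordinary `malloc` memory and one from pinned memory allocated with `cudaMallocHost`. The helper names `check` and `time_h2d` are illustrative, and the measured times depend entirely on the system.

```cuda
#include <cstdio>
#include <cstdlib>
#include <cstring>
#include <cuda_runtime.h>

// Aborts with a readable message if a CUDA runtime call fails.
static void check(cudaError_t err, const char* what) {
    if (err != cudaSuccess) {
        std::fprintf(stderr, "%s: %s\n", what, cudaGetErrorString(err));
        std::exit(EXIT_FAILURE);
    }
}

// Times a single host-to-device cudaMemcpy of `bytes` bytes using CUDA events.
static float time_h2d(void* d_dst, const void* h_src, size_t bytes) {
    cudaEvent_t start, stop;
    check(cudaEventCreate(&start), "cudaEventCreate");
    check(cudaEventCreate(&stop), "cudaEventCreate");
    check(cudaEventRecord(start), "cudaEventRecord");
    check(cudaMemcpy(d_dst, h_src, bytes, cudaMemcpyHostToDevice), "cudaMemcpy");
    check(cudaEventRecord(stop), "cudaEventRecord");
    check(cudaEventSynchronize(stop), "cudaEventSynchronize");
    float ms = 0.0f;
    check(cudaEventElapsedTime(&ms, start, stop), "cudaEventElapsedTime");
    check(cudaEventDestroy(start), "cudaEventDestroy");
    check(cudaEventDestroy(stop), "cudaEventDestroy");
    return ms;
}

int main() {
    const size_t bytes = size_t(64) << 20;  // 64 MiB, an arbitrary test size

    void* d_buf = nullptr;
    check(cudaMalloc(&d_buf, bytes), "cudaMalloc");

    // Pageable host memory: the runtime stages it through a pinned buffer.
    void* pageable = std::malloc(bytes);
    if (pageable == nullptr) return EXIT_FAILURE;
    std::memset(pageable, 1, bytes);

    // Pinned (page-locked) host memory: the GPU can read it directly via DMA.
    void* pinned = nullptr;
    check(cudaMallocHost(&pinned, bytes), "cudaMallocHost");
    std::memset(pinned, 1, bytes);

    std::printf("pageable -> device: %.2f ms\n", time_h2d(d_buf, pageable, bytes));
    std::printf("pinned   -> device: %.2f ms\n", time_h2d(d_buf, pinned, bytes));

    check(cudaFreeHost(pinned), "cudaFreeHost");
    std::free(pageable);
    check(cudaFree(d_buf), "cudaFree");
    return 0;
}
```

Pinned memory avoids the staging copy. However, the operating system can no longer page it out, so allocating large amounts can hurt overall system performance. It is usually reserved for buffers that are transferred often.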

Is CUDA constant memory cached? Yes. The physical backing for the constant logical space is GPU DRAM. The constant memory system consists of this physical backing plus a per-SM constant cache.
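The following sketch shows how constant memory is typically used. It stores polynomial coefficients in a `__constant__` array, uploads them with `cudaMemcpyToSymbol`, and evaluates the polynomial in a kernel. The polynomial, the array size, and the names `c_coeffs`, `poly_eval`, and `poly_ref` are hypothetical choices for the example.

```cuda
#include <cstdio>
#include <cstdlib>
#include <cuda_runtime.h>

static void check(cudaError_t err, const char* what) {
    if (err != cudaSuccess) {
        std::fprintf(stderr, "%s: %s\n", what, cudaGetErrorString(err));
        std::exit(EXIT_FAILURE);
    }
}

// Coefficients of p(x) = c0 + c1*x + c2*x^2 + c3*x^3, stored in constant memory.
// All threads of a warp read the same coefficient at the same time, which is
// the broadcast pattern the per-SM constant cache serves best.
__constant__ float c_coeffs[4];

// Host reference implementation (Horner's rule) used to check the GPU result.
static float poly_ref(const float c[4], float x) {
    return ((c[3] * x + c[2]) * x + c[1]) * x + c[0];
}

// One thread per input value; coefficients come from constant memory.
__global__ void poly_eval(const float* x, float* y, int n) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) {
        float v = x[i];
        y[i] = ((c_coeffs[3] * v + c_coeffs[2]) * v + c_coeffs[1]) * v + c_coeffs[0];
    }
}

int main() {
    const int n = 8;
    const float h_coeffs[4] = {1.0f, 2.0f, 0.0f, 1.0f};  // p(x) = 1 + 2x + x^3
    float h_x[n], h_y[n];
    for (int i = 0; i < n; ++i) h_x[i] = static_cast<float>(i);

    // Upload the coefficients to the __constant__ symbol (backed by device DRAM).
    check(cudaMemcpyToSymbol(c_coeffs, h_coeffs, sizeof(h_coeffs)), "cudaMemcpyToSymbol");

    float *d_x = nullptr, *d_y = nullptr;
    check(cudaMalloc(&d_x, n * sizeof(float)), "cudaMalloc");
    check(cudaMalloc(&d_y, n * sizeof(float)), "cudaMalloc");
    check(cudaMemcpy(d_x, h_x, n * sizeof(float), cudaMemcpyHostToDevice), "cudaMemcpy");

    poly_eval<<<1, 32>>>(d_x, d_y, n);
    check(cudaGetLastError(), "poly_eval launch");
    check(cudaMemcpy(h_y, d_y, n * sizeof(float), cudaMemcpyDeviceToHost), "cudaMemcpy");

    for (int i = 0; i < n; ++i)
        std::printf("p(%g) = %g (host reference %g)\n", h_x[i], h_y[i], poly_ref(h_coeffs, h_x[i]));

    check(cudaFree(d_x), "cudaFree");
    check(cudaFree(d_y), "cudaFree");
    return 0;
}
```

Constant memory suits values that every thread reads at the same address. When threads in a warp read different constant addresses, the accesses are serialized. For that reason, per-thread lookup tables usually belong in global or shared memory instead.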

Is CUDA faster than a CPU?

CUDA (Compute Unified Device Architecture) is a software platform developed by NVIDIA to expand the capabilities of GPU acceleration. It gives developers access to the raw computing power of CUDA GPUs. When a workload can be split into many independent operations, these GPUs can process data much faster than traditional CPUs. For sequential or branch-heavy code, a CPU can still be faster.
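Whether a GPU actually beats a CPU depends on the workload and on data transfers. A rough way to check on your own machine is to time the same computation both ways. The sketch below uses SAXPY (`y = a*x + y`) as a stand-in workload with an arbitrary array size. It times a sequential CPU loop with `std::chrono` and the equivalent kernel with CUDA events. It deliberately excludes host-device transfers, which can dominate for simple operations like this one. The function names are illustrative.

```cuda
#include <chrono>
#include <cstdio>
#include <cstdlib>
#include <vector>
#include <cuda_runtime.h>

static void check(cudaError_t err, const char* what) {
    if (err != cudaSuccess) {
        std::fprintf(stderr, "%s: %s\n", what, cudaGetErrorString(err));
        std::exit(EXIT_FAILURE);
    }
}

// SAXPY (y = a*x + y) as a sequential CPU loop.
static void saxpy_cpu(int n, float a, const float* x, float* y) {
    for (int i = 0; i < n; ++i) y[i] = a * x[i] + y[i];
}

// The same computation on the GPU: one thread per element.
__global__ void saxpy_gpu(int n, float a, const float* x, float* y) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) y[i] = a * x[i] + y[i];
}

int main() {
    const int n = 1 << 24;  // about 16.7 million elements, an arbitrary size
    const float a = 2.0f;
    const size_t bytes = n * sizeof(float);
    std::vector<float> x(n, 1.0f), y(n, 2.0f);

    auto t0 = std::chrono::steady_clock::now();
    saxpy_cpu(n, a, x.data(), y.data());
    auto t1 = std::chrono::steady_clock::now();
    double cpu_ms = std::chrono::duration<double, std::milli>(t1 - t0).count();

    float *d_x = nullptr, *d_y = nullptr;
    check(cudaMalloc(&d_x, bytes), "cudaMalloc");
    check(cudaMalloc(&d_y, bytes), "cudaMalloc");
    check(cudaMemcpy(d_x, x.data(), bytes, cudaMemcpyHostToDevice), "cudaMemcpy");
    check(cudaMemcpy(d_y, y.data(), bytes, cudaMemcpyHostToDevice), "cudaMemcpy");

    const int threads = 256;
    const int blocks = (n + threads - 1) / threads;

    // Warm-up launch with n = 0 (no elements touched) so one-time setup
    // costs are not counted in the timed launch.
    saxpy_gpu<<<1, 1>>>(0, a, d_x, d_y);
    check(cudaDeviceSynchronize(), "warm-up");

    cudaEvent_t start, stop;
    check(cudaEventCreate(&start), "cudaEventCreate");
    check(cudaEventCreate(&stop), "cudaEventCreate");
    check(cudaEventRecord(start), "cudaEventRecord");
    saxpy_gpu<<<blocks, threads>>>(n, a, d_x, d_y);
    check(cudaEventRecord(stop), "cudaEventRecord");
    check(cudaEventSynchronize(stop), "cudaEventSynchronize");
    check(cudaGetLastError(), "saxpy_gpu launch");
    float gpu_ms = 0.0f;
    check(cudaEventElapsedTime(&gpu_ms, start, stop), "cudaEventElapsedTime");

    // Host-device transfers are excluded from both numbers.
    std::printf("CPU loop: %.2f ms, GPU kernel: %.2f ms\n", cpu_ms, gpu_ms);

    check(cudaEventDestroy(start), "cudaEventDestroy");
    check(cudaEventDestroy(stop), "cudaEventDestroy");
    check(cudaFree(d_x), "cudaFree");
    check(cudaFree(d_y), "cudaFree");
    return 0;
}
```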

Is CUDA limited to NVIDIA GPUs? Yes. CUDA is proprietary to NVIDIA and does not work on GPUs made by Intel or AMD. Most software does not need CUDA, but some does: for example, specialised rendering software such as OctaneRender, and CUDA-based deep learning.
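Because CUDA only runs on NVIDIA hardware, portable programs usually check for a CUDA-capable device before using one. A minimal sketch relies on `cudaGetDeviceCount`, which returns an error rather than a device count when no usable NVIDIA GPU or driver is present. The helper `cuda_device_count` is hypothetical.

```cuda
#include <cstdio>
#include <cuda_runtime.h>

// Returns the number of CUDA-capable devices, or 0 if the runtime reports an
// error (for example, when no NVIDIA GPU or no NVIDIA driver is present).
static int cuda_device_count() {
    int count = 0;
    cudaError_t err = cudaGetDeviceCount(&count);
    if (err != cudaSuccess) {
        std::printf("CUDA unavailable: %s\n", cudaGetErrorString(err));
        return 0;
    }
    return count;
}

int main() {
    const int count = cuda_device_count();
    if (count == 0) {
        std::printf("No CUDA-capable GPU found; CUDA code cannot run here.\n");
        return 1;
    }
    for (int dev = 0; dev < count; ++dev) {
        cudaDeviceProp prop;
        if (cudaGetDeviceProperties(&prop, dev) == cudaSuccess)
            std::printf("Device %d: %s\n", dev, prop.name);
    }
    return 0;
}
```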

CUDA code cannot run directly on a CPU, but it can be emulated: the threads are computed in parallel as iterations of a vectorized loop.

Unlike OpenCL, CUDA is proprietary, so CUDA-enabled GPUs are available only from NVIDIA. Attempts to implement CUDA on other GPUs include:

- Project Coriander: converts CUDA C++11 source to OpenCL 1.2 C. It is a fork of CUDA-on-CL intended to run TensorFlow.
- CU2CL: converts CUDA 3.2 C++ to OpenCL C.
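The emulation idea can be sketched in a few lines. In this hypothetical example, the per-thread work lives in a `__host__ __device__` function. The same code therefore runs once per GPU thread inside `square_kernel` and once per loop iteration inside `square_emulated`, which walks every (block, thread) index pair on the CPU. This is a simplification that assumes independent threads; real emulators must also handle barriers and shared memory.

```cuda
#include <cstdio>
#include <vector>
#include <cuda_runtime.h>

// The work of one CUDA thread, compiled for both the host and the device.
__host__ __device__ inline void square_one(int i, const float* in, float* out, int n) {
    if (i < n) out[i] = in[i] * in[i];
}

// GPU version: each thread computes its global index and handles one element.
__global__ void square_kernel(const float* in, float* out, int n) {
    square_one(blockIdx.x * blockDim.x + threadIdx.x, in, out, n);
}

// CPU emulation of square_kernel<<<grid, block>>>: each (block, thread) pair
// becomes one loop iteration. The iterations are independent, so a compiler
// may vectorize the inner loop. Only valid for kernels whose threads do not
// cooperate (no __syncthreads(), no shared memory).
static void square_emulated(int grid, int block, const float* in, float* out, int n) {
    for (int b = 0; b < grid; ++b)
        for (int t = 0; t < block; ++t)
            square_one(b * block + t, in, out, n);
}

int main() {
    const int n = 1000, block = 256, grid = (n + block - 1) / block;
    const size_t bytes = n * sizeof(float);
    std::vector<float> in(n), emulated(n), gpu(n);
    for (int i = 0; i < n; ++i) in[i] = 0.5f * i;

    square_emulated(grid, block, in.data(), emulated.data(), n);

    float *d_in = nullptr, *d_out = nullptr;
    bool ok = cudaMalloc(&d_in, bytes) == cudaSuccess &&
              cudaMalloc(&d_out, bytes) == cudaSuccess &&
              cudaMemcpy(d_in, in.data(), bytes, cudaMemcpyHostToDevice) == cudaSuccess;
    if (ok) {
        square_kernel<<<grid, block>>>(d_in, d_out, n);
        ok = cudaGetLastError() == cudaSuccess &&
             cudaMemcpy(gpu.data(), d_out, bytes, cudaMemcpyDeviceToHost) == cudaSuccess;
    }
    cudaFree(d_in);   // cudaFree on a null pointer performs no operation
    cudaFree(d_out);

    if (!ok) {
        std::printf("No usable GPU; emulated last element = %g\n", emulated[n - 1]);
        return 0;
    }
    int mismatches = 0;
    for (int i = 0; i < n; ++i)
        if (emulated[i] != gpu[i]) ++mismatches;
    std::printf("GPU vs CPU emulation mismatches: %d\n", mismatches);
    return 0;
}
```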

How much GPU memory does CUDA use?

The CUDA context needs approximately 600 to 1000 MB of GPU memory, depending on the CUDA version and the device.

The main difference between a CPU and a CUDA GPU lies in how they handle work. A CPU is designed to execute a small number of tasks very quickly. A CUDA GPU is designed to handle a very large number of tasks simultaneously. CUDA GPUs use a parallel computing model, meaning many calculations happen at the same time instead of executing in sequence.

Each GPU core usually has one or more dedicated shader data caches. In earlier GPU designs, shaders could only read data from memory, not write to it, because they typically only needed to access texture and buffer inputs to perform their tasks. As a result, per-core data caches have traditionally been read-only.

CUDA offers faster rendering times by utilizing the GPU's parallel processing capabilities. RTX technology provides real-time ray tracing and AI-enhanced rendering for more realistic and immersive results.
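A minimal sketch can check the context overhead figure on a particular machine. It forces context creation and then asks the runtime how much device memory remains, using `cudaMemGetInfo`. The helper `device_memory` is hypothetical. The `cudaFree(0)` call is a common idiom for initializing the context, not a dedicated API. Comparing the result with the usage reported before the program starts (for example by `nvidia-smi`) gives a rough idea of the context's footprint.

```cuda
#include <cstdio>
#include <cuda_runtime.h>

// Reports free and total memory on the current device. Returns false if no
// CUDA context could be created (for example, no GPU or no driver).
static bool device_memory(size_t* free_bytes, size_t* total_bytes) {
    // cudaFree(0) is a common idiom for forcing the runtime to create its context.
    if (cudaFree(0) != cudaSuccess) return false;
    return cudaMemGetInfo(free_bytes, total_bytes) == cudaSuccess;
}

int main() {
    size_t free_b = 0, total_b = 0;
    if (!device_memory(&free_b, &total_b)) {
        std::printf("Could not create a CUDA context.\n");
        return 1;
    }
    const double mib = 1024.0 * 1024.0;
    // "In use" covers this process's context plus anything other processes
    // (including the display) have allocated on the same GPU.
    std::printf("total %.0f MiB, free %.0f MiB, in use %.0f MiB\n",
                total_b / mib, free_b / mib, (total_b - free_b) / mib);
    return 0;
}
```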

Why is CUDA so fast? CUDA is based on C and C++, and it lets us accelerate computing tasks by parallelizing them on the GPU. A program is divided into many smaller tasks that execute independently on the GPU's cores, which can significantly improve the program's performance.
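Dividing the work usually means giving every thread one small piece of the data. The sketch below assumes a row-major matrix and an arbitrary 16 x 16 block size. It launches a two-dimensional grid in which each thread computes one element of `C = A + B` from its block and thread indices. The names `matrix_add` and `grid_for` are illustrative.

```cuda
#include <cstdio>
#include <cstdlib>
#include <vector>
#include <cuda_runtime.h>

static void check(cudaError_t err, const char* what) {
    if (err != cudaSuccess) {
        std::fprintf(stderr, "%s: %s\n", what, cudaGetErrorString(err));
        std::exit(EXIT_FAILURE);
    }
}

// One thread per element: each thread adds one independent pair of values.
__global__ void matrix_add(const float* a, const float* b, float* c, int rows, int cols) {
    int col = blockIdx.x * blockDim.x + threadIdx.x;
    int row = blockIdx.y * blockDim.y + threadIdx.y;
    if (row < rows && col < cols) {  // the grid may overhang the matrix edges
        int idx = row * cols + col;    // row-major layout
        c[idx] = a[idx] + b[idx];
    }
}

// Number of blocks needed to cover a rows x cols matrix with tiles of size `block`.
static dim3 grid_for(int rows, int cols, dim3 block) {
    return dim3((cols + block.x - 1) / block.x, (rows + block.y - 1) / block.y);
}

int main() {
    const int rows = 1000, cols = 1500;
    const size_t count = size_t(rows) * cols;
    const size_t bytes = count * sizeof(float);
    std::vector<float> a(count, 1.0f), b(count, 2.0f), c(count, 0.0f);

    float *d_a = nullptr, *d_b = nullptr, *d_c = nullptr;
    check(cudaMalloc(&d_a, bytes), "cudaMalloc");
    check(cudaMalloc(&d_b, bytes), "cudaMalloc");
    check(cudaMalloc(&d_c, bytes), "cudaMalloc");
    check(cudaMemcpy(d_a, a.data(), bytes, cudaMemcpyHostToDevice), "cudaMemcpy");
    check(cudaMemcpy(d_b, b.data(), bytes, cudaMemcpyHostToDevice), "cudaMemcpy");

    const dim3 block(16, 16);  // 256 threads per block
    const dim3 grid = grid_for(rows, cols, block);
    matrix_add<<<grid, block>>>(d_a, d_b, d_c, rows, cols);
    check(cudaGetLastError(), "matrix_add launch");
    check(cudaMemcpy(c.data(), d_c, bytes, cudaMemcpyDeviceToHost), "cudaMemcpy");

    std::printf("%u x %u blocks of %u x %u threads, c[0] = %g\n",
                grid.x, grid.y, block.x, block.y, c[0]);

    check(cudaFree(d_a), "cudaFree");
    check(cudaFree(d_b), "cudaFree");
    check(cudaFree(d_c), "cudaFree");
    return 0;
}
```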

Do I need an NVIDIA GPU for CUDA? Yes. To run a CUDA application, the system needs a CUDA-capable GPU and an NVIDIA display driver that is compatible with the CUDA Toolkit used to build the application.
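A quick way to check this compatibility from code is to compare two version numbers. `cudaDriverGetVersion` reports the newest CUDA version the installed driver supports. `cudaRuntimeGetVersion` reports the version of the CUDA runtime the program uses. The sketch below is a conservative check, and the helper names are hypothetical.

```cuda
#include <cstdio>
#include <cuda_runtime.h>

// CUDA encodes versions as 1000 * major + 10 * minor (e.g. 9020 for 9.2).
static int version_major(int v) { return v / 1000; }
static int version_minor(int v) { return (v % 1000) / 10; }

int main() {
    int driver = 0, runtime = 0;
    cudaDriverGetVersion(&driver);    // reports 0 if no NVIDIA driver is installed
    cudaRuntimeGetVersion(&runtime);  // version of the CUDA runtime this program uses
    std::printf("driver supports CUDA %d.%d, runtime is CUDA %d.%d\n",
                version_major(driver), version_minor(driver),
                version_major(runtime), version_minor(runtime));
    if (driver == 0) {
        std::printf("No NVIDIA driver found.\n");
        return 1;
    }
    // Conservative check: a driver at least as new as the runtime is always fine.
    // CUDA 11 and later also offer minor version compatibility, so an older
    // driver with the same major version may still work for many applications.
    if (driver < runtime) {
        std::printf("Driver is older than the runtime; consider updating the driver.\n");
        return 1;
    }
    return 0;
}
```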

Can I run CUDA on an Intel GPU?

In short, no. Intel does not currently support CUDA on any of its GPUs, although you can find some possible workarounds like this. If your main goal is machine learning, you can still consider Google Colab or similar services, which provide NVIDIA GPUs.

In the context of GPU architecture, CUDA cores are the GPU's equivalent of CPU cores, but their design and function differ fundamentally. A CUDA core is a much simpler unit. A GPU groups many of these cores into streaming multiprocessors that execute the same instruction across many threads. GPUs with many CUDA cores can perform certain types of complex calculations much faster than those with fewer cores. This is why the CUDA core count is often seen as an indicator of a GPU's overall performance, at least when comparing GPUs of the same architecture generation. NVIDIA CUDA cores are the heart of these GPUs.
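The runtime does not report a CUDA core count directly. It does report the number of streaming multiprocessors (SMs) and the compute capability, which together determine the core count. A minimal sketch lists them for each device, using the hypothetical helper `sm_count`. On a system with only an Intel GPU, the device query reports an error instead.

```cuda
#include <cstdio>
#include <cuda_runtime.h>

// Returns the number of streaming multiprocessors (SMs) on `dev`, or -1 if the
// device cannot be queried (for example, on a machine without an NVIDIA GPU).
static int sm_count(int dev) {
    cudaDeviceProp prop;
    if (cudaGetDeviceProperties(&prop, dev) != cudaSuccess) return -1;
    return prop.multiProcessorCount;
}

int main() {
    int count = 0;
    if (cudaGetDeviceCount(&count) != cudaSuccess || count == 0) {
        std::printf("No CUDA device found.\n");
        return 1;
    }
    for (int dev = 0; dev < count; ++dev) {
        const int sms = sm_count(dev);
        cudaDeviceProp prop;
        if (sms < 0 || cudaGetDeviceProperties(&prop, dev) != cudaSuccess) continue;
        // The runtime reports SMs, not CUDA cores; the number of cores per SM
        // depends on the architecture (compute capability) and must be looked
        // up in NVIDIA's architecture documentation.
        std::printf("%s: %d SMs, compute capability %d.%d\n",
                    prop.name, sms, prop.major, prop.minor);
    }
    return 0;
}
```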

Is it safe to install CUDA? Yes. Installing CUDA on both the pure-Windows side and the WSL2 side won't cause a problem, and it is what you would have to do to use CUDA in both environments. Other requirements still apply. For example, the CUDA Toolkit version installed in each location should be consistent with the GPU driver you have already installed.
