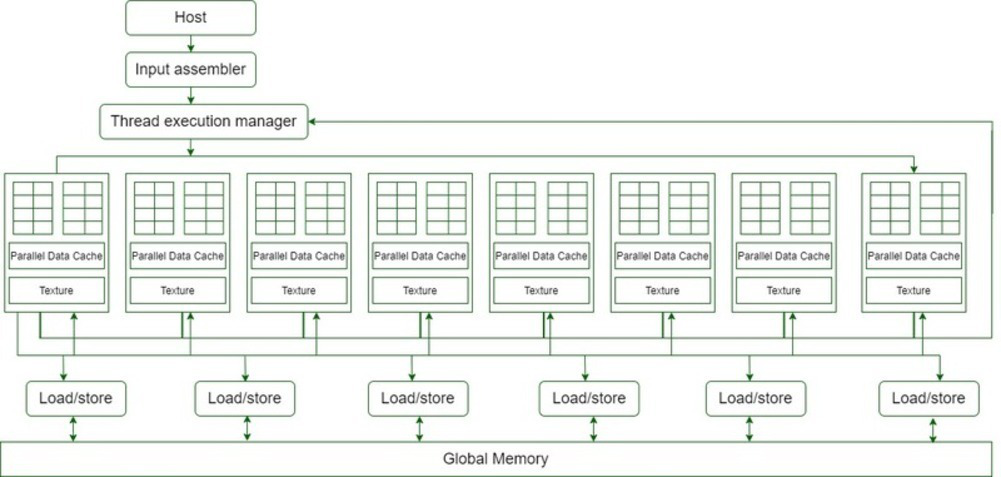

Does CUDA use CPU?

Is CUDA a CPU or GPU : CUDA® (Compute Unified Device Architecture) is a parallel computing platform and programming model developed by NVIDIA for general-purpose computing on graphics processing units (GPUs). It enables dramatic increases in computing performance by harnessing the power of the GPU. CUDA is designed specifically for NVIDIA GPUs and does not natively run on GPUs from other manufacturers such as AMD or Intel.

CUDA code cannot run directly on the CPU, but it can be emulated. In that case, the GPU threads are computed in parallel as iterations of a vectorized loop.
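
The emulation idea can be sketched in a few lines of Python. This is a minimal illustration, not how any particular emulator works: the names `ThreadCtx`, `emulate_launch`, and `vector_add` are hypothetical, only a one-dimensional grid is modeled, and features such as `__syncthreads()` and shared memory are ignored. Each (block, thread) pair of a launch like `kernel<<<grid, block>>>` simply becomes one iteration of an ordinary loop on the CPU.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass(frozen=True)
class ThreadCtx:
    """Hypothetical stand-in for CUDA's 1-D built-ins blockIdx.x, blockDim.x, threadIdx.x."""
    block_idx: int
    block_dim: int
    thread_idx: int


def emulate_launch(grid_dim: int, block_dim: int,
                   kernel: Callable[[ThreadCtx], None]) -> None:
    """Run `kernel` once per (block, thread) pair, like kernel<<<grid_dim, block_dim>>>."""
    for b in range(grid_dim):
        # The threads of one block become iterations of a loop; a compiled
        # emulator can vectorize this loop, while Python just runs it serially.
        for t in range(block_dim):
            kernel(ThreadCtx(b, block_dim, t))


def vector_add(a: List[float], b: List[float]) -> List[float]:
    n = len(a)
    c = [0.0] * n
    block = 256
    grid = (n + block - 1) // block  # round up so every element gets a thread

    def kernel(ctx: ThreadCtx) -> None:
        i = ctx.block_idx * ctx.block_dim + ctx.thread_idx  # global thread index
        if i < n:  # the last block may have threads past the end of the data
            c[i] = a[i] + b[i]

    emulate_launch(grid, block, kernel)
    return c


print(vector_add([1.0] * 1000, [2.0] * 1000)[999])  # 3.0
```

The bounds check `if i < n` mirrors what a real CUDA kernel needs, because the grid is rounded up to whole blocks (here 4 blocks of 256 threads for 1,000 elements).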

Are CUDA cores used in gaming : CUDA cores contribute to gaming performance by rendering graphics and processing game physics. Their parallel processing capabilities enable them to perform a large number of calculations simultaneously, leading to smoother and more realistic graphics and more immersive gaming experiences.

Is CUDA faster than CPU

For small matrices, the task runs faster on the CPU, whereas for larger arrays CUDA outperforms the CPU by large margins. This is typically because launching a kernel and copying data between host and device memory carry a fixed cost that only pays off once there is enough work to spread across the GPU. Plotted on the same scale as the CPU times, the CUDA times look almost flat, but plotting the CUDA times alone shows that they also increase linearly.

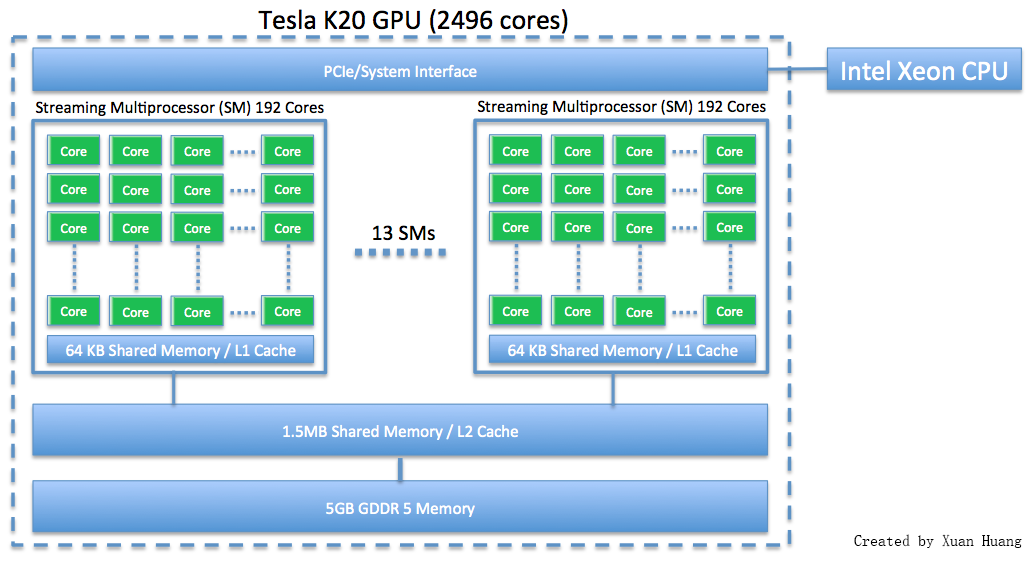
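
A simple linear cost model reproduces this shape. The sketch below assumes, purely for illustration, that the CPU spends 5 ns per element while the GPU pays a fixed 40 µs overhead (kernel launch plus host-device copies) and then 1 ns per element. These constants are hypothetical placeholders, not measurements from the benchmark. Setting the two costs equal gives the crossover size `n* = overhead / (cpu_cost - gpu_cost)`.

```python
# Hypothetical constants in nanoseconds; replace them with your own measurements.
CPU_NS_PER_ELEM = 5
GPU_OVERHEAD_NS = 40_000  # assumed kernel launch + host<->device copy cost
GPU_NS_PER_ELEM = 1


def cpu_time_ns(n: int) -> int:
    """CPU cost: purely linear in the number of elements."""
    return CPU_NS_PER_ELEM * n


def gpu_time_ns(n: int) -> int:
    """GPU cost: still linear in n, just with a fixed offset and a smaller slope."""
    return GPU_OVERHEAD_NS + GPU_NS_PER_ELEM * n


def crossover_n() -> float:
    """Solve overhead + g*n = c*n for n, i.e. n = overhead / (c - g)."""
    return GPU_OVERHEAD_NS / (CPU_NS_PER_ELEM - GPU_NS_PER_ELEM)


for n in (1_000, 100_000, 1_000_000):
    faster = "CPU" if cpu_time_ns(n) < gpu_time_ns(n) else "GPU"
    print(f"n={n:>9,}  cpu={cpu_time_ns(n):>9,} ns  gpu={gpu_time_ns(n):>9,} ns  -> {faster}")
```

With these assumed numbers the GPU wins above 10,000 elements, and its time keeps growing linearly with a smaller slope, which is why the CUDA curve only looks flat next to the much steeper CPU curve.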

Why does AI use GPUs instead of CPUs : A GPU contains hundreds or thousands of cores, which allows massively parallel computation and very fast graphics output. GPUs also include more transistors than CPUs. With its higher clock speed and fewer cores, the CPU is better suited to everyday single-threaded tasks than to AI workloads.
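
Matrix multiplication, a core operation in most AI workloads, shows why many cores help: every element of the output is an independent dot product. The Python sketch below (function names are hypothetical) computes the output elements in a random order to demonstrate that the result does not depend on execution order, which is what lets a GPU hand each element to a different thread. It illustrates the independence only; pure Python gains no speed from it.

```python
import random
from typing import List

Matrix = List[List[float]]


def output_element(a: Matrix, b: Matrix, i: int, j: int) -> float:
    """One 'thread' of work: the dot product of row i of a and column j of b."""
    return sum(a[i][k] * b[k][j] for k in range(len(b)))


def matmul_any_order(a: Matrix, b: Matrix, seed: int = 0) -> Matrix:
    rows, cols = len(a), len(b[0])
    c: Matrix = [[0.0] * cols for _ in range(rows)]
    jobs = [(i, j) for i in range(rows) for j in range(cols)]
    random.Random(seed).shuffle(jobs)  # any execution order gives the same result
    for i, j in jobs:
        # No job reads another job's output, so all of them could run at once.
        c[i][j] = output_element(a, b, i, j)
    return c


print(matmul_any_order([[1, 2], [3, 4]], [[5, 6], [7, 8]]))  # [[19, 22], [43, 50]]
```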

CUDA technology is exclusive to NVIDIA and is not directly compatible with AMD GPUs. If the AI tools you use prefer CUDA over AMD's ROCm, consider checking for software updates, exploring alternative tools that support AMD, and asking community forums or the developers for possible solutions.

Unlike OpenCL, CUDA is proprietary, so CUDA-enabled GPUs are available only from NVIDIA. Attempts to implement CUDA on other GPUs include Project Coriander, which converts CUDA C++11 source to OpenCL 1.2 C and is a fork of CUDA-on-CL intended to run TensorFlow, and CU2CL, which converts CUDA 3.2 C++ to OpenCL C.
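
Translators such as CU2CL and Coriander rewrite CUDA source into OpenCL. The core idea can be shown with a deliberately tiny toy, assuming simple one-to-one substitutions of CUDA keywords and built-ins by their OpenCL C counterparts. The mapping table and `toy_cuda_to_opencl` are hypothetical and do not reflect how those tools are implemented.

```python
# Hypothetical one-to-one substitutions from CUDA names to OpenCL C equivalents.
CUDA_TO_OPENCL = {
    "__global__": "__kernel",                       # kernel entry point
    "__shared__": "__local",                        # per-block / per-work-group memory
    "__syncthreads()": "barrier(CLK_LOCAL_MEM_FENCE)",
    "threadIdx.x": "get_local_id(0)",
    "blockIdx.x": "get_group_id(0)",
    "blockDim.x": "get_local_size(0)",
}


def toy_cuda_to_opencl(src: str) -> str:
    """Apply each substitution in turn; there is no parsing, so only trivial kernels work."""
    for cuda_name, opencl_name in CUDA_TO_OPENCL.items():
        src = src.replace(cuda_name, opencl_name)
    return src


cuda_kernel = ("__global__ void inc(float* a) "
               "{ int i = blockIdx.x * blockDim.x + threadIdx.x; a[i] += 1.0f; }")
print(toy_cuda_to_opencl(cuda_kernel))
```

The output is still not valid OpenCL, because OpenCL requires an address-space qualifier such as `__global` on the pointer parameter. Adding it needs an understanding of the code's structure, which is why real translators work from a parsed representation of the source rather than raw text.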

How many CUDA cores does the RTX 4090 have

16,384 CUDA cores

NVIDIA's Ada Lovelace architecture gives the GeForce RTX 4090 16,384 CUDA cores, compared with 10,496 CUDA cores on the previous-generation RTX 3090.

CUDA is based on C and C++, and it lets us accelerate computing tasks by parallelizing them on the GPU. This means we can divide a program into smaller tasks that execute independently on the GPU, which can significantly improve the program's performance.

CUDA offers faster rendering times by using the GPU's parallel processing capabilities. RTX technology adds real-time ray tracing and AI-enhanced rendering for more realistic and immersive results.

A GPU can never fully replace a CPU: it complements the CPU by running repetitive calculations within an application in parallel while the main program continues to run on the CPU.
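
The division of labor between CPU and GPU can be illustrated with Python's standard `concurrent.futures` module, assuming a thread pool as a stand-in for the GPU. This is an analogy for the control flow only: `square_chunk` and `offload_and_continue` are hypothetical names, and Python threads do not provide GPU-style parallel speedups. Work is split into chunks and submitted without blocking, the main program keeps running, and the results are collected at the end.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List, Tuple


def square_chunk(chunk: List[int]) -> List[int]:
    """The repetitive calculation that would be offloaded (a CUDA kernel in practice)."""
    return [x * x for x in chunk]


def offload_and_continue(data: List[int], n_chunks: int = 4) -> Tuple[List[int], int]:
    size = max(1, (len(data) + n_chunks - 1) // n_chunks)  # elements per chunk
    chunks = [data[i:i + size] for i in range(0, len(data), size)]
    with ThreadPoolExecutor(max_workers=n_chunks) as pool:
        # Submitting returns immediately, much like an asynchronous kernel launch.
        futures = [pool.submit(square_chunk, c) for c in chunks]
        # Meanwhile the "host" keeps running the main program.
        host_total = sum(data)
        # Collecting results blocks until the workers finish, similar in spirit
        # to waiting for the GPU with cudaDeviceSynchronize().
        squares = [y for f in futures for y in f.result()]
    return squares, host_total


print(offload_and_continue(list(range(6))))  # ([0, 1, 4, 9, 16, 25], 15)
```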

How much is an H100 GPU : One retailer listed the NVIDIA H100 80GB GPU at $28,138.70.

Can AMD chips run CUDA : One of the most interesting parts of AMD's ROCm is that it lets you, in a sense, emulate and run CUDA, so professional software that uses GPU acceleration, such as Blender, will run.

Is CUDA C or C++

Whether for the host computer or the GPU device, all CUDA source code is now processed according to C++ syntax rules. This was not always the case: earlier versions of CUDA were based on C syntax rules.

In general, the more CUDA cores a graphics card has, the better it performs on highly parallel tasks. Another significant benefit of CUDA cores is power efficiency, since on such workloads they can deliver more performance per watt than traditional CPU cores.

You want at least 32GB of DDR5 system memory for an RTX 4090 build. If you're running an AMD processor that supports EXPO profiles, make sure you pick a memory kit with the correct EXPO profile timings.

Is the RTX 4090 overkill : The RTX 4090 is impressive, but most people will be better off getting an RTX 4080 Super or a high-end 30-series graphics card if they can find one. The $999 RTX 4080 Super and the $800 RTX 4070 Ti Super will both comfortably outperform a last-gen RTX 3090 Ti.
