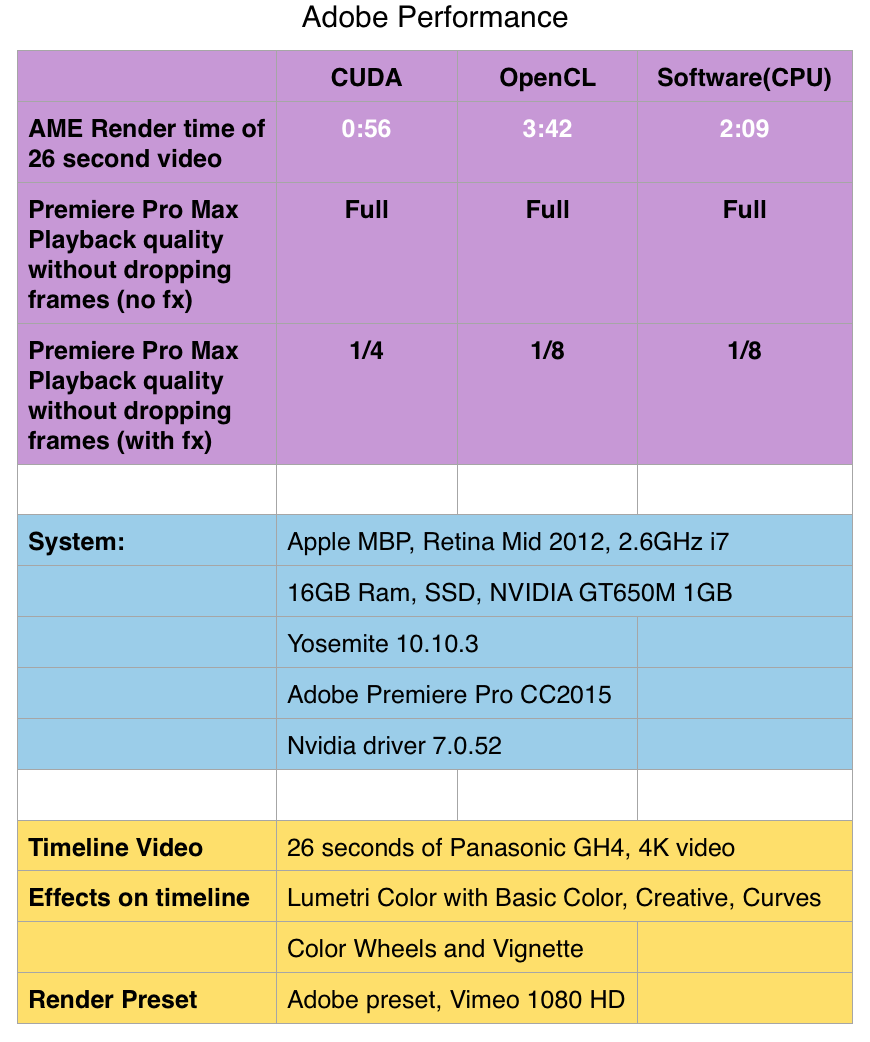

Answer: Does CUDA help performance? More answers: Does CUDA improve performance?

CUDA cores improve AI performance by accelerating both model training and inference. Because they perform a large number of calculations simultaneously, training finishes sooner and applications that need real-time predictions respond faster.

The CUDA (Compute Unified Device Architecture) platform is a software layer developed by NVIDIA that opens its GPUs to general-purpose computation. It gives developers access to the raw computing power of CUDA-capable GPUs, so suitable workloads can be processed faster than on a traditional CPU.

Tasks that don't require sequential execution can run in parallel with other tasks on the GPU. CUDA supports C, C++, and Fortran, which makes it relatively straightforward to offload computation-intensive tasks to an NVIDIA GPU.

What are the advantages of CUDA? CUDA has been reported to run up to 7.34x faster than OpenCL, which can make it the more efficient choice for parallel and distributed computing. CUDA also enables parallel programming on GPUs, so a desktop PC can handle tasks that previously required a large cluster of PCs or access to an HPC facility.

Is RTX faster than CUDA?

The two are not directly comparable. CUDA shortens rendering times by using the GPU's parallel processing capabilities, while RTX technology adds real-time ray tracing and AI-enhanced rendering for more realistic and immersive results.

Does installing CUDA affect gaming performance? Installing the CUDA driver package by itself has no impact on performance. If you run CUDA-accelerated apps and graphical applications at the same time on the same GPU, they do affect each other's performance, because the GPU is a shared resource.

The fastest PyTorch version on the GPU takes 1.8 s (with both torch.compile and torch.jit.script), compared to 0.3 s for the CUDA version.

CUDA code cannot run directly on the CPU, but it can be emulated there. In that case, the threads are computed in parallel as part of a vectorized loop.
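To make the emulation idea concrete, here is a minimal sketch in plain Python of how a one-dimensional kernel launch can be replayed as loops on the CPU. The names `launch` and `vector_add` are hypothetical, and the loops here run one thread at a time rather than vectorized; a real emulator would batch them.

```python
def launch(kernel, grid_dim, block_dim, *args):
    """Replay a 1-D CUDA-style launch on the CPU: each (block, thread) pair runs once."""
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            kernel(block_idx, block_dim, thread_idx, *args)


def vector_add(block_idx, block_dim, thread_idx, a, b, out):
    """Kernel body: each emulated thread handles at most one element."""
    i = block_idx * block_dim + thread_idx  # global thread index
    if i < len(out):  # guard: the last block may run past the end of the data
        out[i] = a[i] + b[i]


n = 10
a = list(range(n))
b = [10 * v for v in a]
out = [0] * n

block_dim = 4
grid_dim = (n + block_dim - 1) // block_dim  # enough blocks to cover all n elements

launch(vector_add, grid_dim, block_dim, a, b, out)
print(out)
```

Because no thread depends on another thread's result, the iterations can run in any order, which is what lets a GPU run them at the same time.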

Is CUDA programming worth it?

If that task is GPU programming, CUDA is the best language I know for it, much better than SYCL, OpenCL, Vulkan/OpenGL with shaders, etc. If those other technologies were better, I would use them instead.

CUDA can't be the best choice in every case, though, because it is tied to a single GPU vendor.

We'll assume that you've done the first step and checked your software, and that whatever you use supports both CUDA and OpenCL. If you have an Nvidia card, then use CUDA. It's considered faster than OpenCL much of the time. Note too that Nvidia cards do support OpenCL. The general consensus is that they're not as good at it as AMD cards are, but they're coming closer all the time.

In short, CUDA cores are specialized cores designed to speed up certain types of calculations, particularly those needed for graphics processing. Other things being equal, GPUs with many CUDA cores perform these calculations much faster than GPUs with fewer cores.

Which GPU is best for CUDA? One recommended option is the Nvidia GeForce RTX 4070 Super.

You want some creative performance as well: with its strong CUDA backbone, the RTX 4070 Super is a great option for those looking to get into creative content work.

Why is CUDA so slow? Because you are not measuring the actual time taken by the kernel or the data transfer, but "something else". CUDA executes work asynchronously, so if you don't synchronize the code, the CPU just runs ahead and can start and stop the timer while the data is still being transferred.
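One way to avoid this pitfall is to synchronize before reading each timestamp. The sketch below assumes PyTorch is the framework being timed; the helper `timed` is a hypothetical name, while `torch.cuda.synchronize` is the existing PyTorch call that blocks until queued GPU work has finished. The GPU part only runs if PyTorch and a CUDA device are present.

```python
import time

try:
    import torch  # assumption: PyTorch is the framework being timed
except ImportError:
    torch = None


def timed(fn, sync=None):
    """Run fn() and return (result, elapsed seconds).

    If sync is given, it is called before each timestamp so that
    asynchronous work is included in the measurement.
    """
    if sync is not None:
        sync()  # drain work queued before the timer starts
    start = time.perf_counter()
    result = fn()
    if sync is not None:
        sync()  # wait until the work launched by fn has finished
    return result, time.perf_counter() - start


if torch is not None and torch.cuda.is_available():
    x = torch.randn(4096, 4096, device="cuda")
    timed(lambda: x @ x, torch.cuda.synchronize)  # warm-up run, not reported

    _, launch_only = timed(lambda: x @ x)  # misleading: mostly launch overhead
    _, full = timed(lambda: x @ x, torch.cuda.synchronize)
    print(f"without sync: {launch_only:.6f} s, with sync: {full:.6f} s")
```

The unsynchronized figure mostly reflects how long the CPU took to queue the work, not how long the GPU took to do it.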

How many CUDA cores does the RTX 4090 have?

16,384 CUDA cores

NVIDIA's Ada Lovelace architecture gives the GeForce RTX 4090 16,384 CUDA cores, compared with 10,496 CUDA cores in the previous-generation GeForce RTX 3090.
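These totals follow from the number of streaming multiprocessors (SMs) on each chip. The short calculation below is a sketch that assumes NVIDIA's published figures of 128 SMs for the RTX 4090 and 82 SMs for the RTX 3090, with 128 CUDA cores per SM on both; those SM counts are not stated in the answer above.

```python
# Assumed from NVIDIA's published specifications, not from the answer above.
CORES_PER_SM = 128
SM_COUNT = {"RTX 3090": 82, "RTX 4090": 128}

# Total CUDA cores = number of SMs * CUDA cores per SM.
cuda_cores = {gpu: sms * CORES_PER_SM for gpu, sms in SM_COUNT.items()}
ratio = cuda_cores["RTX 4090"] / cuda_cores["RTX 3090"]

for gpu, cores in cuda_cores.items():
    print(f"{gpu}: {cores:,} CUDA cores")
print(f"RTX 4090 has {ratio:.2f}x the CUDA cores of the RTX 3090")
```

The core-count ratio is only a rough guide to relative performance, since clock speeds, memory bandwidth, and architectural changes also differ between the two generations.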

CUDA (Compute Unified Device Architecture) is a parallel computing platform and programming model developed by NVIDIA for general-purpose computing on GPUs (graphics processing units). CUDA is designed specifically for NVIDIA GPUs and is not compatible with GPUs from other manufacturers such as AMD or Intel.

While CUDA cores are more specialized than other types of cores, they offer a significant performance boost for certain types of applications, such as time-intensive workloads, gaming, and deep learning. If your application can benefit from parallel computing, CUDA cores can offer a major performance advantage.

Is CUDA necessary for PyTorch? Your locally installed CUDA toolkit is used only if you build PyTorch from source or build a custom CUDA extension. You won't need it to run PyTorch workloads, because the binaries (pip wheels and conda binaries) install all needed requirements.
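To see which CUDA version a PyTorch binary was built with, you can ask PyTorch itself instead of looking for a local toolkit. The sketch below uses `torch.version.cuda`, `torch.cuda.is_available()`, and `torch.cuda.get_device_name()`; the wrapper `describe_cuda` is a hypothetical helper, and it returns early if PyTorch is not installed.

```python
def describe_cuda():
    """Report what PyTorch can see, without relying on a local CUDA toolkit."""
    try:
        import torch
    except ImportError:
        return {"torch_installed": False}

    info = {
        "torch_installed": True,
        "torch_version": torch.__version__,
        "bundled_cuda": torch.version.cuda,  # None for CPU-only builds
        "gpu_available": torch.cuda.is_available(),
    }
    if info["gpu_available"]:
        info["gpu_name"] = torch.cuda.get_device_name(0)
    return info


print(describe_cuda())
```

If `bundled_cuda` shows a version but `gpu_available` is false, the usual suspects are a missing or outdated NVIDIA driver rather than a missing toolkit.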