OpenAI is now among the biggest consumers of Nvidia servers clusters on the planet, and the performance of its Infiniband equipment apparently has been unreliable at times, prompting the startup to move away from it.Inference. Drive breakthrough AI inference performance. NVIDIA offers performance, efficiency, and responsiveness critical to powering the next generation of AI inference—in the cloud, in the data center, at the network edge, and in embedded devices.Developers can also tap into the potential of Chat with RTX. Built upon the open-source TensorRT-LLM RAG Developer Reference Project, the app serves as a springboard for crafting custom RAG-based applications that further harness the power of RTX acceleration.

What is generative AI in Nvidia : Generative AI describes technologies that are used to generate new content based on a variety of inputs. In recent time, Generative AI involves the use of neural networks to identify patterns and structures within existing data to generate new content.

Is ChatGPT using Nvidia

It was developed by NVIDIA and Microsoft Azure, in collaboration with OpenAI, to host ChatGPT and other large language models (LLMs) at any scale.

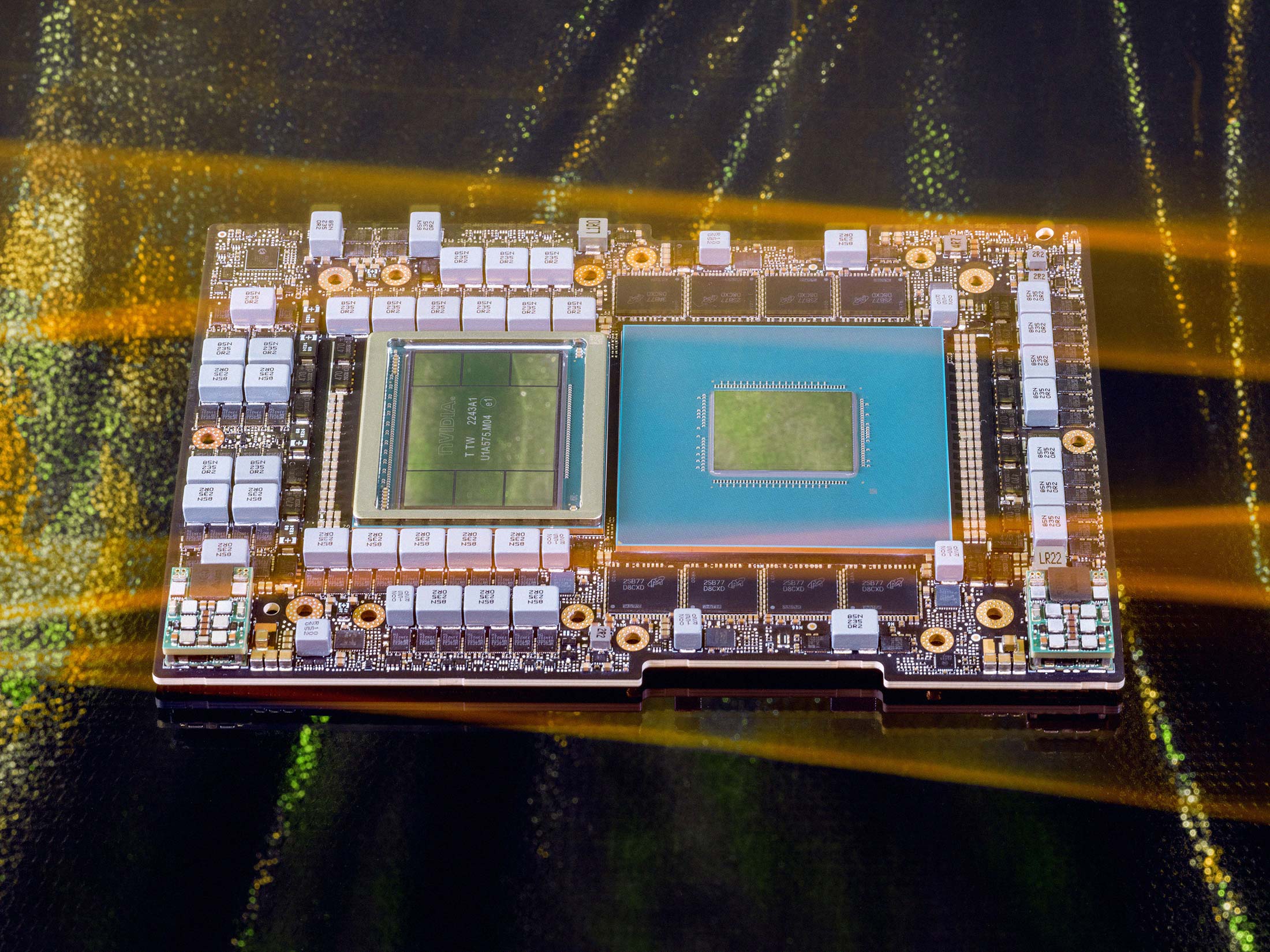

Does ChatGPT run on CPU or GPU : ChatGPT relies heavily on GPUs for its AI training, as they can handle massive amounts of data and computations faster than CPUs.

Nvidia has identified Chinese tech company Huawei as one of its top competitors in various categories such as chip production, AI and cloud services. OpenAI LP

Chat GPT is owned by OpenAI LP, an artificial intelligence research lab consisting of the for-profit OpenAI LP and its parent company, the non-profit OpenAI Inc.

Is NVIDIA ChatGPT

ChatRTX is a demo app that lets you personalize a GPT large language model (LLM) connected to your own content—docs, notes, images, or other data. Leveraging retrieval-augmented generation (RAG), TensorRT-LLM, and RTX acceleration, you can query a custom chatbot to quickly get contextually relevant answers.Chat with RTX is a secure alternative that is easily accessible and free, making it a game-changer in the AI chat landscape. Discover more about this revolutionary tool and how to install it for yourself.Also keep in mind that a single GPU like the NVIDIA RTX 3090 or A5000 can provide significant performance and may be enough for your application. Having 2, 3, or even 4 GPUs in a workstation can provide a surprising amount of compute capability and may be sufficient for even many large problems. Kick-start your AI journey with access to NVIDIA AI workflows—for free.

Why does ChatGPT use GPU : A GPU, with its highly parallel architecture, excels at handling numerous concurrent tasks, making it a powerhouse for data-parallel workloads such as deep learning and graphics processing.

Does ChatGPT use CUDA : In real-world applications like ChatGPT, deep learning frameworks like PyTorch handle the intricacies of CUDA, making it accessible to developers without needing to write CUDA code directly.

How many GPUs does GPT-4 use

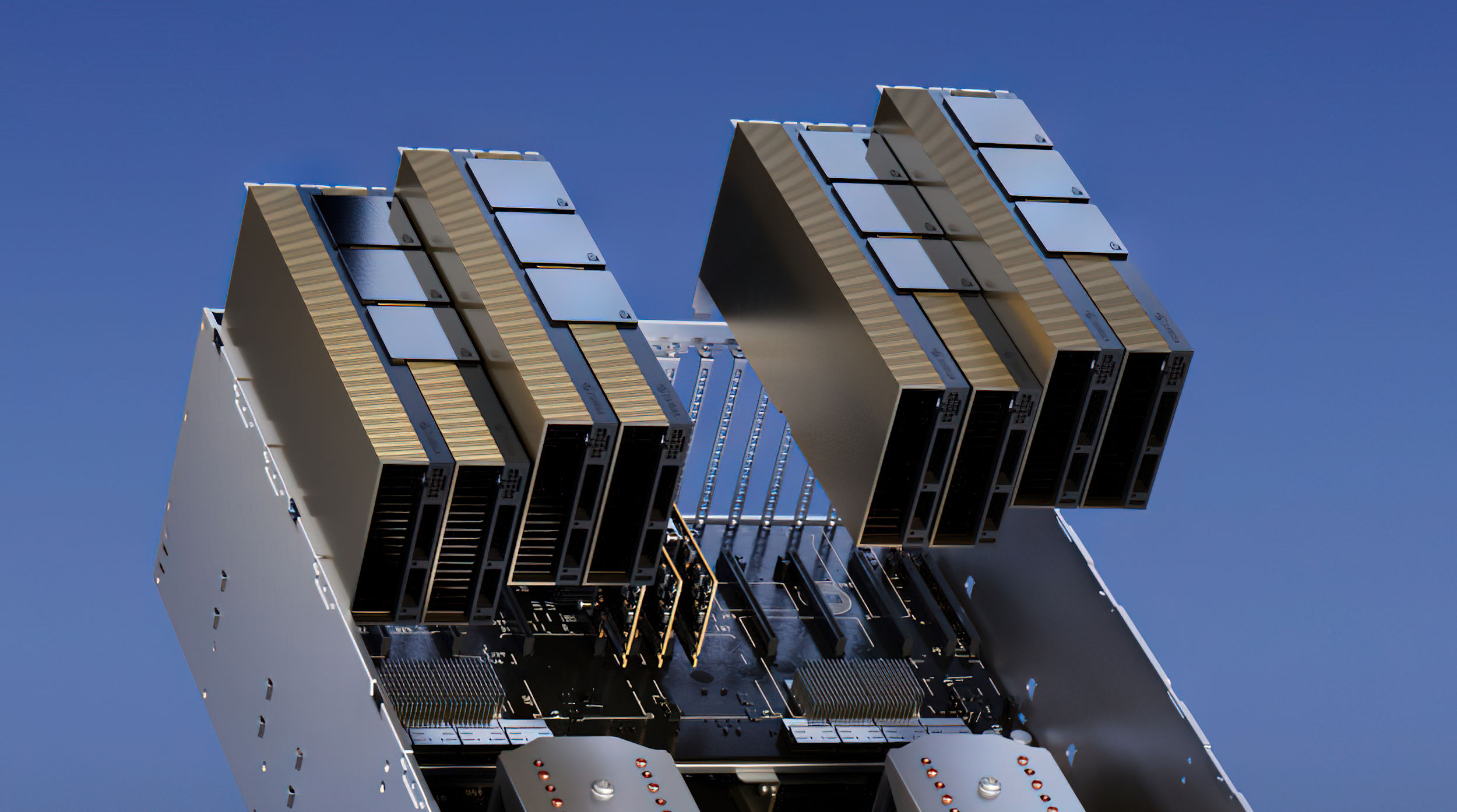

For inference, GPT-4: Runs on clusters of 128 A100 GPUs. Leverages multiple forms of parallelism to distribute processing. Nvidia's most obvious challengers are Advanced Micro Devices and Intel.Of course, while Tesla does have a custom FSD chip on board its vehicles, it also uses Nvidia chips to for training those AI autonomy models. Tesla CEO Elon Musk has pointed to Tesla's full self-driving mode as a key determinant of Tesla's future value.

What network does ChatGPT use : pretrained transformer neural network

In the case of ChatGPT, the architecture is based on pretrained transformer neural network, which is designed to process sequential data such as natural language.

Answer: Does ChatGPT use Nvidia? More answers: Does OpenAI use Nvidia?

OpenAI is now among the biggest consumers of Nvidia server clusters on the planet. The performance of its InfiniBand equipment has reportedly been unreliable at times, prompting the startup to move away from it.

For inference, NVIDIA offers the performance, efficiency, and responsiveness needed to power the next generation of AI inference in the cloud, in the data center, at the network edge, and in embedded devices.

Developers can also build on Chat with RTX. Built upon the open-source TensorRT-LLM RAG Developer Reference Project, the app serves as a starting point for custom RAG-based applications that use RTX acceleration.

What is generative AI at Nvidia : Generative AI describes technologies that generate new content from a variety of inputs. In current practice, generative AI uses neural networks to identify patterns and structures within existing data and then generates new content from them.

Is ChatGPT using Nvidia

Yes. The infrastructure that hosts ChatGPT was developed by NVIDIA and Microsoft Azure, in collaboration with OpenAI, to host ChatGPT and other large language models (LLMs) at any scale.

Does ChatGPT run on CPU or GPU : ChatGPT relies heavily on GPUs for its AI training, as they can handle massive amounts of data and computations faster than CPUs.

Nvidia has identified Chinese tech company Huawei as one of its top competitors in various categories such as chip production, AI and cloud services.

Who owns ChatGPT : OpenAI LP

ChatGPT is owned by OpenAI, an artificial intelligence research lab consisting of the for-profit OpenAI LP and its parent company, the non-profit OpenAI Inc.

Is NVIDIA ChatGPT

No. ChatRTX is a separate demo app from NVIDIA that lets you personalize a GPT large language model (LLM) connected to your own content: docs, notes, images, or other data. Using retrieval-augmented generation (RAG), TensorRT-LLM, and RTX acceleration, you can query a custom chatbot to quickly get contextually relevant answers.

Chat with RTX is a secure alternative that is free and easily accessible.

Also keep in mind that a single GPU like the NVIDIA RTX 3090 or A5000 can provide significant performance and may be enough for your application. Having 2, 3, or even 4 GPUs in a workstation can provide a surprising amount of compute capability and may be sufficient even for many large problems.
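The retrieval-augmented generation (RAG) idea mentioned for ChatRTX can be illustrated with a toy sketch. This is not how ChatRTX or TensorRT-LLM is implemented: it assumes a simple word-count similarity in place of a learned embedding model, and the names `tokenize`, `cosine`, `retrieve`, `build_prompt`, and the sample `notes` are hypothetical. It only shows the two steps: retrieve the most relevant piece of your own content, then prepend it to the question that would be sent to an LLM.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two word-count vectors (Counters)."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, documents, top_k=1):
    """Return the top_k documents most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(
        documents,
        key=lambda d: cosine(q, Counter(tokenize(d))),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(query, documents, top_k=1):
    """Prepend the retrieved context to the question (the 'augmented' step)."""
    context = "\n".join(retrieve(query, documents, top_k))
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"


# Hypothetical personal notes standing in for "your own content".
notes = [
    "The quarterly report is due on Friday.",
    "GPU drivers were updated on the workstation last week.",
    "The team lunch is scheduled for Wednesday.",
]
question = "When is the quarterly report due?"
prompt = build_prompt(question, notes)
```

In a real RAG application the word-count vectors would typically be replaced by embeddings from a neural model, and `prompt` would be passed to the LLM for generation.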


Why does ChatGPT use GPU : A GPU, with its highly parallel architecture, excels at handling numerous concurrent tasks, making it a powerhouse for data-parallel workloads such as deep learning and graphics processing.

Does ChatGPT use CUDA : In real-world applications like ChatGPT, deep learning frameworks like PyTorch handle the intricacies of CUDA, making it accessible to developers without needing to write CUDA code directly.

How many GPUs does GPT-4 use

For inference, GPT-4 reportedly runs on clusters of 128 A100 GPUs and uses multiple forms of parallelism to distribute processing.
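As a rough illustration of what combining forms of parallelism over 128 GPUs can look like, the sketch below arranges GPU ranks into a grid of pipeline stages and tensor-parallel groups. The 8-way tensor-parallel split is an assumption chosen for the example, not a confirmed GPT-4 configuration, and `build_grid` is a hypothetical helper.

```python
def build_grid(num_gpus, tensor_parallel):
    """Arrange GPU ranks into pipeline stages of tensor-parallel groups.

    Each inner list is one pipeline stage; the GPUs inside it split the
    same layers between them (tensor parallelism), while consecutive
    stages hold consecutive groups of layers (pipeline parallelism).
    """
    if num_gpus % tensor_parallel != 0:
        raise ValueError("num_gpus must be divisible by tensor_parallel")
    pipeline_stages = num_gpus // tensor_parallel
    return [
        [stage * tensor_parallel + t for t in range(tensor_parallel)]
        for stage in range(pipeline_stages)
    ]


# Assumed split for illustration only: 8-way tensor parallelism.
grid = build_grid(num_gpus=128, tensor_parallel=8)
print(f"{len(grid)} pipeline stages x {len(grid[0])} GPUs per stage")
```

Other factorizations of 128 work the same way; the trade-off is that tensor parallelism needs fast links between the GPUs in a group, while pipeline parallelism tolerates slower links but adds latency per stage.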

Nvidia's most obvious challengers are Advanced Micro Devices and Intel.

Of course, while Tesla does have a custom FSD chip on board its vehicles, it also uses Nvidia chips for training those AI autonomy models. Tesla CEO Elon Musk has pointed to Tesla's full self-driving mode as a key determinant of Tesla's future value.

What network does ChatGPT use : pretrained transformer neural network

In the case of ChatGPT, the architecture is based on a pretrained transformer neural network, which is designed to process sequential data such as natural language.
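The core operation a transformer uses to process a sequence is scaled dot-product attention. The following is a minimal pure-Python sketch of that operation, not ChatGPT's actual code: it omits the learned projection matrices, multiple heads, and masking that a real model uses, and the toy `tokens` vectors are made up for illustration.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    d = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d).
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys
        ]
        weights = softmax(scores)
        # Weighted average of the value vectors.
        outputs.append(
            [
                sum(w * v[j] for w, v in zip(weights, values))
                for j in range(len(values[0]))
            ]
        )
    return outputs


# Toy "sequence" of three 2-dimensional token vectors (self-attention).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
mixed = attention(tokens, tokens, tokens)
```

Each output row is a mixture of all token vectors, weighted by how similar they are to the token being processed, which is how the network relates words across a sentence.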