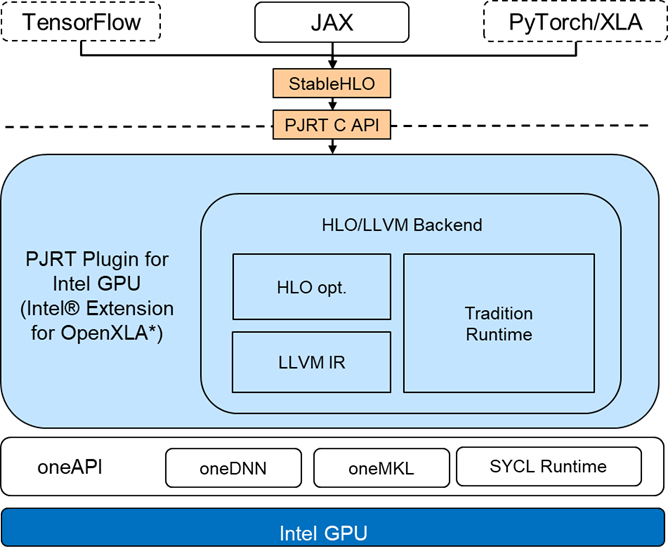

Answer: Can an Intel GPU run CUDA? More answers – Can I use CUDA with an Intel GPU

Intel does not yet support CUDA drivers on any of its GPUs, although some workarounds exist. If your primary goal is machine learning, you can still consider using Google Colab or a similar service.

Unlike OpenCL, CUDA is proprietary, so CUDA-enabled GPUs are available only from Nvidia. Attempts to implement CUDA on other GPUs include Project Coriander, which converts CUDA C++11 source to OpenCL 1.2 C (a fork of CUDA-on-CL intended to run TensorFlow), and CU2CL, which converts CUDA 3.2 C++ to OpenCL C. In addition, ZLUDA allows unmodified NVIDIA CUDA apps to run on AMD GPUs.

Can I run CUDA on CPU : CUDA code cannot run directly on a CPU, but it can be emulated. In emulation, the threads are computed in parallel as part of a vectorized loop.
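To make the emulation idea concrete, here is a minimal sketch in plain Python. It is not how any particular emulator works: the names `vector_add_kernel` and `launch_on_cpu` are made up for illustration, and a plain sequential loop stands in for the vectorized loop a real emulator would use. It only shows how a grid of CUDA-style threads can be replayed on a CPU.

```python
def vector_add_kernel(block_idx, block_dim, thread_idx, a, b, out):
    """Body of a CUDA-style kernel: each 'thread' handles one element."""
    i = block_idx * block_dim + thread_idx  # global thread index, as in CUDA
    if i < len(out):                        # guard threads past the end of the data
        out[i] = a[i] + b[i]


def launch_on_cpu(kernel, grid_dim, block_dim, *args):
    """Hypothetical launcher: replay every (block, thread) pair in a loop."""
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            kernel(block_idx, block_dim, thread_idx, *args)


a = [1.0, 2.0, 3.0, 4.0, 5.0]
b = [10.0, 20.0, 30.0, 40.0, 50.0]
out = [0.0] * len(a)

block_dim = 2
grid_dim = (len(a) + block_dim - 1) // block_dim  # enough blocks to cover all elements

launch_on_cpu(vector_add_kernel, grid_dim, block_dim, a, b, out)
print(out)  # [11.0, 22.0, 33.0, 44.0, 55.0]
```

Because the threads in this kernel do not depend on each other, the order in which the loop visits them does not matter, which is what makes this kind of replay (or a vectorized version of it) possible.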

Do all GPUs support CUDA

CUDA is a standard feature in all NVIDIA GeForce, Quadro, and Tesla GPUs as well as NVIDIA GRID solutions.

Does CUDA need GPU : OpenCL code can run on both GPUs and CPUs, whereas CUDA code executes only on GPUs. CUDA is generally faster on Nvidia GPUs and is the preferred choice of machine learning researchers.

CUDA is designed specifically for Nvidia's GPUs, whereas OpenCL works on both Nvidia and AMD GPUs.

Using Intel GPUs in OpenVINO Model Server and the benefits

Integration of Intel GPUs with OpenVINO Model Server (OVMS) brings about notable advantages in the realm of deep learning and artificial intelligence.

How do I know if my GPU can run CUDA

If you have an NVIDIA card that is listed in https://developer.nvidia.com/cuda-gpus, that GPU is CUDA-capable.
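As a rough local alternative to looking a card up in that list, the sketch below asks the NVIDIA driver tool `nvidia-smi` for each GPU's name and compute capability. It assumes a reasonably recent driver whose `nvidia-smi` supports the `compute_cap` query field, and it returns an empty list when the tool is missing or fails. The helper names are made up for illustration, and the 3.0 minimum is the figure this page cites for CUDA support.

```python
import shutil
import subprocess

MIN_CAPABILITY = (3, 0)  # minimum compute capability cited on this page


def parse_capability(text):
    """Turn a string such as '8.6' into a (major, minor) tuple of ints."""
    major, _, minor = text.strip().partition(".")
    return int(major), int(minor or 0)


def meets_minimum(capability, minimum=MIN_CAPABILITY):
    """Tuples compare element by element, so (3, 5) >= (3, 0) is True."""
    return capability >= minimum


def query_gpus():
    """Return [(name, (major, minor)), ...], or [] if nvidia-smi is unavailable."""
    if shutil.which("nvidia-smi") is None:
        return []
    try:
        result = subprocess.run(
            ["nvidia-smi", "--query-gpu=name,compute_cap", "--format=csv,noheader"],
            capture_output=True, text=True, check=True, timeout=10,
        )
    except (subprocess.SubprocessError, OSError):
        return []  # assumption: treat any failure as "no usable NVIDIA GPU found"
    gpus = []
    for line in result.stdout.splitlines():
        name, sep, cap = line.rpartition(",")
        if not sep:
            continue
        try:
            gpus.append((name.strip(), parse_capability(cap)))
        except ValueError:
            continue  # skip rows whose capability is not numeric
    return gpus


for name, cap in query_gpus():
    status = "meets minimum" if meets_minimum(cap) else "below minimum"
    print(f"{name}: compute capability {cap[0]}.{cap[1]} ({status})")
```

On a machine without an NVIDIA driver, including one with only an Intel GPU, the loop simply prints nothing.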

CUDA is supported on Windows and Linux and requires an Nvidia graphics card with compute capability 3.0 or higher. To make sure your GPU is supported, see Nvidia's list of graphics cards and their compute capabilities.

The latest Intel® Extension for PyTorch* release introduces XPU solution optimizations. XPU is a device abstraction for Intel heterogeneous computation architectures that can be mapped to a CPU, GPU, FPGA, or other accelerator. The optimizations include support for Intel GPUs.

Intel Arc Alchemist GPUs are equipped with Xe Matrix Extensions (or XMX) cores, which are essentially Intel's version of Nvidia's Tensor cores. These cores can accelerate AI workloads like Stable Diffusion.

Can I use Intel GPU for deep learning : Intel GPUs excel in parallel processing, specifically accelerating matrix math operations essential for deep learning models. OVMS efficiently utilizes this parallelism to process multiple inference requests concurrently, enhancing overall throughput.

Can TensorFlow run on Intel GPU : Intel has released the Intel® Extension for TensorFlow*, a plugin that allows TensorFlow deep learning workloads to run on Intel GPUs, including experimental support for the Intel Arc A-Series GPUs running on both native Linux* and Windows* Subsystem for Linux 2 (WSL2).
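A quick way to see whether TensorFlow has picked up an Intel device is to list its physical devices. The sketch below assumes that the extension registers Intel devices under the device type `"XPU"`, which is worth confirming against the documentation for your installed version. The helper name `list_xpu_devices` is made up for illustration, and the function returns an empty list when TensorFlow is not installed.

```python
def list_xpu_devices():
    """Return the names of TensorFlow devices of type 'XPU' (assumed type name)."""
    try:
        import tensorflow as tf
    except ImportError:
        return []  # TensorFlow is not installed in this environment
    return [device.name for device in tf.config.list_physical_devices("XPU")]


devices = list_xpu_devices()
if devices:
    print("Intel XPU devices visible to TensorFlow:", devices)
else:
    print("No XPU devices found; TensorFlow will use its default devices.")
```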

Can PyTorch run on an Intel GPU

The Intel® Extension for PyTorch* for GPU extends PyTorch with up-to-date features and optimizations for an extra performance boost on Intel graphics cards. The Extension can be used to jump-start both training and inference workloads.
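In practice, code that should run on an Intel GPU when one is present usually starts by picking a device. The following is a minimal sketch, not Intel's recommended setup: `pick_device` is a made-up helper, and it assumes the Intel backend is exposed as `torch.xpu`, either by a recent PyTorch build or by importing `intel_extension_for_pytorch`. It falls back to `"cuda"` and then `"cpu"`, and it also returns `"cpu"` when PyTorch itself is not installed.

```python
def pick_device():
    """Return 'xpu', 'cuda', or 'cpu' depending on what this machine offers."""
    try:
        import torch
    except ImportError:
        return "cpu"  # PyTorch is not installed; nothing to accelerate

    try:
        # Assumption: importing the extension registers the XPU backend
        # on PyTorch builds that do not ship it natively.
        import intel_extension_for_pytorch  # noqa: F401
    except ImportError:
        pass

    xpu = getattr(torch, "xpu", None)
    if xpu is not None and xpu.is_available():
        return "xpu"
    if torch.cuda.is_available():
        return "cuda"
    return "cpu"


device = pick_device()
print("Selected device:", device)
```

The returned string can then be passed to the usual `.to(device)` calls on models and tensors, so the rest of the script does not need to know which vendor's GPU it is running on.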

One dynamic at play was that some cloud service providers used their budgets last year to replace expensive Nvidia GPUs in existing systems rather than buying entirely new systems, which dragged down Intel CPU sales, Patrick Moorhead, president and principal analyst at Moor Insights & Strategy, recently told CRN.

Through seamless integration with the TensorFlow framework, the Intel® Extension for TensorFlow* makes Intel XPU (GPU, CPU, etc.) devices readily accessible to TensorFlow developers.

Can Intel beat Nvidia in AI : Intel probably won't soar past Nvidia to become the world's biggest AI chipmaker, but the Gaudi 3 could offer the company a much-needed boost to earnings.